As Artificial Intelligence continues to permeate critical sectors such as healthcare, education, finance, law enforcement, and governance, the demand for transparent, fair, and ethically grounded AI systems has never been more urgent. This special issue aims to bring together multidisciplinary perspectives and innovative solutions that enhance trustworthiness in AI, with an emphasis on explainability, fairness, and bias mitigation.

We welcome contributions from computer science, social science, law, ethics, public policy, and other relevant domains. The goal is to foster a comprehensive understanding of how AI systems can be designed, audited, and deployed to empower people and protect rights in both technical and social contexts.

Topics of interest include, but are not limited to:

- Explainable AI (XAI):

  - Model interpretability techniques (A1)

  - Post-hoc explanation models (A2)

  - Human-centered explanation frameworks (A3)

  - Trust calibration between humans and AI (A4)

- Fairness in AI:

  - Algorithmic fairness and equity-aware design (B1)

  - Discrimination-aware machine learning (B2)

  - Socio-technical approaches to fairness (B3)

  - Measurement and auditing of bias (B4)

- Bias Mitigation Techniques:

  - Pre-processing, in-processing, and post-processing solutions (C1)

  - Causal inference for bias detection and removal (C2)

  - De-biasing large language models and generative systems (C3)

  - Bias in training data and representation learning (C4)

- Regulation, Ethics, and Governance:

  - Ethical and legal frameworks for trustworthy AI (D1)

  - Responsible AI policies across nations (D2)

  - Transparency and accountability in algorithmic decision-making (D3)

  - Risk assessment and compliance in AI deployment (D4)

- Cross-Disciplinary Case Studies:

  - Trustworthy AI in healthcare, education, law, finance, and smart cities (E1)

  - Human-AI collaboration in high-stakes domains (E2)

  - Citizen-centric design of AI systems (E3)

  - Societal impact assessments of AI tools (E4)

- Tools, Frameworks, and Benchmarks:

  - Open-source libraries for fairness and XAI (F1)

  - Evaluation metrics for trust and transparency (F2)

  - Toolkits for bias detection and reporting (F3)

  - Datasets for trustworthy AI research (F4)

- Any Other Topic (G1)

Submission Guidelines

- Submit the initial manuscript as a PDF file, and do not include author names or affiliations in it. Remember: DO NOT INCLUDE AUTHOR NAMES IN THE PDF FILE OF THE INITIAL SUBMISSION.

- Download the Online Submission Guidelines for the step-by-step submission process.

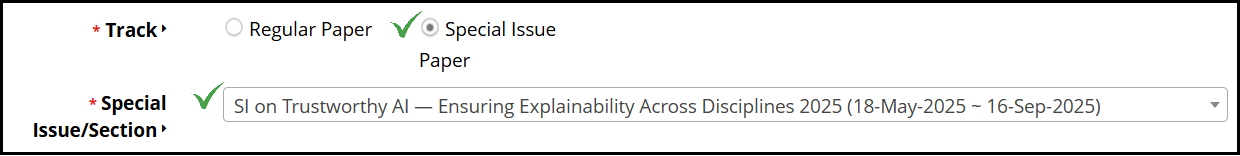

- During online submission, select Special Issue Paper from the Track menu, and then select SI on Trustworthy AI — Ensuring Explainability Across Disciplines 2025 under Special Issue/Selection (see the attached screenshot).

- In the cover letter section, authors must specify between one and three topic codes (from A1 to G1) and the invitation code.

Click the “online submission” button to submit your manuscript. You must register first.

Important Dates

- Manuscript Submission Deadline: 15th September 2025

- Notification of Acceptance: 15th November 2025

- Final Manuscript Due: 15th December 2025

- Publication Date: Two to three weeks after final submission

Guest Editorial Board

Guest Editor-in-Chief

- Dr. Shiladitya Munshi, Dean, School of Engineering & Architecture, Techno India University, Tripura, India

Guest Editors

- Dr. Ayan Chakraborty, Principal, Techno International Newtown, Kolkata, India

- Dr. Kamalesh Karmakar, Associate Professor, Department of Information Technology, Techno International Newtown, Kolkata, India

Publication Fee

Publishing an article in Advances in Science, Technology and Engineering Systems Journal requires Article Processing Charges, which are billed to the submitting author after the article is accepted for publication. For this special issue, invited articles receive a 15% discount on the publication charge, applied after any country-based discount. For more information, visit the publication fee page: https://www.astesj.com/publication-fee/.