User’s Demographic Characteristic on the Evaluation of Gamification Interactive Typing for Primary School Visually Impaired with System Usability Scale

Volume 5, Issue 5, Page No 876–881, 2020

Adv. Sci. Technol. Eng. Syst. J. 5(5), 876–881 (2020);

DOI: 10.25046/aj0505107


Keywords: Usability, Gamification, Unity, Visually Impaired, System Usability Scale, Evaluation

This paper extends the gamified interactive typing application for visually impaired primary school children in Indonesia, with improvements based on feedback from previous users. The study focuses on updating the application, developed with the Unity engine, and evaluating the updated version with visually impaired children. Good gamification standards are worth studying because they can increase motivation to learn, but only when the application meets its users' needs. To that end, several sections were redeveloped: the home page, text input, text size, and scoring. The application is evaluated with the System Usability Scale (SUS), and the data are analyzed with descriptive statistics (means), the Pearson product-moment correlation, t-tests, and ANOVA. The t-tests show no significant difference in SUS scores between partially and fully visually impaired participants, between genders, or between participants who had and had not used a similar application, but they do show a difference between students and teachers. The grade score does not significantly affect the SUS score, which indicates that the average SUS score of 75 is an objective assessment by the users even though their average grade score is 46. Moreover, both usability (0.884) and learnability (0.771) are positively correlated with the overall SUS score, whereas usability and learnability are only weakly correlated with each other (0.383). This research may benefit industry, especially the education sector in Indonesia, and factors suggested by these results, including users' experience, knowledge, and skills, need to be evaluated in further research. In the future, this application may help improve the standard of living of visually impaired people and support Industry 4.0 in Indonesia.

1. Introduction

The International Organization for Standardization, in ISO 9241-11, defines usability as the “extent to which a product can be used by specified users to achieve specified goals with effectiveness, efficiency, and satisfaction in a specified context of use” [1].

This paper extends the gamified interactive typing application for visually impaired primary school children in Indonesia [2], addressing the suggestions of that study on the effectiveness of games in learning for visually impaired children. Those suggestions fall into several categories: name page, score, subject matter, sound, user experience, and information. This study focuses on updating the application, developed with the Unity engine, and evaluating the latest version with visually impaired children.

There are many models for evaluating the usability of an application or software, such as the User Experience Questionnaire (UEQ) [3], Game Experience Questionnaire (GEQ) [4–6], Computer System Usability Questionnaire (CSUQ) [7], Quality Function Deployment (QFD) [8], Questionnaire for User Interface Satisfaction (QUIS), and System Usability Scale (SUS). Tullis and Stetson [9] report that CSUQ, QUIS, and SUS reach only 30–40% accuracy with a sample size of 6, whereas SUS rises to about 75% accuracy at a sample size of 8 and reaches 100% at a sample size of 12. In this paper, we use the System Usability Scale developed by John Brooke [10]. SUS has been used to assess a nutrition application that provides an effective human and virtual coaching approach to raise parents' awareness of children's eating behavior and lifestyle [11]. It has also been used to assess user satisfaction with interactive maps for the visually impaired [12] and with e-learning systems [13]. Furthermore, the questionnaire has been adapted with GEQ and UEQ so that applications can be tailored to the needs of users.

2. Research Method

Based on the results of the previous paper [2], good gamification standards are worth studying because they can increase learning motivation when user needs are met. After evaluation, applications typically require further development based on user input so that the expected goals are achieved.

This application, which is intended for blind primary school students in Indonesia, was developed with the Unity engine, a cross-platform system that can target current technology such as personal computers, mobile devices, head-mounted displays, the Internet of Things, and various other platforms (see Figure 1).

Figure 1: Platforms Compatible with the Unity Engine [14]

The Unity engine also offers the features needed to create attractive, engaging content, together with continuous engine upgrades, multi-platform support, documentation, forums, and tutorials; as a result, many developers are eager to use it.

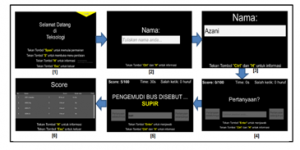

Figure 2: Page Flow of Application

In this paper, we adopt the DECIDE framework to guide the evaluation. Rogers et al. (2011) [15] propose this framework for usability evaluation, and it has been used as a guide for evaluating the usability of the LMS Moodle by Melton [16], of a Planning Support System (PSS) by Russo et al. (2015) [17], and of a Moodle-based virtual laboratory for open-source programming in a virtual classroom in 2016 [18]. The framework consists of six stages:

- Determine the Evaluation Goals

In this first phase, we determine the purpose of this evaluation. The purpose is to evaluate the usability of the updated application.

Table 1: Comparison of the Previous and Current Application

| Section | Old Application | New Application |
|---|---|---|
| Home Page | The information section is still unclear, and some button sounds are ambiguous. More information (a tutorial) is required. In addition, the questions are not varied: only questions for classes 1 and 2 are available. | The application now provides information (tutorials) and several selection keys: the “space” button to start the game, the “S” button to open the scoring menu, the “H” button for information, and the “Esc” button to exit the game, as shown in Figure 2 no. 1. In addition, the levels are more varied: besides classes 1 and 2, they now cover classes 3 to 6 of elementary school, adapted to the curriculum. |
| Input Text | The application has no backspace tone, so the user does not know which letters have been deleted. Also, for each question the user is not told which buttons can be used, so additional information for the user is not yet clear. | As shown in Figure 2 no. 2 and no. 3, the application now plays a backspace tone, so when the user deletes a letter, it is easier to determine which letters to input next. |
| Text Size | The text is small, and there is no option to continue to the next question or return to the previous one. | The text is larger and clearer, and the user can choose to proceed to the next question or return to the previous one. On each question page, as shown in Figure 2 no. 4 and no. 5, the application tells the user the position of the letter being typed. When the user enters an answer, the application guides the user through the next step and offers options to continue or exit the application. |
| Scoring | The information shown to the user includes the current score, the total time required, the number of questions completed, and the number of letters entered incorrectly. | As shown in Figure 2 no. 6, the application displays the user's score, the total time required, and the number of incorrect letters entered, and offers selection buttons: the “H” button for information and the “Esc” button to exit. |

- Explore the Questions

There were 13 visually impaired participants: 9 elementary school students and 4 teachers. The questionnaire, adopted from the System Usability Scale (SUS), contains 10 items on satisfaction, efficiency, and effectiveness, each rated on a Likert scale from 1 (strongly disagree) to 5 (strongly agree), to capture users' usability assessments of this application.

Table 2: List of Questionnaires

| No. | System Usability Scale item |
|---|---|
| 1 | I think that I would like to use this application frequently. |
| 2 | I found the application unnecessarily complex. |
| 3 | I thought the application was easy to use. |
| 4 | I think that I would need the support of a technical person to be able to use this application. |
| 5 | I found the various functions in this application were well integrated. |
| 6 | I thought there was too much inconsistency in this application. |
| 7 | I would imagine that most people would learn to use this application very quickly. |
| 8 | I found the application very cumbersome to use. |
| 9 | I felt very confident using the application. |
| 10 | I needed to learn a lot of things before I could get going with this application. |

Table 2 lists the SUS questionnaire. Odd-numbered items are positive statements, scored as the scale position minus 1, while even-numbered items are negative statements, scored as 5 minus the scale position. Each item therefore contributes 0 to 4 points, and the sum of the ten contributions is multiplied by 2.5, so the SUS score ranges from 0 (completely unusable) to 100 (completely usable).
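The scoring rule above can be sketched in Python as follows. This is a minimal illustration assuming the standard SUS scoring described by Brooke [10]; the `RESPONSES` list is transcribed from Table 3, and the function names are our own, not the authors' code.

```python
# Raw 1-5 Likert answers to Q1..Q10, one row per participant (Table 3).
RESPONSES = [
    [5, 3, 4, 2, 5, 1, 3, 1, 5, 1],
    [5, 1, 5, 2, 5, 3, 4, 2, 3, 2],
    [5, 3, 5, 4, 5, 5, 5, 3, 4, 4],
    [5, 3, 5, 2, 4, 2, 5, 2, 5, 5],
    [5, 1, 5, 5, 5, 1, 5, 1, 5, 5],
    [5, 2, 4, 3, 3, 2, 4, 1, 4, 4],
    [5, 3, 4, 3, 5, 2, 5, 2, 4, 3],
    [5, 2, 4, 3, 3, 4, 5, 2, 5, 3],
    [5, 3, 4, 2, 4, 3, 4, 3, 4, 3],
    [4, 3, 4, 4, 4, 1, 4, 2, 5, 3],
    [5, 2, 5, 3, 5, 3, 5, 2, 4, 4],
    [5, 3, 4, 2, 4, 2, 4, 2, 5, 3],
    [4, 1, 5, 1, 4, 1, 4, 1, 5, 2],
]


def item_contributions(answers):
    """Map ten 1-5 answers to 0-4 contributions.

    Odd-numbered items (Q1, Q3, ...) are positive statements: answer - 1.
    Even-numbered items (Q2, Q4, ...) are negative statements: 5 - answer.
    """
    if len(answers) != 10 or not all(1 <= a <= 5 for a in answers):
        raise ValueError("SUS expects ten answers on a 1-5 scale")
    # Index 0 holds Q1, so even indices are the odd-numbered (positive) items.
    return [a - 1 if i % 2 == 0 else 5 - a for i, a in enumerate(answers)]


def sus_score(answers):
    """Sum the contributions (0-40) and multiply by 2.5 to get a 0-100 score."""
    return sum(item_contributions(answers)) * 2.5


scores = [sus_score(row) for row in RESPONSES]
print(scores)
print("mean SUS:", sum(scores) / len(scores))
```

Running it gives unrounded scores of 62.5 and 67.5 for participants 3 and 9 (shown as 63 and 68 in Table 3) and a mean of exactly 75.0.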

- Choose the Evaluation and Data Collection Methods

The evaluation was conducted in a controlled setting involving users. Before testing, each user was given brief guidance on the application, and then they completed the questionnaire about it.

- Identify the Practical Issues

As Russo et al. [17] state, several practical issues must be considered when conducting an evaluation. Here, the participants were chosen to represent the application's target users: visually impaired teachers and students from the elementary school were asked to take part in evaluating the application. Because the participants rely on voice, while the questionnaire was given in written form, volunteers read out each question; the participants answered orally, and the volunteers recorded the answers in writing.

- Decide How to Deal with the Ethical Issues

The participants were informed about the data collected during the evaluation and that it would be used for this research.

- Evaluate, Analyze, Interpret, and Present the data

In this phase, the results were analyzed against the participants' profiles: gender (female or male), age, role (teacher, or student with grade), and type of visual impairment (fully or partially visually impaired). Participants were also asked whether they had experience using a similar application.

In addition, following Bangor et al. (2008) [19] and Lewis and Sauro [20], this study treats the SUS questionnaire as measuring two variables: usability (8 items) and learnability (2 items). Questions 1, 2, 3, 5, 6, 7, 8, and 9 are grouped into the usability variable, and questions 4 and 10 into the learnability variable. Validity is tested with the Pearson product-moment correlation, and reliability is examined with Cronbach's alpha [21].
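The subscale split and the Pearson product-moment correlation can be sketched in Python as below. This is a minimal illustration under our own assumptions: it scores each subscale as the sum of its items' 0-4 contributions, uses Lewis and Sauro's grouping (items 1, 2, 3, 5, 6, 7, 8, and 9 for usability; items 4 and 10 for learnability), and hand-rolls Pearson's r to stay dependency-free. The paper does not state exactly which inputs (raw answers, contributions, or rescaled subscale scores) were correlated, so the coefficients this sketch prints are not guaranteed to match Table 4.

```python
import math

# Raw 1-5 answers to Q1..Q10 per participant, transcribed from Table 3.
RESPONSES = [
    [5, 3, 4, 2, 5, 1, 3, 1, 5, 1], [5, 1, 5, 2, 5, 3, 4, 2, 3, 2],
    [5, 3, 5, 4, 5, 5, 5, 3, 4, 4], [5, 3, 5, 2, 4, 2, 5, 2, 5, 5],
    [5, 1, 5, 5, 5, 1, 5, 1, 5, 5], [5, 2, 4, 3, 3, 2, 4, 1, 4, 4],
    [5, 3, 4, 3, 5, 2, 5, 2, 4, 3], [5, 2, 4, 3, 3, 4, 5, 2, 5, 3],
    [5, 3, 4, 2, 4, 3, 4, 3, 4, 3], [4, 3, 4, 4, 4, 1, 4, 2, 5, 3],
    [5, 2, 5, 3, 5, 3, 5, 2, 4, 4], [5, 3, 4, 2, 4, 2, 4, 2, 5, 3],
    [4, 1, 5, 1, 4, 1, 4, 1, 5, 2],
]

# Item groups from Lewis and Sauro's two-factor model (1-based item numbers).
USABILITY_ITEMS = (1, 2, 3, 5, 6, 7, 8, 9)
LEARNABILITY_ITEMS = (4, 10)


def contributions(answers):
    """0-4 SUS contributions: odd items answer - 1, even items 5 - answer."""
    return [a - 1 if i % 2 == 0 else 5 - a for i, a in enumerate(answers)]


def subscale(answers, items):
    """Sum of the contributions of the listed (1-based) items."""
    c = contributions(answers)
    return sum(c[item - 1] for item in items)


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


usability = [subscale(r, USABILITY_ITEMS) for r in RESPONSES]
learnability = [subscale(r, LEARNABILITY_ITEMS) for r in RESPONSES]
overall = [u + l for u, l in zip(usability, learnability)]

print("usability vs overall:", round(pearson(usability, overall), 3))
print("learnability vs overall:", round(pearson(learnability, overall), 3))
print("usability vs learnability:", round(pearson(usability, learnability), 3))
```

On Python 3.10 or later, `statistics.correlation` from the standard library computes the same Pearson coefficient and could replace the hand-written `pearson`.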

Next, t-tests are used to compare SUS scores by gender, role, type of visual impairment, and experience with a similar application. Analysis of variance (ANOVA) is used to test hypotheses that compare two or more groups; it has been used in research on road damage classification [22], factors affecting behavioral intention [23], and factors that determine consumer perceptions [24]. In this study, ANOVA is used to determine whether the score obtained in the application (the grade score in Table 3) affects the SUS score. Conclusions are then drawn from these tests.

3. Results and Analysis

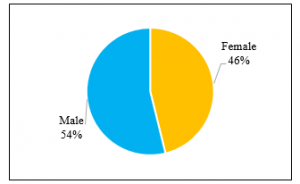

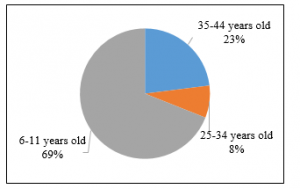

The response rate was 100 percent. As shown in Figure 3 and Figure 4, 7 of the 13 participants (54%) were male and the remaining 6 (46%) were female; 69% were 6–11 years old, 8% were 25–34 years old, and 23% were 35–44 years old.

Figure 3: Percentage of Participants by Gender

Table 3 shows the SUS scores calculated from questions 1 to 10 with the method described in Section 2. Bangor et al. (2008) [19] relate SUS scores to acceptability levels: scores below 50 are not acceptable, scores between 70 and 80 are acceptable, and scores above 90 are excellent. In this study, the average SUS score is 75 out of 100, which falls in the acceptable range.

Cronbach's alpha [21] is calculated to examine the reliability of the SUS results. Hossain [25] reports that reliability is acceptable when 0.6 ≤ α ≤ 0.7; with α = 0.601, the SUS results in this study are therefore acceptably reliable.
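A minimal Python sketch of the Cronbach's alpha formula follows. The function name and the toy data are hypothetical; the input is assumed to be a matrix with one row per respondent and one column per item (for example, each participant's ten SUS item contributions). It uses sample variances, as is conventional; the paper does not say which variance convention its software used.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a matrix with one row per respondent, one column per item.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores),
    using sample variances (n - 1 denominator) throughout.
    """
    k = len(scores[0])
    if k < 2 or len(scores) < 2:
        raise ValueError("need at least two items and two respondents")
    # zip(*scores) transposes the matrix so each tuple is one item's column.
    item_variance_sum = sum(variance(column) for column in zip(*scores))
    total_variance = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical 3-respondent, 2-item example (not data from this study).
example = [[1, 1], [2, 3], [3, 2]]
print(round(cronbach_alpha(example), 3))  # 0.667
```

Alpha rises toward 1 as the items co-vary more strongly, because the variance of the total then grows faster than the sum of the individual item variances.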

Figure 4: Percentage of Participants by Age

Table 3: SUS Score Results

| Participant | Q1 | Q2 | Q3 | Q4 | Q5 | Q6 | Q7 | Q8 | Q9 | Q10 | SUS Score | Grade Score |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 5 | 3 | 4 | 2 | 5 | 1 | 3 | 1 | 5 | 1 | 85 | 4 |
| 2 | 5 | 1 | 5 | 2 | 5 | 3 | 4 | 2 | 3 | 2 | 80 | 63 |
| 3 | 5 | 3 | 5 | 4 | 5 | 5 | 5 | 3 | 4 | 4 | 63 | 37 |
| 4 | 5 | 3 | 5 | 2 | 4 | 2 | 5 | 2 | 5 | 5 | 75 | 72 |
| 5 | 5 | 1 | 5 | 5 | 5 | 1 | 5 | 1 | 5 | 5 | 80 | 69 |
| 6 | 5 | 2 | 4 | 3 | 3 | 2 | 4 | 1 | 4 | 4 | 70 | 60 |
| 7 | 5 | 3 | 4 | 3 | 5 | 2 | 5 | 2 | 4 | 3 | 75 | 23 |
| 8 | 5 | 2 | 4 | 3 | 3 | 4 | 5 | 2 | 5 | 3 | 70 | 32 |
| 9 | 5 | 3 | 4 | 2 | 4 | 3 | 4 | 3 | 4 | 3 | 68 | 46 |
| 10 | 4 | 3 | 4 | 4 | 4 | 1 | 4 | 2 | 5 | 3 | 70 | 48 |
| 11 | 5 | 2 | 5 | 3 | 5 | 3 | 5 | 2 | 4 | 4 | 75 | 51 |
| 12 | 5 | 3 | 4 | 2 | 4 | 2 | 4 | 2 | 5 | 3 | 75 | 73 |
| 13 | 4 | 1 | 5 | 1 | 4 | 1 | 4 | 1 | 5 | 2 | 90 | 23 |

Table 4: Correlation on Usability and Learnability

| | All items (SUS) | Usability |
|---|---|---|
| Usability | 0.884 | 1 |
| Learnability | 0.771 | 0.383 |

Based on the Pearson product-moment correlation (Table 4), both usability (0.884) and learnability (0.771) are positively correlated with the overall System Usability Scale score, whereas usability and learnability are only weakly correlated with each other (0.383).

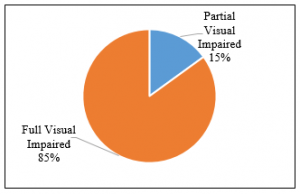

As shown in Figure 5, 11 participants (85%) were fully visually impaired and 2 (15%) were partially visually impaired.

A t-test at the 5% significance level was conducted to test Ha1: the SUS score of partially visually impaired participants differs from the SUS score of participants with total loss of vision. In Table 5, the two-tailed p-value of 0.123 is above 0.05, so there is no statistically significant difference between the SUS scores of partially and fully visually impaired participants.

Figure 5: Percentage of Participants by Type of Visual Impairment

Table 5: T-Test Results on SUS Score of Partially and Fully Visually Impaired Participants

| | Partial | Full |
|---|---|---|
| Mean | 82.5 | 73.727 |
| Variance | 12.5 | 50.018 |
| Observations | 2 | 11 |
| Pooled Variance | 46.6 | |
| Hypothesized Mean Difference | 0 | |
| df | 11 | |
| t Stat | 1.672 | |
| P(T<=t) one-tail | 0.061 | |
| t Critical one-tail | 1.796 | |
| P(T<=t) two-tail | 0.123 | |
| t Critical two-tail | 2.201 | |
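The layout of Tables 5 to 8 (a pooled variance and df = n1 + n2 - 2) matches a two-sample t-test assuming equal variances, which we assume is the test used. The sketch below recomputes the t statistic from the summary statistics in Table 5; the function name is hypothetical and this is our reconstruction, not the authors' code.

```python
import math


def pooled_t_test(mean1, var1, n1, mean2, var2, n2):
    """Two-sample t statistic assuming equal variances, from summary statistics.

    Returns (t statistic, pooled variance, degrees of freedom).
    """
    df = n1 + n2 - 2
    # Pooled variance weights each sample variance by its degrees of freedom.
    pooled = ((n1 - 1) * var1 + (n2 - 1) * var2) / df
    standard_error = math.sqrt(pooled * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / standard_error, pooled, df


# Summary statistics from Table 5 (partial vs. full visual impairment).
t, pooled, df = pooled_t_test(82.5, 12.5, 2, 73.727, 50.018, 11)
print(f"t = {t:.3f}, pooled variance = {pooled:.1f}, df = {df}")
```

The same function gives t ≈ 0.179 for the gender comparison in Table 6 and t ≈ -0.239 for Table 8. Two-tailed p-values additionally need the Student t distribution; if SciPy is available, `scipy.stats.ttest_ind_from_stats(mean1, std1, nobs1, mean2, std2, nobs2, equal_var=True)` returns both the statistic and the p-value (note that it takes standard deviations, not variances).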

A t-test was also conducted to compare SUS scores by gender, testing Ha2: the SUS score of male participants differs from the SUS score of female participants.

Table 6 shows no significant difference in SUS score by gender (two-tailed p = 0.861).

Table 6: T-Test Results on SUS Score by Gender

| | Male | Female |
|---|---|---|
| Mean | 75.429 | 74.667 |
| Variance | 52.952 | 64.667 |
| Observations | 7 | 6 |
| Pooled Variance | 58.277 | |
| Hypothesized Mean Difference | 0 | |
| df | 11 | |
| t Stat | 0.179 | |
| P(T<=t) one-tail | 0.430 | |
| t Critical one-tail | 1.796 | |
| P(T<=t) two-tail | 0.861 | |
| t Critical two-tail | 2.201 | |

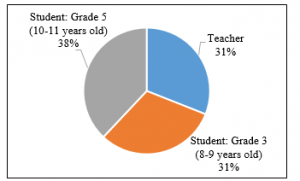

Of the 13 visually impaired participants, 4 (31%) were teachers and 9 (69%) were students, as shown in Figure 6.

Figure 6: Percentage of Participants by Role

Table 7: T-Test Results on SUS Score by Role

| | Student | Teacher | Grade 5 | Grade 3 |
|---|---|---|---|---|
| Mean | 72.3 | 81.25 | 73.6 | 70.75 |
| Variance | 31.75 | 56.25 | 52.3 | 8.917 |
| Observations | 9 | 4 | 5 | 4 |
| Pooled Variance | 38.432 | | 33.707 | |
| Hypothesized Mean Difference | 0 | | 0 | |
| df | 11 | | 7 | |
| t Stat | -2.394 | | 0.732 | |
| P(T<=t) one-tail | 0.018 | | 0.244 | |
| t Critical one-tail | 1.796 | | 1.895 | |
| P(T<=t) two-tail | 0.036 | | 0.488 | |
| t Critical two-tail | 2.201 | | 2.365 | |

T-tests were also performed to compare SUS scores between students and teachers, testing Ha3: the SUS score of students differs from the SUS score of teachers, and between grade 5 and grade 3 students, testing Ha4: the SUS score of grade 5 students differs from the SUS score of grade 3 students.

The results in Table 7 show a significant difference between the SUS scores of students and teachers (two-tailed p = 0.036), but no significant difference between students in grade 3 and grade 5 (two-tailed p = 0.488).

Some participants had used a similar application before, so SUS scores were also compared between participants who had and had not used a similar application. Table 8 shows no significant difference between the two groups (two-tailed p = 0.815).

Table 8: T-Test Results on SUS Score of Participants Who Had and Had Not Used a Similar Application

| | No | Yes |
|---|---|---|
| Mean | 74.8 | 76 |
| Variance | 30.4 | 183 |
| Observations | 10 | 3 |
| Pooled Variance | 58.145 | |
| Hypothesized Mean Difference | 0 | |
| df | 11 | |
| t Stat | -0.239 | |
| P(T<=t) one-tail | 0.408 | |
| t Critical one-tail | 1.796 | |
| P(T<=t) two-tail | 0.815 | |
| t Critical two-tail | 2.201 | |

After the t-tests on the SUS score, another hypothesis was formulated, Ha5: the grade score affects the SUS score (with the null hypothesis that it does not).

Both the p-value and Significance F (0.415) exceed 0.05, indicating that the grade score does not significantly affect the SUS score, as shown in Table 9.

Table 9: ANOVA and Partial (T) Results

| R Square | P-Value | F | Sig. F |
|---|---|---|---|
| 0.061 | 0.415 | 0.717 | 0.415 |
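Table 9's columns (R Square, F, Significance F) match the ANOVA table of a simple linear regression of SUS score on grade score, which we assume is the analysis that was run. The sketch below recomputes R² and F from the two columns of Table 3, using the SUS scores as printed (rounded); the function name is hypothetical. The p-value (Significance F) additionally needs the F(1, n - 2) distribution, for example `scipy.stats.f.sf(f_stat, 1, 11)` if SciPy is available; it is omitted here to keep the sketch dependency-free.

```python
# Grade score and SUS score columns of Table 3 (SUS as printed, i.e. rounded).
GRADE = [4, 63, 37, 72, 69, 60, 23, 32, 46, 48, 51, 73, 23]
SUS = [85, 80, 63, 75, 80, 70, 75, 70, 68, 70, 75, 75, 90]


def simple_regression_anova(x, y):
    """Least-squares fit of y on x; returns (slope, R squared, F statistic)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    ss_regression = slope * sxy            # variation explained by the fit
    ss_residual = syy - ss_regression      # unexplained variation
    r_squared = ss_regression / syy
    # One predictor: regression df = 1, residual df = n - 2.
    f_stat = ss_regression / (ss_residual / (n - 2))
    return slope, r_squared, f_stat


slope, r_squared, f_stat = simple_regression_anova(GRADE, SUS)
print(f"slope = {slope:.3f}, R^2 = {r_squared:.3f}, F = {f_stat:.3f}")
```

With these inputs the sketch reproduces R² ≈ 0.061 and F ≈ 0.717; the fitted slope is slightly negative, which is consistent with the grade score having no meaningful effect on the SUS score.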

4. Conclusion

Although the average grade score is 46, the average SUS score is 75 out of 100, which falls in the acceptable range for the application's usability. The SUS results are acceptably reliable (α = 0.601). Both usability (0.884) and learnability (0.771) are positively correlated with the overall System Usability Scale score, whereas usability and learnability are only weakly correlated with each other (0.383).

The t-tests on the SUS score show no significant difference between partially and fully visually impaired participants, between genders, or between participants who had and had not used a similar application. However, there is a significant difference between students' SUS scores (mean 72.3) and teachers' SUS scores (mean 81.3), although there is no significant difference in SUS score by students' school grade (grade 3 versus grade 5). In addition, the grade score does not significantly affect the SUS score, which suggests that the average SUS score of 75 is an objective assessment by the users even though the average grade score is 46.

Future research should therefore evaluate the factors suggested by these results, including the experience, knowledge, and skills of the users.

Acknowledgment

We thank Sekolah Luar Biasa Tuna Netra (SLB-A) Pembina Tingkat Nasional, and our participants for their help and contributions.

- ISO, “9241-11: Ergonomic requirements for office work with visual display terminals (VDTs),” The International Organization for Standardization, 1998.

- Yanfi, Y. Udjaja, A.C. Sari, “A Gamification Interactive Typing for Primary School Visually Impaired Children in Indonesia,” in Procedia Computer Science, 2017, doi:10.1016/j.procs.2017.10.032.

- Y. Udjaja, “EKSPANPIXEL BLADSY STRANICA: Performance Efficiency Improvement of Making Front-End Website Using Computer Aided Software Engineering Tool,” Procedia Computer Science, 135, 292–301, 2018, doi:10.1016/j.procs.2018.08.177.

- Y. Udjaja, V.S. Guizot, N. Chandra, “Gamification for elementary mathematics learning in Indonesia,” International Journal of Electrical and Computer Engineering, 8(5), 3859–3865, 2018, doi:10.11591/ijece.v8i5.pp3859-3865.

- Y. Udjaja, “Gamification Assisted Language Learning for Japanese Language Using Expert Point Cloud Recognizer,” International Journal of Computer Games Technology, 2018, 2018, doi:10.1155/2018/9085179.

- D.P. Kristiadi, Y. Udjaja, B. Supangat, R.Y. Prameswara, H.L.H.S. Warnars, Y. Heryadi, W. Kusakunniran, “The effect of UI, UX and GX on video games,” in 2017 IEEE International Conference on Cybernetics and Computational Intelligence, CyberneticsCOM 2017 – Proceedings, 2018, doi:10.1109/CYBERNETICSCOM.2017.8311702.

- J.R. Lewis, “IBM Computer Usability Satisfaction Questionnaires: Psychometric Evaluation and Instructions for Use,” International Journal of Human-Computer Interaction, 1995, doi:10.1080/10447319509526110.

- Y. Udjaja, Sasmoko, Y. Indrianti, O.A. Rashwan, S.A. Widhoyoko, “Designing Website E-Learning Based on Integration of Technology Enhance Learning and Human Computer Interaction,” in 2018 2nd International Conference on Informatics and Computational Sciences, ICICoS 2018, 2019, doi:10.1109/ICICOS.2018.8621792.

- T.S. Tullis, J.N. Stetson, “A Comparison of Questionnaires for Assessing Website Usability,” Usability Professional Association Conference, 2004.

- J. Brooke, “SUS-A quick and dirty usability scale,” Usability Evaluation in Industry, 1996.

- S. Gabrielli, M. Dianti, R. Maimone, M. Betta, L. Filippi, M. Ghezzi, S. Forti, “Design of a Mobile App for Nutrition Education (TreC-LifeStyle) and Formative Evaluation With Families of Overweight Children,” JMIR MHealth and UHealth, 2017, doi:10.2196/mhealth.7080.

- A. Brock, P. Truillet, B. Oriola, D. Picard, C. Jouffrais, “Design and user satisfaction of interactive maps for visually impaired people,” in Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 2012, doi:10.1007/978-3-642-31534-3_80.

- N. Harrati, I. Bouchrika, A. Tari, A. Ladjailia, “Exploring user satisfaction for e-learning systems via usage-based metrics and system usability scale analysis,” Computers in Human Behavior, 2016, doi:10.1016/j.chb.2016.03.051.

- Unity Technologies, Game engine, tools and multiplatform, Unity Technologies, 2016.

- Y. Rogers, H. Sharp, J. Preece, “Interaction Design: Beyond Human-Computer Interaction, 3rd Edition, Chapter 8: Data Analysis, Interpretation, and Presentation,” John Wiley & Sons, 2011.

- J. Melton, “The lms moodle: A usability evaluation,” Prefectural University of Kumamoto Retrieved …, 2006.

- P. Russo, M.F. Costabile, R. Lanzilotti, C.J. Pettit, “Usability of planning support systems: An evaluation framework,” in Lecture Notes in Geoinformation and Cartography, 2015, doi:10.1007/978-3-319-18368-8_18.

- A. Zakiah, “Evaluation of interaction design of virtual laboratory of open source programming in virtual classroom based on moodle using decide framework case study: C programming,” International Journal of Psychosocial Rehabilitation, 2020, doi:10.37200/IJPR/V24I2/PR200708.

- A. Bangor, P.T. Kortum, J.T. Miller, “An empirical evaluation of the system usability scale,” International Journal of Human-Computer Interaction, 2008, doi:10.1080/10447310802205776.

- J.R. Lewis, J. Sauro, “The factor structure of the system usability scale,” in Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 2009, doi:10.1007/978-3-642-02806-9_12.

- L.J. Cronbach, “Coefficient alpha and the internal structure of tests,” Psychometrika, 1951, doi:10.1007/BF02310555.

- F.E. Gunawan, Yanfi, B. Soewito, “A vibratory-based method for road damage classification,” in 2015 International Seminar on Intelligent Technology and Its Applications, ISITIA 2015 – Proceeding, 2015, doi:10.1109/ISITIA.2015.7219943.

- Y. Yanfi, Y. Kurniawan, Y. Arifin, “Factors Affecting the Behavioral Intention of using Sedayuone Mobile Application,” ComTech: Computer, Mathematics and Engineering Applications, 8(3), 137, 2017, doi:10.21512/comtech.v8i3.3722.

- F.E. Gunawan, I. Sari, Y. Yanfi, “The consumer intention to use digital membership cards,” Journal of Business & Retail Management Research, 13(04), 117–124, 2019, doi:10.24052/jbrmr/v13is04/art-10.

- G. Hossain, “Rethinking self-reported measure in subjective evaluation of assistive technology,” Human-Centric Computing and Information Sciences, 2017, doi:10.1186/s13673-017-0104-7.


- Aref Hassan Kurd Ali, Halikul Lenando, Mohamad Alrfaay, Slim Chaoui, Haithem Ben Chikha, Akram Ajouli, "Performance Analysis of Routing Protocols in Resource-Constrained Opportunistic Networks", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 6, pp. 402–413, 2019. doi: 10.25046/aj040651

- Woochun Jun, "Development of Evaluation Metrics for Learners in Unplugged Activity", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 6, pp. 216–219, 2019. doi: 10.25046/aj040628

- Pablo Pérez-Gosende, "Evaluation of Classroom Furniture Design for Ecuadorian University Students: An Anthropometry-Based Approach", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 6, pp. 163–172, 2019. doi: 10.25046/aj040620

- Aulia Rahmawati, Emil Robert Kaburuan, Anditya Arifianto, Nahda Kurnia Juniati, "CISELexia: Computer-Based Method for Improving Self-Awareness in Children with Dyslexia", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 5, pp. 258–267, 2019. doi: 10.25046/aj040532

- Rowaida Khalil Ibrahim, Subhi Rafeeq Mohammed Zeebaree, Karwan Fahmi Sami Jacksi, "Survey on Semantic Similarity Based on Document Clustering", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 5, pp. 115–122, 2019. doi: 10.25046/aj040515

- Woochun Jun, "A Study on Development of Evaluation Metrics for Learners in Physical Computing", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 5, pp. 82–87, 2019. doi: 10.25046/aj040510

- Fang-Lin Chao, Hung-Chi Chu, Liza Lee, "Enhancing Bodily Movements of the Visually Impaired Children by Airflow", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 4, pp. 308–313, 2019. doi: 10.25046/aj040439

- Hun Choi, Gyeongyong Heo, "An Enhanced Fuzzy Clustering with Cluster Density Immunity", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 4, pp. 239–243, 2019. doi: 10.25046/aj040429

- Shanmuganathan Vasanthapriyan, Malith De Silva, "Optical Braille Recognition Software Prototype for the Sinhala Language", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 4, pp. 221–229, 2019. doi: 10.25046/aj040427

- Anna Tatsiopoulou, Christos Tatsiopoulos, Basillis Boutsinas, "Providing Underlying Process Mining in Gamified Applications – An Intelligent Knowledge Tool for Analyzing Game Player’s Actions", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 4, pp. 212–220, 2019. doi: 10.25046/aj040426

- Vaibhav B. Vaijapurkar, Yerram Ravinder, "Development of Tactile Display and an Efficient Approach to Enhance Perceptual Analysis in Rehabilitation", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 4, pp. 01–11, 2019. doi: 10.25046/aj040401

- Fang-Lin Chao, Hung-Chi Chu, Liza Lee, "Robot-Assisted Posture Emulation for Visually Impaired Children", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 1, pp. 193–199, 2019. doi: 10.25046/aj040119

- Denise Ashe, Alan Eardley, Bobbie Fletcher, "An Empirical Study of Icon Recognition in a Virtual Gallery Interface", Advances in Science, Technology and Engineering Systems Journal, vol. 3, no. 6, pp. 289–313, 2018. doi: 10.25046/aj030637

- Khalifa Sylla, Samuel Ouya, Masamba Seck, Gervais Mendy, "The Value of Integrating MSRP Protocol in E-learning Platforms of Universities", Advances in Science, Technology and Engineering Systems Journal, vol. 3, no. 5, pp. 321–327, 2018. doi: 10.25046/aj030538

- Htwe Nu Win, Khin Thidar Lynn, "Community Detection in Social Network with Outlier Recognition", Advances in Science, Technology and Engineering Systems Journal, vol. 3, no. 2, pp. 21–27, 2018. doi: 10.25046/aj030203

- Mustafa Çöçelli, Ethem Arkın, "A Category Based Threat Evaluation Model Using Platform Kinematics Data", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 3, pp. 1330–1341, 2017. doi: 10.25046/aj0203168

- Shuji Shinohara, Yasuhiro Omiya, Mitsuteru Nakamura, Naoki Hagiwara, Masakazu Higuchi, Shunji Mitsuyoshi, Shinichi Tokuno, "Multilingual evaluation of voice disability index using pitch rate", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 3, pp. 765–772, 2017. doi: 10.25046/aj020397

- Adeyemi I. Olabisi, Thankgod E. Boye, Emagbetere Eyere, "Evaluation of Pure Aluminium Inoculated with Varying Grain Sizes of an Agro-waste based Inoculant", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 4, pp. 14–25, 2017. doi: 10.25046/aj020403

- Martín, María de los Ángeles, Diván, Mario José, "Applications of Case Based Organizational Memory Supported by the PAbMM Architecture", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 3, pp. 12–23, 2017. doi: 10.25046/aj020303

- Dillon Chrimes, Belaid Moa, Mu-Hsing (Alex) Kuo, Andre Kushniruk, "Operational Efficiencies and Simulated Performance of Big Data Analytics Platform over Billions of Patient Records of a Hospital System", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 1, pp. 23–41, 2017. doi: 10.25046/aj020104

- Samantha Mathara Arachchi, Siong Choy Chong, Alik Kathabi, "System Testing Evaluation for Enterprise Resource Planning to Reduce Failure Rate", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 1, pp. 6–15, 2016. doi: 10.25046/aj020102