Classification of Wing Chun Basic Hand Movement using Virtual Reality for Wing Chun Training Simulation System

Volume 6, Issue 1, Page No 250-256, 2021

Author’s Name: Hendro Arieyanto1,a), Andry Chowanda2

1Computer Science Department, Graduate Program in Computer Studies, Bina Nusantara University, Jakarta, 11480, Indonesia

2Computer Science Department, School of Computer Science, Bina Nusantara University, Jakarta, 11480, Indonesia

a)Author to whom correspondence should be addressed. E-mail: hendro.arieyanto@binus.ac.id

Adv. Sci. Technol. Eng. Syst. J. 6(1), 250-256 (2021); DOI: 10.25046/aj060128

Keywords: Virtual reality, Martial arts, Wing Chun, Classification, Skeletal joints, Optimization

To create a Virtual Reality (VR) system for training Wing Chun’s basic hand movements, capturing and classifying movement data is an important step. The main goal of this paper is to find the best possible method of classifying hand movements, particularly Wing Chun’s basic hand movements, for use in a VR training system. This paper uses the Oculus Quest VR gear and Unreal Engine 4 to capture movement features such as location, rotation, angular acceleration, linear acceleration, angular velocity, and linear velocity. RapidMiner Studio is used to pre-process the captured data, apply the algorithms, and optimize the generated models. Support Vector Machine (SVM), Decision Tree, and k-Nearest Neighbor (kNN) are applied, optimized, and compared. In classifying 10 movements, the optimized kNN algorithm obtained the highest averaged performance indicators: accuracy of 99.94%, precision of 99.70%, recall of 99.70%, and specificity of 99.97%. The overall accuracy of the optimized kNN is 99.71%.

Received: 02 October 2020, Accepted: 28 December 2020, Published Online: 15 January 2021

1. Introduction

Martial arts training is one of the preferred methods of physical exercise. It provides health and psychological benefits, such as preventing osteoporosis [1], reducing stress and depression, increasing mindfulness [2], and potentially reducing aggressive behavior [3]. Basic movements of martial arts training, such as stances and punches, are usually practiced under the supervision of an instructor. However, an instructor cannot always attend to one student, because other students need attention as well. This can be a problem for new students who have just learned stances and punches. Repeating movements without supervision can leave students unmotivated and cause them to stop practicing, whereas having the instructor watch over them helps students keep repeating the movement. A training method is therefore needed that keeps students engaged during basic training when the instructor is not able to watch them. Watching video tutorials and reading books are some of the ways to improve the students’ training experience; however, they lack the interaction and presence needed in martial arts training.

This is where Virtual Reality (VR) technology comes into play. According to [4], VR is typically defined in technical terms, meaning it is associated with technical hardware such as computer systems, head-mounted displays (HMD), and motion trackers. VR itself can be simply defined as a computer-generated environment that simulates the real world and with which users can interact by using motion trackers [5]. The trackers are used to collect data from the real environment and translate them to the virtual environment [6].

Due to its immersive capabilities, VR technology has been used in areas such as architecture and landscape planning [7], dancing [8], military training [9], medicine, such as stroke rehabilitation [10], and psychological treatment [11]. It has also been used for research on martial arts, although such studies remain few. VR is attractive for these applications because higher immersion and presence result in higher user performance [12].

A VR-based training simulation system could help users practice the movements and could motivate them, due to its immersive capabilities [13]. To develop the system, capturing and classifying the hand movements is crucial, as being able to classify basic movements in Wing Chun is one of the basic training outcomes. This study uses the Oculus Quest, a VR gear with a head-mounted display (HMD) and a pair of controllers. These controllers are used to capture hand movements. Variables such as time, location, rotation, angular acceleration, linear acceleration, angular velocity, and linear velocity were recorded and used as features for classification.

The purpose of this paper is to find the best possible method of classifying Wing Chun’s basic hand movements in order to develop a VR-based training system. Since this paper uses the Oculus Quest with a pair of hand controllers, hand movement data can be captured in the form of location in the world space. Other properties, such as rotation, angular acceleration, linear acceleration, angular velocity, and linear velocity, can be captured as well. RapidMiner Studio [14], [15] is used to process the data, optimize the models, and analyze the results. The rest of this paper is organized as follows. Section 2 outlines the studies and works related to motion and gesture recognition or classification. Section 3 outlines the method used in this paper. Section 4 shows the results and analysis of the study. Section 5 provides the conclusion of this paper based on the results and analysis. Section 6 provides a discussion and possible further work to improve on this paper.

2. Related Works

The study by [16] compares three approaches to segmenting motion capture data into distinct behaviors. The first approach uses Principal Component Analysis (PCA), based on the observation that simple motions exhibit lower dimensionality than complex motions. The motion is broken into frames, and each frame is represented as a point given by the joints’ locations. A motion sequence with a single behavior should therefore have a smaller dimensionality than one with multiple behaviors. The second approach uses Probabilistic PCA. Unlike PCA, Probabilistic PCA ignores the noise of the motion. The third approach uses a Gaussian Mixture Model (GMM), in which the sequence of motion is segmented whenever two consecutive sets of frames belong to different Gaussian distributions. Probabilistic PCA obtained the best result of the three approaches: 90% precision at 95% recall.

In [17], the authors conducted a survey on sequence classification, in which it is stated that multivariate time series classification has been used for gesture and motion classification. The research by [18] captured motion data using a CyberGlove, with 22 sensors at different locations on the glove and 1 angular sensor. The data are split into 3 datasets and evaluated with K-fold validation with K = 3. The multi-attribute motion data are reduced to feature vectors with Singular Value Decomposition (SVD) and then classified using Support Vector Machine (SVM). The study reports an accuracy of 96% for dataset 1 and 100% for datasets 2 and 3.

The research by [19] used wearable accelerometers to capture movement data. Several movements such as sitting, sitting down, standing, standing up, and walking, are the subject of classification. The features that are extracted from 4 accelerometers are further pre-processed into an acceleration in x, y, and z-axis for each, resulting in 12 features. The features of the movement data are then selected with Mark Hall’s selection algorithm [20] and classified with C4.5 decision tree with AdaBoost ensemble method [21]. The study used 10 iterations of AdaBoost with C4.5 tree confidence factor of 0.25 and with 10-folds cross-validation. The overall performance recognition was 99.4%.

In [22], the authors compared the classification accuracy of several algorithms such as C4.5 decision tree, multilayer perceptron, Naive Bayes, logistic regression, and k-Nearest Neighbor (kNN). A smartphone capable of recording accelerometer and gyroscope data was used as the motion sensor. The Waikato Environment for Knowledge Analysis (WEKA) machine learning tool was used to apply the algorithms and compare them. The result of the study shows that the kNN algorithm obtained the highest averaged accuracy across all motion classifications, at 84.6%.

The study by [23] used a multi-modal approach, combining audio, video, and skeletal joints. The audio segmentation used the Hidden Markov Model (HMM) to determine the “silence” and “event” of motion. The segmentation based on skeletal joints uses the y-coordinate of the hand joint location. The first step is to identify the start and end frames of gestures of either the left or right hand, and the second step is to identify the same by using both hands. For the RGB and video modalities, SVM is used as the gesture classifier. The audio classifier and the SVM classifier are then fused with a fusion algorithm. This study, which used 275 test samples with over 2,000 unlabeled gestures, resulted in an average edit distance of 0.2074.

The study by [24] used surface electromyography (EMG) signals captured during movement instead of joint locations, recorded with 12 Trigno Wireless electrodes. The signal is divided into several segments, the spectrogram of each segment is calculated, and the result is normalized. PCA is then applied to reduce the dimensionality of the data while maintaining important information. The last step is to apply SVM to classify the data. Compared with the Root Mean Square (RMS) method, the method of this study results in 9.75% higher accuracy and a 12% lower error rate.

Lower-limb motion classification was done by using piezoelectret sensors that apply forcemyography (FMG), which reads the force distribution generated by muscle contractions [25]. The study compared kNN, Linear Discriminant Analysis (LDA), and Artificial Neural Network (ANN) to classify 4 lower-limb motions, namely leg raising, leg dropping, knee extension, and knee flexion. The highest accuracy, 92.90%, is obtained by kNN.

The study by [26] is more specific, classifying motions into karate stances, movements, and forms. The captured data come from a 3-axis accelerometer, gyroscope, and magnetometer. The classification is done by averaging the dataset with the Dynamic Time Warping (DTW) Barycenter Averaging (DBA) algorithm, an averaging method that iteratively refines an initially selected sequence to minimize its squared DTW distance [27] to the averaged sequences. In this study, movement templates were generated from two Karate masters of different styles and then compared with each other. This results in a recognition rate of 94.20%.

In [28], the authors did a study on human motion recognition by using VR. A combination of LDA, Genetic Algorithm (GA), and SVM is proposed to classify human motion. After collecting motion data, LDA is used to extract features, and then GA is used to search for optimal parameters. After optimal parameters are found, SVM is then used for classification. There are 10 motions to be classified and several averaged results are obtained: Precision of 95.65%, Accuracy of 97.05%, Specificity of 92.78%, and Sensitivity (Recall) of 94.01%.

It can be inferred from the related works that human motion recognition and classification have been studied extensively for years. SVM seems to be favored among studies of motion classification. When several studies compared algorithms for movement classification, kNN seemed to provide the highest accuracy. This paper applies several algorithms mentioned in the related works and optimizes their parameters to improve the results.

3. Proposed Method

This study utilizes the features from Oculus Quest VR gear, along with Unreal Engine 4 (UE4), which helps in extracting features of movements. RapidMiner Studio is used to pre-process the data, apply algorithms, and analyze the results.

3.1. Method of extraction

To capture the hand movements, a simple game based on UE4 was developed. When the game starts, the user can press a button on the Oculus controllers to start capturing movement data. The recorded movements are basic Wing Chun hand movements: Straight Punch, Tan Sau, Pak Sau, Gan Sau, and Bong Sau. Each recording runs from the beginning stance to the ending stance of a movement. The same movement is performed 10 times within around 40 seconds, with an interval of around 1 to 2 seconds between repetitions.

3.2. Extracted Data

The movement data is generated in the form of CSV files, one for each movement and each hand, resulting in 10 files. The extracted features are time, location, rotation, angular acceleration, linear acceleration, angular velocity, and linear velocity. Except for time, all the features are extracted in the form of vectors and then split into x, y, and z components, resulting in a total of 19 features (1 time feature plus 6 vectors with 3 components each). RapidMiner Studio is used to process the data and assign labels to the data. The data is then processed again by merging the files of all hands and movements into 1 file. The dataset consists of 8,725 rows with a total of 10 labels. For the left hand, the labels are Punch, Tan, Pak, Gan, and Bong. For the right hand, the labels are R_Punch, R_Tan, R_Pak, R_Gan, and R_Bong.
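
As an illustration of the feature layout described above, the following minimal sketch enumerates the 19 columns and 10 labels. The column names follow the feature names reported in Section 4 (for example `loc_x`, `rot_y`, `ang_vel_x`); the `lin_acc_*` names do not appear in the paper and are assumed here by analogy.

```python
# Hypothetical column layout for one captured row. Names follow the feature
# names reported in the paper; lin_acc_* is an assumed name by analogy.
VECTOR_FEATURES = ["loc", "rot", "ang_acc", "lin_acc", "ang_vel", "lin_vel"]
AXES = ["x", "y", "z"]


def feature_columns():
    """Return 'time' plus one column per vector feature and axis."""
    columns = ["time"]
    for name in VECTOR_FEATURES:
        for axis in AXES:
            columns.append(f"{name}_{axis}")
    return columns


# Labels as listed in the text: left hand unprefixed, right hand with R_.
MOVEMENTS = ["Punch", "Tan", "Pak", "Gan", "Bong"]
LABELS = MOVEMENTS + [f"R_{movement}" for movement in MOVEMENTS]

columns = feature_columns()
print(len(columns), len(LABELS))  # 19 10
```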

3.3. Method of optimizing algorithm

Several algorithms are applied to the dataset using RapidMiner Studio to check their accuracy. Parameter optimization is then used to make the algorithms perform better. The selected algorithms for comparison are SVM, Decision Tree, and kNN.

Figure 1 shows the flow of the algorithm optimization method. First, an algorithm is applied to the dataset with split validation to check the initial performance. The dataset is first normalized and then split into a training set (80%) and a testing set (20%) by using the Split Data module. An algorithm is then applied to the dataset, and its performance is measured to obtain the accuracy. Split validation thus yields the initial performance of each algorithm.
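
The normalize-then-split step can be sketched outside RapidMiner as follows. This is only an illustration under stated assumptions: the paper does not say which normalization scheme was used, so z-score scaling is assumed, and the function names `z_normalize` and `split_data` as well as the synthetic rows are hypothetical.

```python
import random
import statistics


def z_normalize(rows):
    """Scale every column to zero mean and unit variance (assumed scheme)."""
    columns = list(zip(*rows))
    means = [statistics.fmean(column) for column in columns]
    # pstdev is 0 for a constant column; fall back to 1 to avoid dividing by 0.
    stds = [statistics.pstdev(column) or 1.0 for column in columns]
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in rows]


def split_data(rows, labels, train_ratio=0.8, seed=0):
    """Shuffle once, then cut into training and testing partitions."""
    order = list(range(len(rows)))
    random.Random(seed).shuffle(order)
    cut = int(len(order) * train_ratio)
    train = [(rows[i], labels[i]) for i in order[:cut]]
    test = [(rows[i], labels[i]) for i in order[cut:]]
    return train, test


# Tiny synthetic stand-in for the captured rows (not the paper's data).
rows = [[float(i), float(i % 3)] for i in range(10)]
labels = ["Punch" if i < 5 else "Tan" for i in range(10)]
train, test = split_data(z_normalize(rows), labels)
print(len(train), len(test))  # 8 2
```

As in the text, normalization is applied to the whole dataset before splitting.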

Then, parameter optimization is performed to get the best possible value of the parameters in the algorithm process. The algorithm is applied to the normalized dataset with 10-folds cross-validation, and then put into the Optimize Parameter module. This module executes the cross-validation using all the selected combinations of parameters that are available in the algorithm. This process results in the best accuracy of all selected combinations of parameters.
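
A minimal sketch of this kind of search is shown below, using a small kNN classifier so that the example stays self-contained. It tries every combination of `k` and distance measure and scores each one with k-fold cross-validation. The parameter grid, the helper names, and the synthetic data are assumptions for illustration; they are not the grid or data used in this study.

```python
from collections import Counter
from itertools import product


def manhattan(a, b):
    return sum(abs(x - y) for x, y in zip(a, b))


def euclidean(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def knn_predict(train, point, k, distance):
    """Majority vote among the k training rows closest to `point`."""
    nearest = sorted(train, key=lambda item: distance(item[0], point))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def cross_val_accuracy(data, k, distance, folds=10):
    """Mean accuracy over `folds` folds; fold i holds out rows i, i+folds, ..."""
    scores = []
    for i in range(folds):
        test = data[i::folds]
        train = [row for j, row in enumerate(data) if j % folds != i]
        hits = sum(knn_predict(train, x, k, distance) == y for x, y in test)
        scores.append(hits / len(test))
    return sum(scores) / folds


def grid_search(data, ks, distances, folds=10):
    """Try every (k, distance) combination and keep the best mean accuracy."""
    best = None
    for k, (name, fn) in product(ks, distances.items()):
        score = cross_val_accuracy(data, k, fn, folds)
        if best is None or score > best[0]:
            best = (score, k, name)
    return best


# Synthetic, well-separated two-class data (not the paper's dataset).
data = [((i * 0.1, 0.0), "Tan") for i in range(20)]
data += [((5 + i * 0.1, 1.0), "Pak") for i in range(20)]
data = data[::2] + data[1::2]  # reorder so every fold holds both classes
score, k, metric = grid_search(
    data, ks=[1, 3, 5],
    distances={"manhattan": manhattan, "euclidean": euclidean})
print(score, k, metric)
```

Because the toy classes are trivially separable, every combination reaches the same accuracy and the first one tried is kept; on real data the combinations would differ.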

Figure 1: Flow of algorithm optimization method

After the optimized parameter values are found, feature selection is optimized for the algorithm. The process is identical to the one used with the Optimize Parameter module, but this time the algorithm is put into the Optimize Selection module. This module executes the cross-validation of the algorithm and weights the features of the dataset, keeping those that further improve the accuracy. This process can be repeated until no more features are removed. The result is a model with optimized parameters and selected features. This model is then applied to the dataset with the split validation process.
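
The idea of removing features until no further removal helps can be sketched as a greedy backward elimination. The paper does not state which search direction was configured, so backward elimination is an assumption here, and `toy_score` is a hypothetical stand-in for the cross-validated accuracy that would be used in practice.

```python
def backward_elimination(features, score):
    """Greedily drop one feature at a time while the score does not decrease."""
    selected = list(features)
    best = score(selected)
    improved = True
    while improved and len(selected) > 1:
        improved = False
        for candidate in list(selected):
            trial = [f for f in selected if f != candidate]
            trial_score = score(trial)
            if trial_score >= best:
                selected, best, improved = trial, trial_score, True
                break  # restart the scan from the reduced feature set
    return selected, best


# Hypothetical scoring function: rewards two "useful" features and
# penalizes every other feature slightly. Real use would plug in
# cross-validated accuracy of the classifier on the chosen columns.
USEFUL = {"loc_x", "rot_x"}


def toy_score(selected):
    hits = len(USEFUL & set(selected))
    noise = len(set(selected) - USEFUL)
    return hits - 0.1 * noise


selected, best = backward_elimination(
    ["time", "loc_x", "rot_x", "lin_acc_y"], toy_score)
print(selected, best)  # ['loc_x', 'rot_x'] 2.0
```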

4. Result and Analysis

The performance indicators were based on the study by [28], in which the 4 most used indicators for action classifications are used: accuracy, precision, recall (sensitivity), and specificity.
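
For reference, the four indicators can be computed per movement from one-vs-rest counts of true and false positives and negatives. The sketch below uses the standard definitions, which the paper does not spell out, and a small made-up set of predictions. It also shows how the average of per-class accuracies can exceed the overall accuracy, since each per-class accuracy counts correct rejections of the other classes as well.

```python
def per_class_indicators(actual, predicted, label):
    """One-vs-rest accuracy, precision, recall and specificity for `label`."""
    pairs = list(zip(actual, predicted))
    tp = sum(a == label and p == label for a, p in pairs)
    fp = sum(a != label and p == label for a, p in pairs)
    fn = sum(a == label and p != label for a, p in pairs)
    tn = sum(a != label and p != label for a, p in pairs)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "specificity": tn / (tn + fp) if tn + fp else 0.0,
    }


def overall_accuracy(actual, predicted):
    """Fraction of rows whose predicted label equals the actual label."""
    return sum(a == p for a, p in zip(actual, predicted)) / len(actual)


# Made-up predictions for illustration only (one Pak row mistaken for Bong).
actual = ["Tan", "Tan", "Pak", "Pak", "Bong", "Bong"]
predicted = ["Tan", "Tan", "Pak", "Bong", "Bong", "Bong"]

bong = per_class_indicators(actual, predicted, "Bong")
per_class = [per_class_indicators(actual, predicted, label)["accuracy"]
             for label in ("Tan", "Pak", "Bong")]
averaged = sum(per_class) / len(per_class)
overall = overall_accuracy(actual, predicted)
print(bong)
print(averaged > overall)  # True
```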

4.1. Support Vector Machine

The parameters used to obtain the final result for the SVM algorithm are SVM Type: nu-SVC [29] with a nu value of 0.1, and kernel type: linear. The nu value is an upper bound on the fraction of margin errors and a lower bound on the fraction of support vectors, and the linear kernel is suitable when the dataset has a large number of features [30]. The relevant features obtained from the Optimize Selection process are time, loc_x, loc_y, loc_z, rot_x, rot_y, rot_z, ang_acc_x, ang_vel_y, lin_vel_x, and lin_vel_z. The result of the optimized SVM model for each movement is shown in Table 1. The movements R_Tan and Gan obtained the highest result (100%) in all 4 indicators. R_Gan and Tan reached the highest result (100%) in recall and specificity. The lowest accuracy, precision, recall, and specificity are obtained by Punch (99.02%), Pak (95.93%), R_Bong (95.06%), and Punch (99.41%), respectively. The overall accuracy obtained from the optimized SVM model is 98.11%.

Table 1: Result of the optimized SVM model for each movement

| Movement (SVM) | Accuracy | Precision | Recall | Specificity |
| --- | --- | --- | --- | --- |
| R_Bong | 99.42% | 98.72% | 95.06% | 99.49% |
| R_Gan | 99.88% | 98.73% | 100.00% | 100.00% |
| R_Pak | 99.48% | 96.82% | 97.44% | 99.75% |
| R_Punch | 99.71% | 99.46% | 97.88% | 99.74% |
| R_Tan | 100.00% | 100.00% | 100.00% | 100.00% |
| Pak | 99.36% | 95.93% | 97.63% | 99.74% |
| Bong | 99.54% | 97.59% | 97.59% | 99.74% |
| Gan | 100.00% | 100.00% | 100.00% | 100.00% |
| Tan | 99.77% | 98.05% | 100.00% | 100.00% |
| Punch | 99.02% | 95.94% | 95.45% | 99.41% |
| Average | 99.62% | 98.12% | 98.11% | 99.79% |

Table 2: Result of the optimized Decision Tree model for each movement

| Movement (DT) | Accuracy | Precision | Recall | Specificity |
| --- | --- | --- | --- | --- |
| R_Bong | 98.59% | 92.59% | 92.59% | 99.22% |
| R_Gan | 99.17% | 94.94% | 96.15% | 99.61% |
| R_Pak | 98.77% | 94.70% | 91.67% | 99.16% |
| R_Punch | 99.47% | 98.91% | 96.30% | 99.54% |
| R_Tan | 100.00% | 100.00% | 100.00% | 100.00% |
| Pak | 98.71% | 91.53% | 95.86% | 99.54% |
| Bong | 99.12% | 97.48% | 93.37% | 99.28% |
| Gan | 99.70% | 100.00% | 96.64% | 99.68% |
| Tan | 99.94% | 100.00% | 99.50% | 99.93% |
| Punch | 98.42% | 90.52% | 96.46% | 99.53% |
| Average | 99.19% | 96.07% | 95.85% | 99.55% |

4.2. Decision Tree

The parameters used to obtain the final result for the Decision Tree algorithm are Criterion: information gain, Confidence: 0.140, and Minimal Gain: 0.019. Information gain is useful to minimize randomness in the dataset [31]. The relevant features obtained from the Optimize Selection process are loc_x, loc_y, loc_z, rot_x, rot_y, rot_z, lin_vel_y, and lin_vel_z. The result of the optimized Decision Tree model for each movement is shown in Table 2. The movement R_Tan obtained the highest result (100%) in all 4 indicators, and Gan and Tan obtained the highest precision (100%) as well. The lowest accuracy, precision, recall, and specificity are obtained by Punch (98.42%), Punch (90.52%), R_Pak (91.67%), and R_Pak (99.16%), respectively. The overall accuracy obtained from the optimized Decision Tree model is 96.06%.
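
To make the information gain criterion concrete, the sketch below computes the entropy reduction obtained by splitting one numeric feature at a threshold. A tree learner would compare such gains across candidate splits and, with a minimal gain setting, would only split when the gain is large enough. The feature values and labels are made up for illustration, and the function names are hypothetical.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total)
                for n in Counter(labels).values())


def information_gain(values, labels, threshold):
    """Entropy reduction from splitting a numeric feature at `threshold`."""
    left = [y for v, y in zip(values, labels) if v <= threshold]
    right = [y for v, y in zip(values, labels) if v > threshold]
    if not left or not right:
        return 0.0  # a split that leaves one side empty gains nothing
    weighted = (len(left) * entropy(left)
                + len(right) * entropy(right)) / len(labels)
    return entropy(labels) - weighted


# Made-up values of a single feature for two movements.
values = [0.1, 0.2, 0.8, 0.9]
labels = ["Tan", "Tan", "Pak", "Pak"]

clean_split = information_gain(values, labels, 0.5)
poor_split = information_gain(values, labels, 0.15)
print(clean_split, poor_split)
```

The split at 0.5 separates the two classes completely and therefore recovers the full 1 bit of entropy, while the split at 0.15 leaves a mixed partition and gains less.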

Table 3: Result of the optimized kNN model for each movement

| Movement (kNN) | Accuracy | Precision | Recall | Specificity |
| --- | --- | --- | --- | --- |
| R_Bong | 99.71% | 98.16% | 98.77% | 99.87% |
| R_Gan | 100.00% | 100.00% | 100.00% | 100.00% |
| R_Pak | 100.00% | 100.00% | 100.00% | 100.00% |
| R_Punch | 100.00% | 100.00% | 100.00% | 100.00% |
| R_Tan | 100.00% | 100.00% | 100.00% | 100.00% |
| Pak | 100.00% | 100.00% | 100.00% | 100.00% |
| Bong | 99.71% | 98.79% | 98.19% | 99.81% |
| Gan | 100.00% | 100.00% | 100.00% | 100.00% |
| Tan | 100.00% | 100.00% | 100.00% | 100.00% |
| Punch | 100.00% | 100.00% | 100.00% | 100.00% |
| Average | 99.94% | 99.70% | 99.70% | 99.97% |

4.3. k-Nearest Neighbor

The parameters used to obtain the result for the kNN algorithm are Measure Type: Numerical Measures, Numerical Measure: Manhattan Distance, and k = 5. Manhattan distance is preferable when there is high dimensionality in the dataset [32]. The relevant features obtained from the Optimize Selection process are time, loc_x, rot_x, rot_y, rot_z, and ang_vel_x. The result of the optimized kNN model for each movement is shown in Table 3. All movements except for R_Bong and Bong obtained the highest result (100%) in all 4 indicators. The lowest accuracy is shared by R_Bong and Bong (99.71%), while the lowest precision, recall, and specificity are obtained by R_Bong (98.16%), Bong (98.19%), and Bong (99.81%), respectively. The overall accuracy obtained from the optimized kNN model is 99.71%.

4.4. Summary of the result

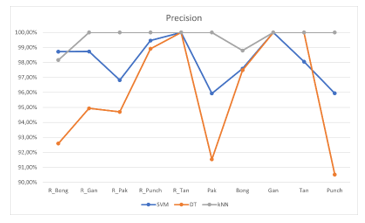

By using RapidMiner Studio, 10 basic Wing Chun hand movements were classified. The results of the classification by the 3 optimized algorithms are shown in Tables 1-3. Figure 2 shows the comparison of the averaged results between the optimized SVM, Decision Tree, and kNN algorithms.

The averaged results of the optimized SVM and Decision Tree show that accuracy and specificity are higher than precision and recall, and the precision and recall of the optimized Decision Tree are lower than those of the optimized SVM. Of the 3 algorithms, kNN obtained the highest results in all 4 indicators, each exceeding 99%.

Figure 2: Comparison of average results between the optimized algorithms

Figure 3: Accuracy of all movements from 3 algorithms

Figure 4: Precision of all movements from 3 algorithms

The sets of features selected for the optimized SVM, Decision Tree, and kNN differ. The time feature is not selected for the Decision Tree. The features selected from angular acceleration, linear acceleration, angular velocity, and linear velocity vary across the 3 algorithms, although lin_vel_z is present in both SVM and Decision Tree. The rotation features (rot_x, rot_y, and rot_z) are present in all 3 algorithms. The location features (loc_x, loc_y, and loc_z) are present in SVM and Decision Tree, while only loc_x is present in kNN. This result suggests that Wing Chun hand movements depend more on positioning than on velocity and acceleration. This is consistent with Master Ip Chun’s statement that if the hand position and movement are not correct, the technique will not be useful and may be dangerous [33].

Figure 5: Recall of all movements from 3 algorithms

Figure 6: Specificity of all movements from 3 algorithms

In Figures 3-6, kNN has the highest results in all 4 indicators, while Decision Tree has the lowest. Although the curves of the algorithms intersect at some movements, the difference between them is clear. It can also be seen that R_Tan reached 100% in every indicator for all applied algorithms. For every indicator, the shape of the graph for kNN is the same: all movements reach 100% except for R_Bong and Bong. This is understandable because even though Bong Sau is a basic hand movement, its shape and movement are more complex than those of the other basic movements.

Figure 7: Comparison between the overall accuracy of default and optimized algorithms

The initial accuracy for SVM, Decision Tree, and kNN is 52.57%, 55.14%, and 98.06%, respectively. After the optimization process, the split validation process is done again to obtain the final accuracy. The final accuracy for SVM, Decision Tree, and kNN is 98.11%, 96.06%, and 99.71%, respectively. From the comparison seen in Figure 7, it can be seen that the optimization improves all 3 algorithms, especially SVM and Decision Tree. Both default and optimized results show that kNN obtains the highest accuracy.

To check whether the results show a statistically significant difference (p < 0.05 and p < 0.01), the Wilcoxon signed-rank test is performed on all 4 indicators (accuracy, precision, recall, and specificity) of both the default and optimized algorithms. The test shows that all 4 indicators in SVM have a statistically significant difference. Both Decision Tree and kNN have a statistically significant difference in all indicators except for precision. Table 4 shows the result of the test. In Tables 4 and 5, * marks p < 0.05 and ** marks p < 0.01.

The Wilcoxon signed-rank test is also performed on the pairwise comparison of the algorithms, in both their default and optimized versions. The comparison of the default algorithms shows that SVM and Decision Tree do not have a statistically significant difference. The comparison of the optimized algorithms shows that all pairs of algorithms have a statistically significant difference. Table 5 shows the result of the test.
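
As a rough illustration of how such a paired test works, the sketch below ranks the absolute differences between paired scores, sums the ranks of the positive and negative differences, and derives an exact two-sided p-value by enumerating all sign assignments. The paper does not state which variant or software produced its p-values, and statistical packages often report a normal approximation instead, so values from this sketch need not match Tables 4 and 5. The paired scores are synthetic and the function names are hypothetical.

```python
from itertools import product


def signed_ranks(before, after):
    """Average ranks of |difference|, dropping zero differences."""
    diffs = [a - b for b, a in zip(before, after) if a != b]
    ordered = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    start = 0
    while start < len(ordered):
        end = start
        while (end + 1 < len(ordered)
               and abs(diffs[ordered[end + 1]]) == abs(diffs[ordered[start]])):
            end += 1
        average = (start + end) / 2 + 1  # ties share the mean of their ranks
        for position in range(start, end + 1):
            ranks[ordered[position]] = average
        start = end + 1
    return diffs, ranks


def wilcoxon_exact(before, after):
    """Return (W, exact two-sided p) by enumerating all sign assignments."""
    diffs, ranks = signed_ranks(before, after)
    total = sum(ranks)
    w_plus = sum(r for d, r in zip(diffs, ranks) if d > 0)
    w = min(w_plus, total - w_plus)
    count = 0
    for signs in product((0, 1), repeat=len(ranks)):
        s = sum(r for keep, r in zip(signs, ranks) if keep)
        if min(s, total - s) <= w:
            count += 1
    return w, count / 2 ** len(ranks)


# Synthetic paired scores (not the paper's results): every pair improves.
default_scores = [0.50, 0.55, 0.60, 0.52, 0.58, 0.49]
optimized_scores = [0.98, 0.97, 0.99, 0.96, 0.95, 0.99]
w, p = wilcoxon_exact(default_scores, optimized_scores)
print(w, p)  # 0.0 0.03125
```

With 6 pairs that all change in the same direction, only 2 of the 64 sign assignments are as extreme as the observed one, which gives p = 2/64.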

Table 4: Wilcoxon signed-rank test result of 4 indicators of both default and optimized algorithms

| Indicator | SVM | DT | kNN |
| --- | --- | --- | --- |
| Accuracy | 0.005** | 0.008** | 0.043* |
| Precision | 0.005** | 0.093 | 0.091 |
| Recall | 0.008** | 0.021* | 0.043* |
| Specificity | 0.008** | 0.015* | 0.028* |

Table 5: Wilcoxon signed-rank test result of the comparison of each algorithm in both default and optimized

| Algorithms | SVM-DT | SVM-kNN | DT-kNN |
| --- | --- | --- | --- |
| Default | 0.721 | 0.005** | 0.008** |
| Optimized | 0.011* | 0.012* | 0.008** |

5. Conclusion

The purpose of this study is to find the best possible method of classifying Wing Chun basic hand movements to create a Wing Chun training simulation system in VR. The results show that movement data features such as location, rotation, and linear velocity are significant enough to classify Wing Chun basic hand movements. Right hand Tan Sau has the highest value in all 4 indicators. Meanwhile, based on the result of the optimized kNN algorithm, Bong Sau of both hands is the only movement that does not reach 100% performance, which is understandable because Bong Sau is a more complex basic movement. Before the optimization process, kNN was the only one of the 3 algorithms with a high-performance result. However, after the optimization of parameters and selection of features, the performance of SVM and Decision Tree improved significantly. The comparison of the results shows that the optimized kNN algorithm obtained the highest results.

6. Discussion

Five basic movements were captured for each of the left and right hands, making 10 movements in total. The movements are captured with the Oculus Quest VR gear, which comes with a pair of handheld Oculus controllers. A simple game is developed with Unreal Engine 4 to capture motion data that consists of several features of movements, such as location and rotation. RapidMiner Studio is used to pre-process the dataset, apply algorithms, and optimize the learning model. This study compares the SVM, Decision Tree, and kNN algorithms since all 3 were commonly used in earlier studies of motion classification and recognition. Performance indicators such as accuracy, precision, recall, and specificity are used to compare the overall results of the 3 algorithms.

While this study compares SVM, Decision Tree, and kNN, it does not include combinations of algorithms, for example combining SVM with kNN. RapidMiner Studio has various modules that help in processing data with algorithms. The AdaBoost module has been applied to the optimized kNN, but the result shows no improvement. Modules like Ensemble Stacking have yet to be explored further: an attempt was made to stack all 3 algorithms, but the result was not as good as the result obtained by each optimized algorithm. Machine learning approaches such as Deep Learning, Neural Networks, and Spatio-temporal algorithms can also be applied and compared in future studies.

The simple game developed to capture the movements also has a limitation: the user can only stand still. The game can be developed further so that the motion capture process allows the user to move around while performing hand movements. While this study shows that the algorithms can accurately classify basic hand movements, there are more basic hand movements that are very similar to each other. These movements can only be distinguished by looking at the area of contact of the hand. This can also be a field of further study for better movement classification. Classification of sequences could be explored as well, since Wing Chun also has more complex sets of movements that require more than one type of movement.

Conflict of Interest

The authors declare no conflict of interest.

Acknowledgment

The authors would like to thank Abba Suganda Girsang S.T., M.Cs., Ph.D for the helpful suggestions and Sifu Julius Khang for his teaching of Wing Chun.

References

- T.H. Chow, B.Y. Lee, A.B.F. Ang, V.Y.K. Cheung, M.M.C. Ho, S. Takemura, The effect of Chinese martial arts Tai Chi Chuan on prevention of osteoporosis: A systematic review, Journal of Orthopaedic Translation, 12, 74–84, 2018, doi:10.1016/j.jot.2017.06.001.

- J. Kong, G. Wilson, J. Park, K. Pereira, C. Walpole, A. Yeung, Treating depression with tai Chi: State of the art and future perspectives, Frontiers in Psychiatry, 10(APR), 2019, doi:10.3389/fpsyt.2019.00237.

- A. Harwood, M. Lavidor, Y. Rassovsky, Reducing aggression with martial arts: A meta-analysis of child and youth studies, Aggression and Violent Behavior, 2017, doi:10.1016/j.avb.2017.03.001.

- J. Steuer, “Defining Virtual Reality: Dimensions Determining Telepresence,” Journal of Communication, 1992, doi:10.1111/j.1460-2466.1992.tb00812.x.

- M. Fernandez, “Augmented-Virtual Reality: How to improve education systems,” Higher Learning Research Communications, 2017, doi:10.18870/hlrc.v7i1.373.

- G. Riva, R.M. Baños, C. Botella, F. Mantovani, A. Gaggioli, Transforming experience: The potential of augmented reality and virtual reality for enhancing personal and clinical change, Frontiers in Psychiatry, 2016, doi:10.3389/fpsyt.2016.00164.

- M.E. Portman, A. Natapov, D. Fisher-Gewirtzman, “To go where no man has gone before: Virtual reality in architecture, landscape architecture and environmental planning,” Computers, Environment and Urban Systems, 2015, doi:10.1016/j.compenvurbsys.2015.05.001.

- N.Y. Lee, D.K. Lee, H.S. Song, “Effect of virtual reality dance exercise on the balance, activities of daily living, And depressive disorder status of Parkinson’s disease patients,” Journal of Physical Therapy Science, 2015, doi:10.1589/jpts.27.145.

- K.K. Bhagat, W.K. Liou, C.Y. Chang, “A cost-effective interactive 3D virtual reality system applied to military live firing training,” Virtual Reality, 2016, doi:10.1007/s10055-016-0284-x.

- K.E. Laver, B. Lange, S. George, J.E. Deutsch, G. Saposnik, M. Crotty, Virtual reality for stroke rehabilitation, Cochrane Database of Systematic Reviews, 2017, doi:10.1002/14651858.CD008349.pub4.

- D. Freeman, S. Reeve, A. Robinson, A. Ehlers, D. Clark, B. Spanlang, M. Slater, Virtual reality in the assessment, understanding, and treatment of mental health disorders, Psychological Medicine, 2017, doi:10.1017/S003329171700040X.

- J.A. Stevens, J.P. Kincaid, “The Relationship between Presence and Performance in Virtual Simulation Training,” Open Journal of Modelling and Simulation, 2015, doi:10.4236/ojmsi.2015.32005.

- M. Slater, M. V. Sanchez-Vives, Enhancing our lives with immersive virtual reality, Frontiers Robotics AI, 2016, doi:10.3389/frobt.2016.00074.

- V. Kotu, B. Deshpande, Predictive Analytics and Data Mining: Concepts and Practice with RapidMiner, 2014, doi:10.1016/C2014-0-00329-2.

- M.R.K. Hofmann, Data Mining Use Cases and Business Analytics Applications, 2016.

- J. Barbič, A. Safonova, J.Y. Pan, C. Faloutsos, J.K. Hodgins, N.S. Pollard, “Segmenting motion capture data into distinct behaviors,” in Proceedings – Graphics Interface, 2004.

- Z. Xing, J. Pei, E. Keogh, “A brief survey on sequence classification,” ACM SIGKDD Explorations Newsletter, 2010, doi:10.1145/1882471.1882478.

- C. Li, L. Khan, B. Prabhakaran, “Real-time classification of variable length multi-attribute motions,” Knowledge and Information Systems, 2006, doi:10.1007/s10115-005-0223-8.

- W. Ugulino, D. Cardador, K. Vega, E. Velloso, R. Milidiú, H. Fuks, “Wearable computing: Accelerometers’ data classification of body postures and movements,” in Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 2012, doi:10.1007/978-3-642-34459-6_6.

- M. Hall, “Correlation-Based Feature Selection for Machine Learning,” Department of Computer Science, 19, 2000.

- Y. Freund, R.E. Schapire, “Experiments with a New Boosting Algorithm,” Proceedings of the 13th International Conference on Machine Learning, 1996, doi:10.1.1.133.1040.

- W. Wu, S. Dasgupta, E.E. Ramirez, C. Peterson, G.J. Norman, “Classification accuracies of physical activities using smartphone motion sensors,” Journal of Medical Internet Research, 2012, doi:10.2196/jmir.2208.

- K. Nandakumar, K.W. Wan, S.M.A. Chan, W.Z.T. Ng, J.G. Wang, W.Y. Yau, “A multi-modal gesture recognition system using audio, video, and skeletal joint data,” in ICMI 2013 – Proceedings of the 2013 ACM International Conference on Multimodal Interaction, 2013, doi:10.1145/2522848.2532593.

- X. Zhai, B. Jelfs, R.H.M. Chan, C. Tin, “Short latency hand movement classification based on surface EMG spectrogram with PCA,” in Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBS, 2016, doi:10.1109/EMBC.2016.7590706.

- X. Li, Q. Zhuo, X. Zhang, O.W. Samuel, Z. Xia, X. Zhang, P. Fang, G. Li, “FMG-based body motion registration using piezoelectret sensors,” in Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBS, 2016, doi:10.1109/EMBC.2016.7591758.

- T. Hachaj, M. Piekarczyk, M.R. Ogiela, “Human actions analysis: Templates generation, matching and visualization applied to motion capture of highly-skilled karate athletes,” Sensors (Switzerland), 2017, doi:10.3390/s17112590.

- D. Berndt, J. Clifford, “Using dynamic time warping to find patterns in time series,” Workshop on Knowledge Discovery in Databases, 1994.

- F. Zhang, T.Y. Wu, J.S. Pan, G. Ding, Z. Li, “Human motion recognition based on SVM in VR art media interaction environment,” Human-Centric Computing and Information Sciences, 2019, doi:10.1186/s13673-019-0203-8.

- B. Schölkopf, A.J. Smola, R.C. Williamson, P.L. Bartlett, “New support vector algorithms,” Neural Computation, 2000, doi:10.1162/089976600300015565.

- C.W. Hsu, C.C. Chang, C.J. Lin, “A Practical Guide to Support Vector Classification,” BJU International, 2008.

- V. Jain, A. Phophalia, J.S. Bhatt, “Investigation of a Joint Splitting Criteria for Decision Tree Classifier Use of Information Gain and Gini Index,” in IEEE Region 10 Annual International Conference, Proceedings/TENCON, 2019, doi:10.1109/TENCON.2018.8650485.

- W. Song, H. Wang, P. Maguire, O. Nibouche, “Local Partial Least Square classifier in high dimensionality classification,” Neurocomputing, 2017, doi:10.1016/j.neucom.2016.12.053.

- I. Chun, M. Tse, Wing Chun Kung Fu: Traditional Chinese Kung Fu for Self-Defense and Health, St. Martin’s Publishing Group, 1998.
