Assistive System for Collaborative Assembly Task using Augmented Reality

Volume 9, Issue 4, Page No 110–118, 2024

Adv. Sci. Technol. Eng. Syst. J. 9(4), 110–118 (2024);

DOI: 10.25046/aj090412


Keywords: Augmented reality, STEM education, Education robot

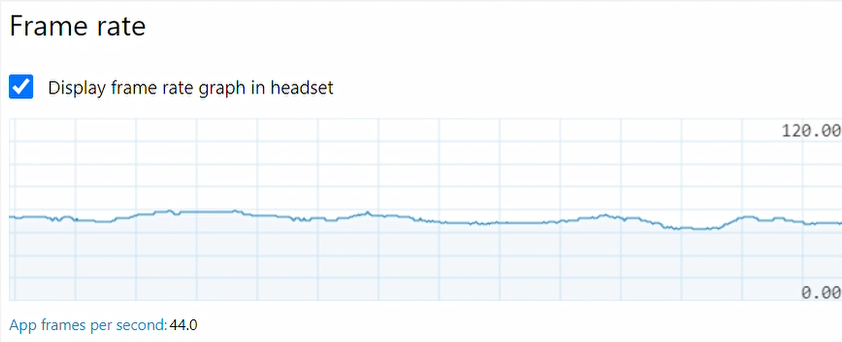

Augmented reality (AR) technology is increasingly used to develop teaching materials that aim to spark students' interest in technology (T) and engineering (E) in STEM education. In the proposed system, AR is integrated with an educational robot controlled by a KidBright microcontroller board, which was developed by the Educational Technology research team (EDT) at the National Electronics and Computer Technology Center in Thailand. The KidBright program has been implemented in over 2,200 Thai schools. To maximize the benefits of the KidBright program, the Assistive System for Collaborative Assembly Task using Augmented Reality (ASCAT-AR) was created to enable students to learn and collaborate while assembling robots. Students work in pairs to assemble a robot using the system and learn about mechanics, sensors, and 3D-printed parts. In the evaluation, students were divided into two groups: Group A assembled the robot with only the printed manual, while Group B used the ASCAT-AR system. The AR application renders smoothly at 44–60 frames per second on the Microsoft HoloLens 2. The evaluation showed that Group B students had a higher average success rate than Group A students. The results also showed that users of the ASCAT-AR system were more motivated to learn and gained more knowledge about robot technology and programming.

1. Introduction

STEM education is a method of teaching that focuses on science, technology, engineering, and mathematics. It prepares students with the 21st-century skills needed for success in the modern world [1]. Based on Bloom's Taxonomy [2], these skills are classified into three categories: cognitive skills (see Table 1), such as critical thinking and problem-solving; social and emotional skills, such as communication and collaboration; and technological skills, such as the ability to use digital tools and platforms [3].

Previous work has revealed gaps in how effectively STEM education prepares students. One significant gap is the shortage of teachers specializing in STEM fields. A case study published in the Journal of Science Education and Technology highlights the difficulty many schools, particularly those in low-income neighborhoods, face in providing STEM instruction because qualified teachers are hard to recruit [4], [5]. Another challenge is the lack of professional development opportunities for STEM teachers, as some educators lack the skills and knowledge needed to incorporate STEM teaching and learning seamlessly [6]. A further gap is the absence of standards and frameworks for developing and implementing mixed reality (MR) teaching tools in STEM education. Although interest in using MR for educational purposes is growing [7], a study published in the Journal of Science Education and Technology indicated a lack of guidelines and frameworks to help educators create and use MR resources effectively [8]. Table 2 compares AR, virtual reality (VR), and MR.

Research published in the Journal of Science Education and Technology highlights the scarcity of mixed reality (MR) integration in STEM curricula. Rather than fully integrating MR into the curriculum, some STEM instructors use it sporadically, which may reduce its effectiveness in teaching [9], [10]. Thailand has placed significant emphasis on STEM education and is committed to developing a more skilled and innovative workforce [11]. The STEM education curriculum has been introduced and studied in Thailand [12], with initiatives focusing on curriculum development, digital media production, classroom implementation, and teacher training [13]. The challenge is that students show low engagement in STEM disciplines, which can hinder the effectiveness of STEM education and the development of a skilled future workforce [14]. Recently, robotics competitions have been organized in Thailand to motivate students' interest and creativity in robotics, and schools have developed STEM curricula that allow students to engage with technology. Although there is research on the design and implementation of MR learning games for robot assembly, gaps remain in the teaching of technology and engineering subjects in STEM education. To enhance skills in robotics technology, this research project has developed an Assistive System for Collaborative Assembly Task using Augmented Reality (ASCAT-AR). The proposed system enables students to learn about the robot's components and then assemble it. It uses augmented reality (AR) technology to capture students' interest and relate their learning to future career opportunities.

Table 1: The Revised Taxonomy (2001) [4]

| Level | Description |
|---|---|
| 1. Remember | This level is about recalling information, such as facts, definitions, and concepts. |
| 2. Understand | This level is about understanding the meaning of information, such as being able to explain it in your own words or apply it to new situations. |
| 3. Apply | This level is about using information to solve problems or complete tasks. |
| 4. Analyze | This level is about breaking down information into its component parts and understanding how they relate to each other. |
| 5. Evaluate | This level is about making judgments about the value or worth of information. |
| 6. Create | This level is about putting information together in new and original ways. |

Table 2: XR Technology Comparison [9]

| Features | AR | VR | MR |
|---|---|---|---|
| Definition | Limited interaction with virtual objects | Natural interaction with virtual objects | Natural interaction with both real and virtual objects |
| Hardware | Headset or smartphone | Headset required | Headset required |
| Applications | Navigation, wayfinding, product visualization, gaming | Gaming, entertainment, education, training | Gaming, entertainment, design, manufacturing, education, training |

This research aims to develop an innovative educational tool to assist students in learning technology and engineering concepts within STEM education. AR technology is utilized within this system, and the educational robot is designed to be interfaced with Microsoft HoloLens 2 devices.

2. Proposed System

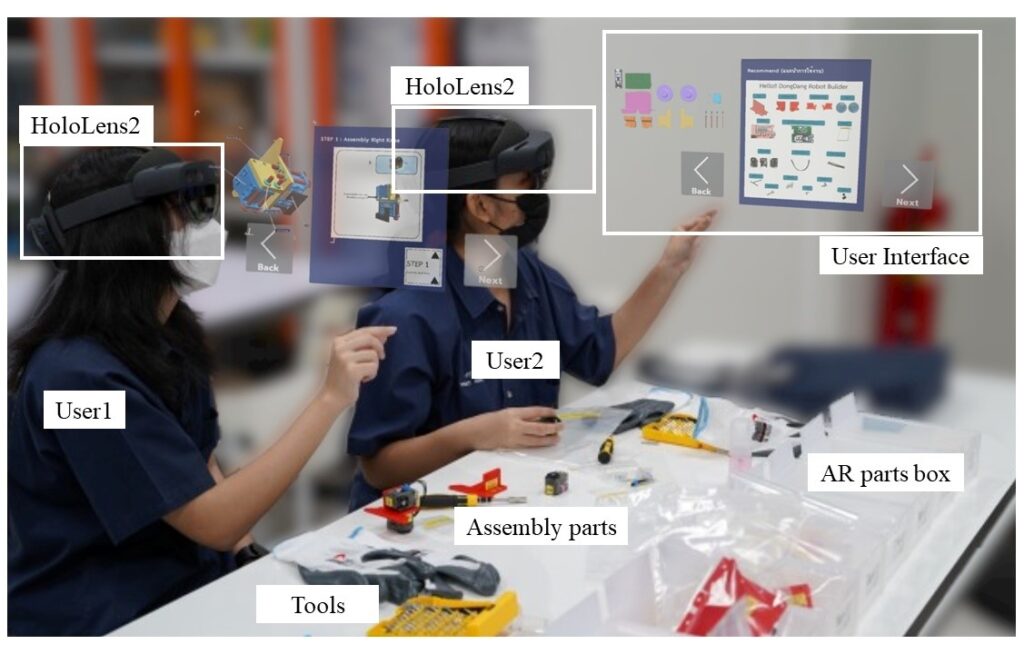

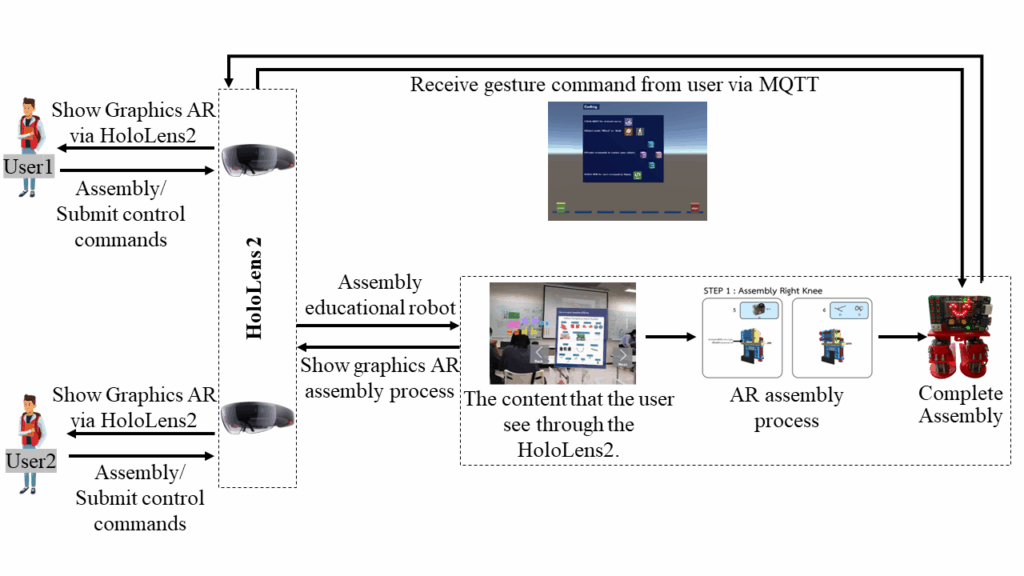

This research aims to create an innovative educational tool that motivates students to understand STEM content through robot assembly and control. Augmented reality (AR) technology is incorporated into the system to enhance the learning experience. The system overview in Figure 1 shows how users can use hand gestures to interact with a 3D model, manipulate its motion, and access information. Once the robot is assembled, the Microsoft HoloLens 2 provides a user interface for controlling it.

2.1. System Overview

The system overview depicted in Figure 1 illustrates that users can assemble an educational robot and use hand gestures to rotate, move, zoom in on, and zoom out of a 3D prototype. The display is viewed through the Microsoft HoloLens 2. Once the educational robot is fully assembled, the system shows a user interface with a simple programming window for controlling the robot.

2.2. System Configuration

The system operates through two primary processes: constructing the robot and controlling it. Figure 2 provides an overview of the steps involved. First, students assemble the components of the educational robot. They can then write basic code to control the robot using the Microsoft HoloLens 2. Communication with the robot uses the MQTT protocol. All instructions for building and controlling the robot are displayed directly on the Microsoft HoloLens 2.
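The paper states only that commands travel between the HoloLens 2 application and the KidBright board over MQTT; the broker, topic names, QoS level, and payload format are not described. As a rough illustration of what such a command looks like on the wire, the minimal Python sketch below hand-encodes an MQTT 3.1.1 PUBLISH packet at QoS 0. The topic `ascat/robot/cmd` and the payload `walk:F,F,L` are hypothetical placeholders, and a real application would use an MQTT client library rather than building packets by hand.

```python
def encode_remaining_length(n: int) -> bytes:
    """Encode the MQTT 3.1.1 Remaining Length field: 7 data bits per byte."""
    if not 0 <= n <= 268_435_455:
        raise ValueError("remaining length out of range for MQTT 3.1.1")
    out = bytearray()
    while True:
        byte = n % 128
        n //= 128
        if n > 0:
            byte |= 0x80  # continuation bit: another length byte follows
        out.append(byte)
        if n == 0:
            return bytes(out)


def encode_string(s: str) -> bytes:
    """MQTT strings are UTF-8 bytes prefixed with a 2-byte big-endian length."""
    data = s.encode("utf-8")
    return len(data).to_bytes(2, "big") + data


def build_publish_qos0(topic: str, payload: bytes) -> bytes:
    """Build a PUBLISH packet at QoS 0 (no packet identifier; DUP and RETAIN cleared)."""
    body = encode_string(topic) + payload
    fixed_header = bytes([0x30]) + encode_remaining_length(len(body))  # 0x30 = PUBLISH, flags 0
    return fixed_header + body


# Hypothetical topic and payload: the paper specifies neither.
packet = build_publish_qos0("ascat/robot/cmd", b"walk:F,F,L")
print(packet.hex(" "))
```

The Remaining Length field uses 7 bits per byte plus a continuation flag, so short command messages like this one need only a single length byte.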

2.3. Mechanical Design and Implementations

The Otto platform has been used successfully in a robotics engineering class at MIT, demonstrating its suitability for educational purposes [15]. The educational robot is based on the Otto DIY Ninja robot, an open-source robot designed to teach programming, mechanics, electronics, design, the Internet of Things, and artificial intelligence [16]. It was selected as the foundation for the project because it is well designed and affordable, which makes it suitable for Thai schools. Several modifications were made to the Otto DIY Ninja design to align it more closely with the Thai curriculum and teaching style. The resulting robot is a low-cost, user-friendly, and educationally rich tool for teaching various STEM concepts to students in Thailand.

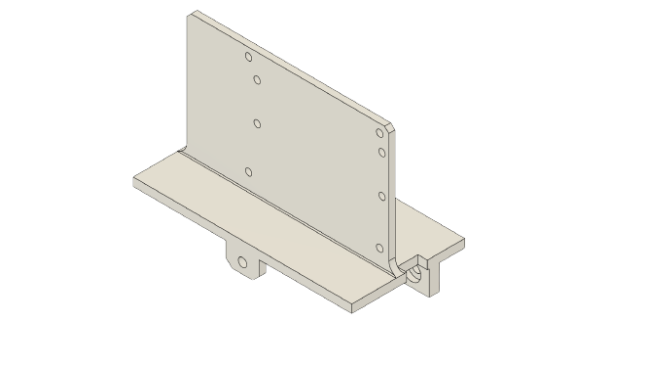

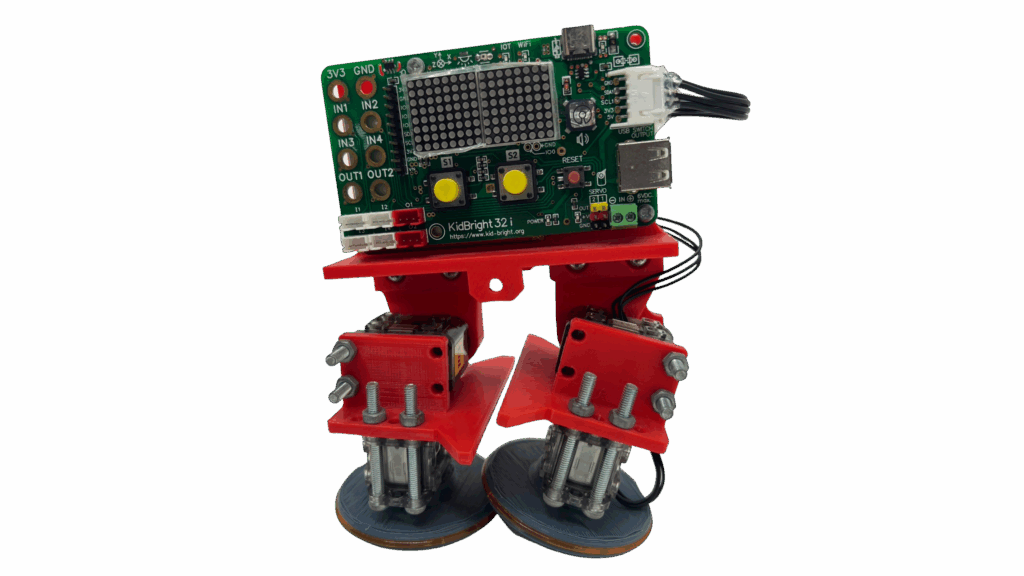

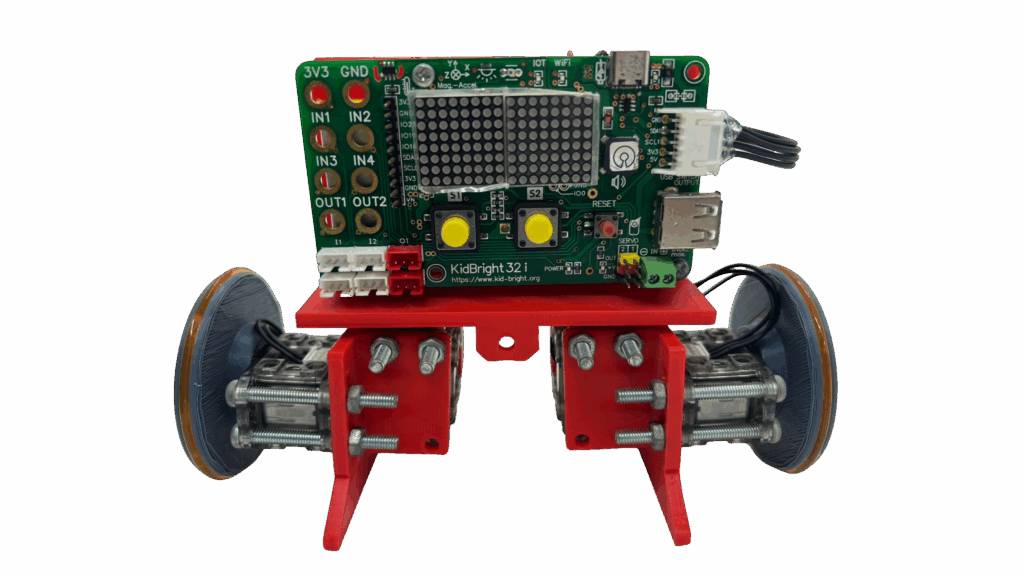

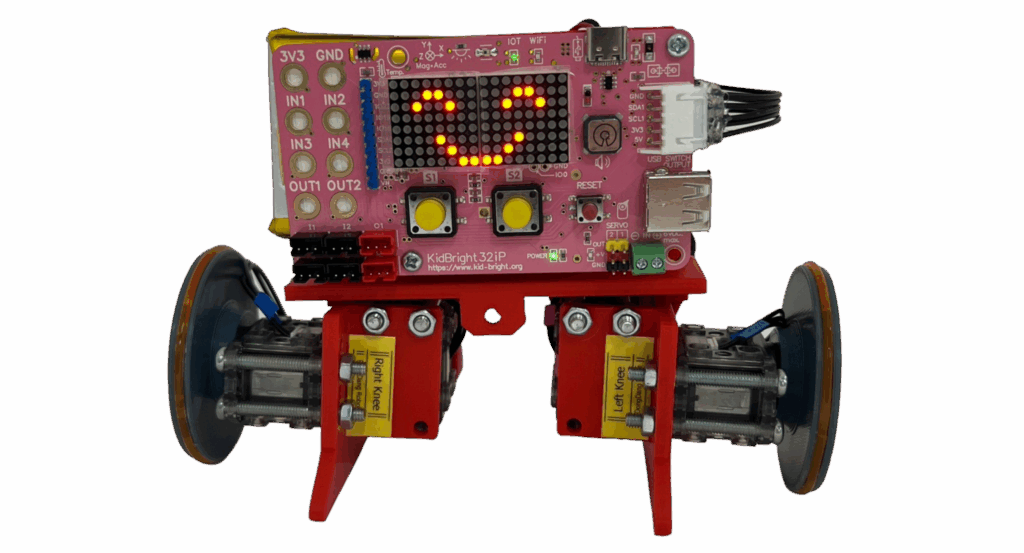

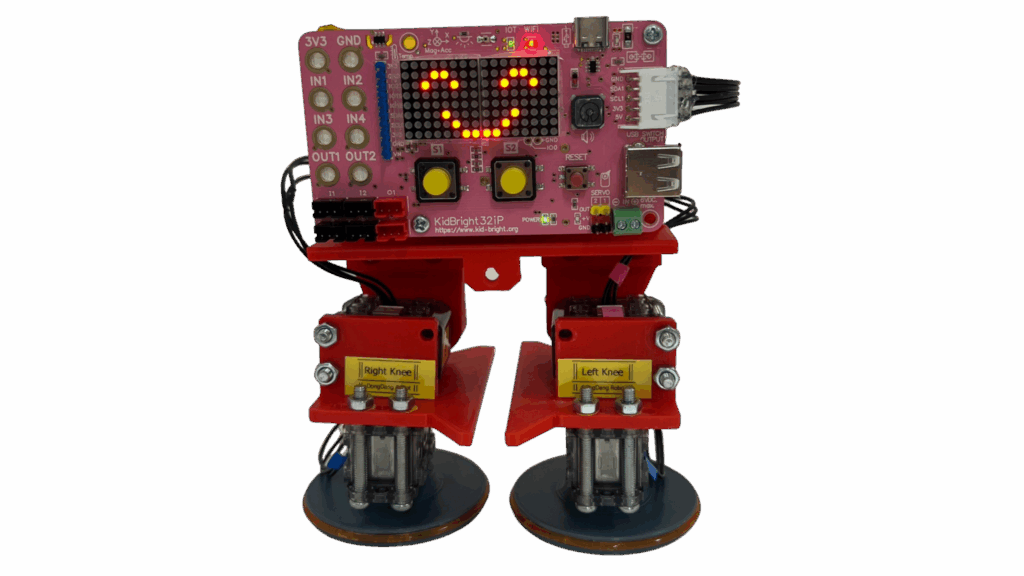

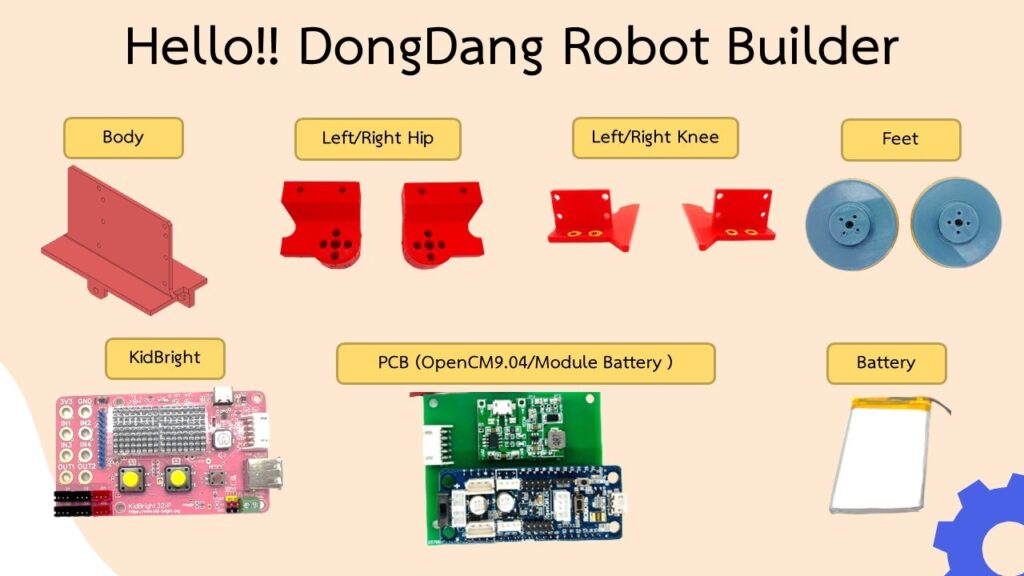

The parts of the educational robot were modeled in 3D using SolidWorks. The robot has circular feet for walking and wheels for fast movement, so it can either walk on its feet or roll on its wheels. The robot's head holds the KidBright microcontroller board, the OpenCM9.04 board that drives the DYNAMIXEL XL-320 servo motors, and a battery pack. The robot's legs can be folded, and each side supports two DYNAMIXEL XL-320 servos. Figure 3 shows the component parts of the robot that are 3D-printed, Figure 4 shows the robot's foot, and Figure 5 shows the robot's head with the control board.

The assembled robot is in walk mode by default, as shown in Figure 6. In this mode, the robot can move forward and backward and turn left and right. The robot can also be switched to wheel mode, as shown in Figure 7, where it moves on its wheels. In wheel mode, the robot likewise moves forward and backward and turns left and right.


2.4. Electronic Design and Implementations

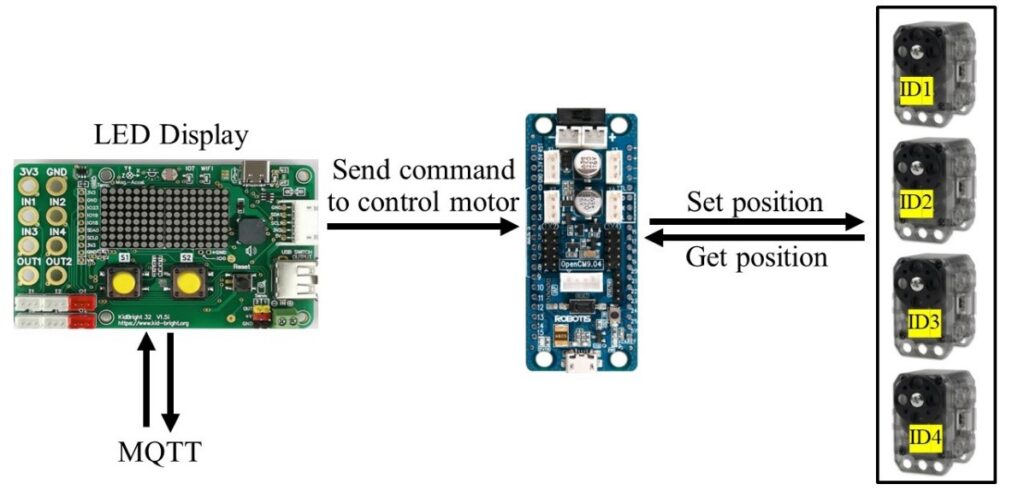

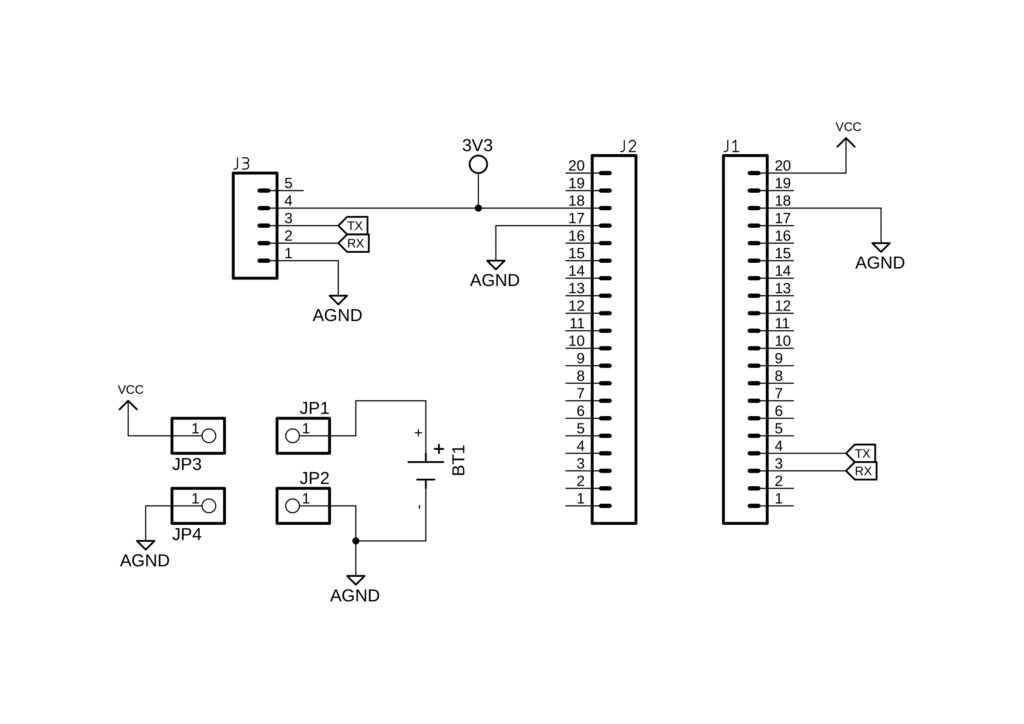

The KidBright microcontroller board was developed by the Educational Technology research team (EDT) at the National Electronics and Computer Technology Center (NECTEC), Thailand. It has been implemented in over 2,200 schools across the country, promoting STEM learning on a wide scale. The board, based on the ESP32 microcontroller, provides Internet of Things (IoT) connectivity between devices and supports external sensor modules through its I2C communication port. Figure 8 shows the connections among the KidBright board, the OpenCM9.04 controller, and the four DYNAMIXEL XL-320 servo motors. With its user-friendly interface, affordability, and IoT capabilities, the KidBright board is well suited to educational robots, both for in-class learning and for remote-control applications.

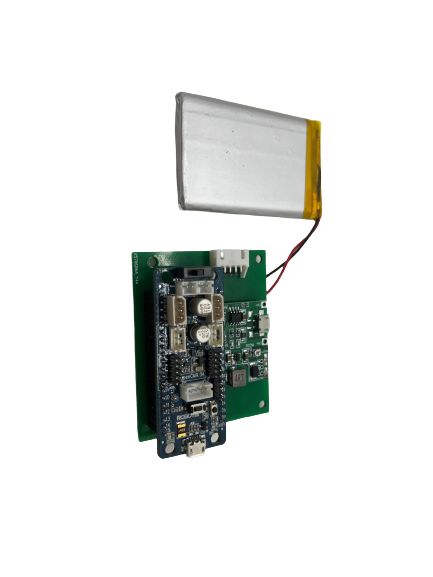

Figure 9 shows the OpenCM9.04 microcontroller board and battery pack, and Figure 10 shows the design of the battery-charging circuit. The OpenCM9.04 controls the DYNAMIXEL XL-320 motors, and the battery pack powers both the OpenCM9.04 and the motors. These components are essential to the educational robot because they supply the power needed to control its movement.

2.5. Software Design and Implementations

2.5.1. Education Robot

The robot control program has two parts. The KidBright program displays graphics on the board's screen and sends and receives data over the MQTT protocol; through it, the KidBright board receives the user's robot commands. The OpenCM9.04 program, written in C++, communicates with the KidBright board over the I2C protocol and drives the DYNAMIXEL XL-320 motors to the received positions. MQTT is a lightweight publish/subscribe messaging protocol that is well suited to IoT applications and is used to exchange messages between devices over a network. In the educational robot, it carries commands between the Microsoft HoloLens 2 and the KidBright board. The user can control the robot in two modes, wheel mode and walk mode, as shown in Figure 11 and Figure 12, respectively.
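The text says the OpenCM9.04 program moves the DYNAMIXEL XL-320 servos to received positions but does not show the servo traffic. The XL-320 uses DYNAMIXEL Protocol 2.0, so a position command is a WRITE instruction to the Goal Position entry of its control table (address 30, two bytes, range 0–1023). The Python sketch below builds such a packet for illustration only: the servo ID and target position are hypothetical, and on the OpenCM9.04 this would normally be handled by the ROBOTIS C++ libraries. The I2C message format between the KidBright board and the OpenCM9.04 is not described in the paper and is not modeled here.

```python
HEADER = bytes([0xFF, 0xFF, 0xFD, 0x00])  # Protocol 2.0 header plus reserved byte
INST_WRITE = 0x03
XL320_GOAL_POSITION = 30  # control-table address; 2 bytes; range 0-1023


def dxl_crc16(data: bytes) -> int:
    """CRC-16 of DYNAMIXEL Protocol 2.0: poly 0x8005, init 0, MSB-first, no final XOR."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x8005) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def goal_position_packet(servo_id: int, position: int) -> bytes:
    """Build a WRITE instruction that sets an XL-320's Goal Position.

    Byte stuffing is omitted: with these parameters the reserved pattern
    0xFF 0xFF 0xFD cannot occur in the instruction and parameter bytes.
    """
    if not 0 <= servo_id <= 252:
        raise ValueError("servo ID must be 0-252")
    if not 0 <= position <= 1023:
        raise ValueError("XL-320 position must be 0-1023")
    params = XL320_GOAL_POSITION.to_bytes(2, "little") + position.to_bytes(2, "little")
    length = len(params) + 3  # instruction byte + parameters + 2 CRC bytes
    body = HEADER + bytes([servo_id]) + length.to_bytes(2, "little") + bytes([INST_WRITE]) + params
    return body + dxl_crc16(body).to_bytes(2, "little")  # CRC is sent low byte first


# Hypothetical servo ID 1 and position 512 (roughly the middle of the range).
print(goal_position_packet(1, 512).hex(" "))
```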

2.5.2. Robot Assembly Procedures

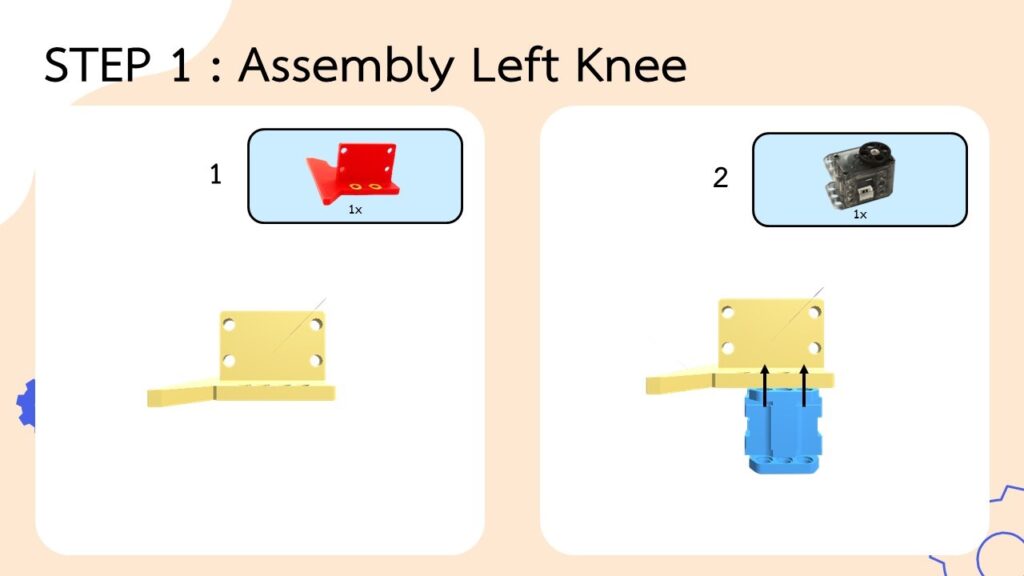

The assembly instructions for this research were modeled after LEGO's format to suit a young audience. They were designed to be intuitive and to accommodate users with no prior experience in robot assembly. The instructions use a 2D graphical format with distinct images and directional arrows to guide users through the assembly steps. Figure 13, the first page of the instructions, introduces the robot's parts along with the number of pieces and components required for assembly. Figure 14 details the first assembly step, constructing the left knee, which is divided into two sub-steps. The guide follows a systematic structure, with each step clearly labeled and numbered. These instructions are a crucial component of the educational robot kit: they facilitate the assembly process and deepen the user's understanding of the robot's parts and components.

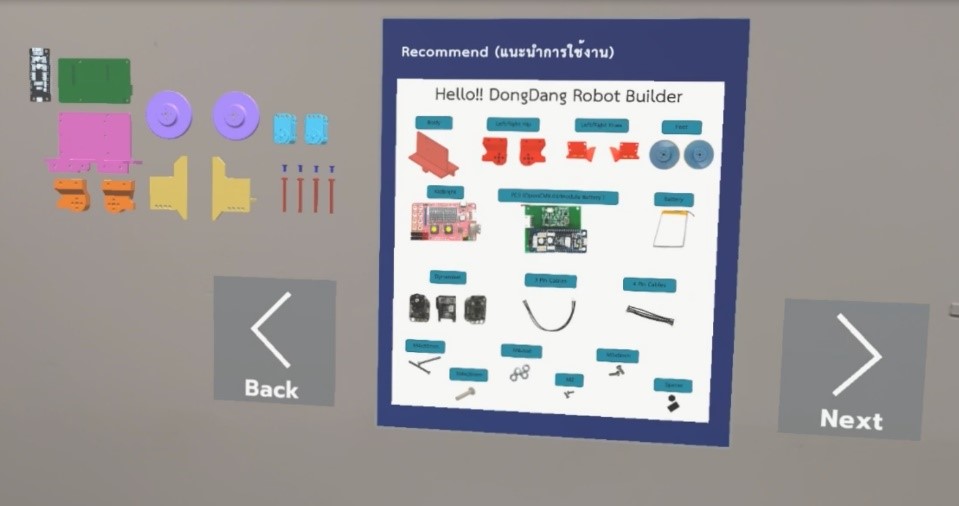

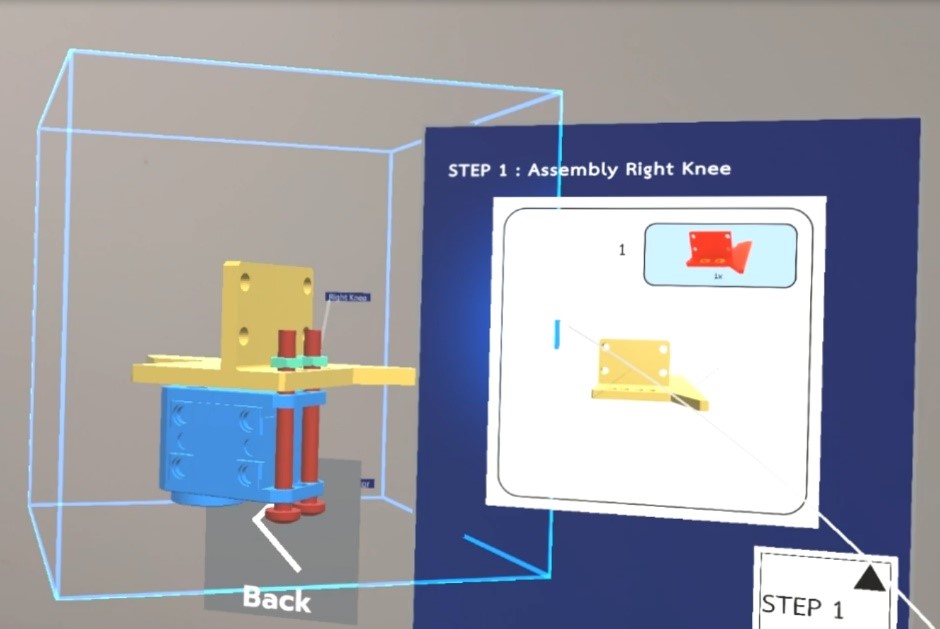

The 2D manual is implemented as a user interface (UI) on the Microsoft HoloLens 2 display, where it is presented in 3D. The 3D models can be scaled, rotated, or moved, and they can be animated in a loop to show the assembly process. Figure 15 shows the Next and Back UI buttons, which move to the next step or return to the previous step when a part has been assembled incorrectly. Figure 16 shows an assembly figure with its description. The UI was developed using the Unity game engine and was designed to be easy to use and understand, even for users with no prior experience with 3D applications.

3. System Implementations

3.1. Assembly System

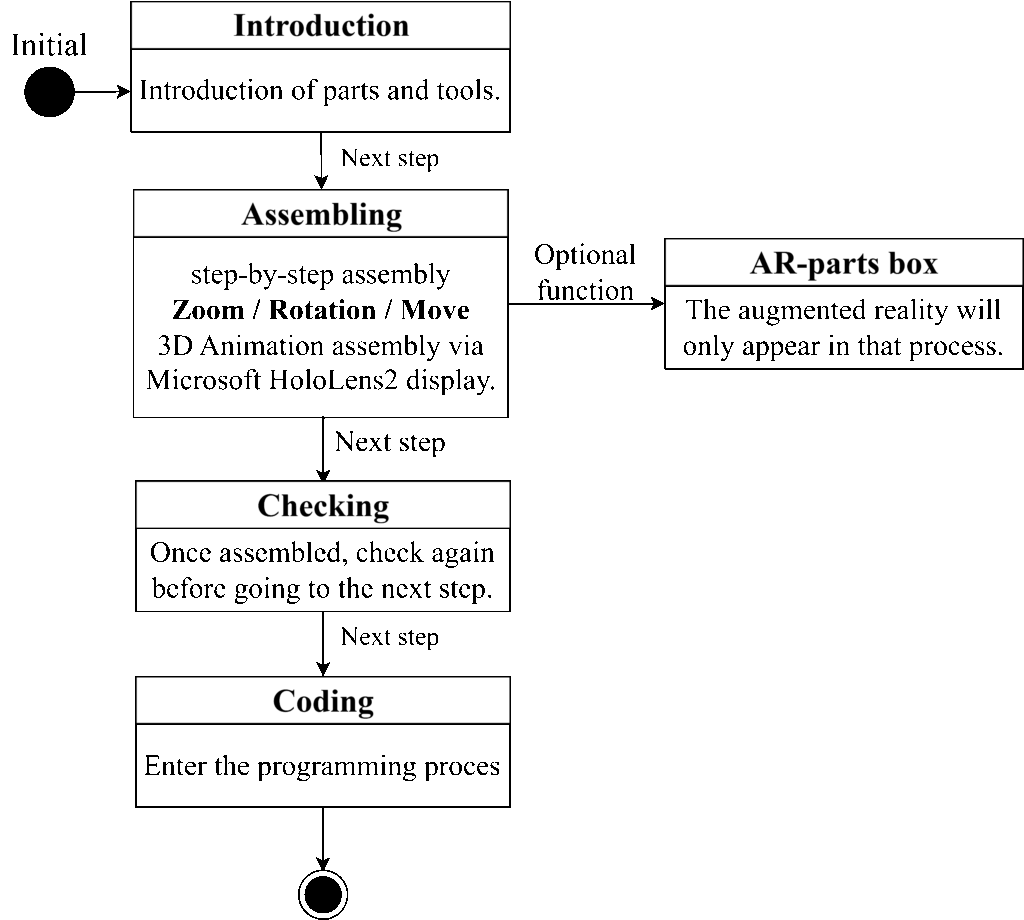

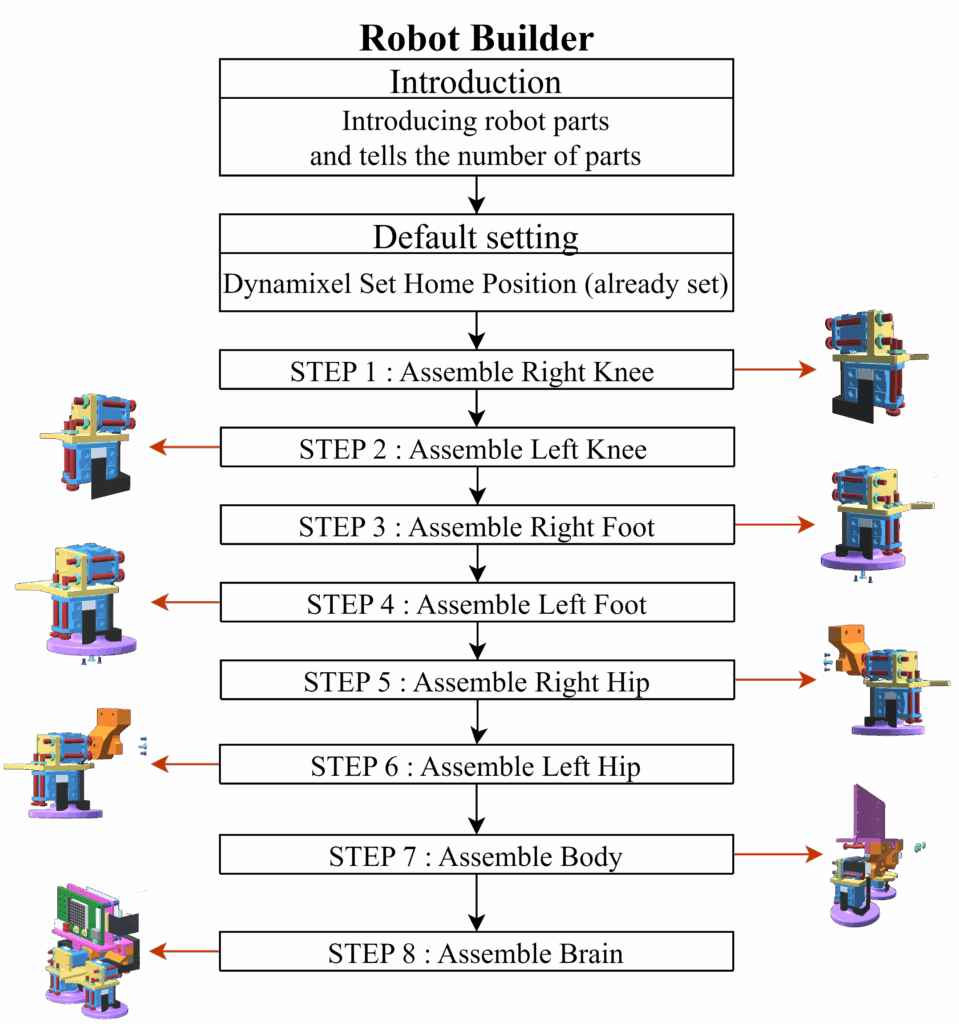

Figure 17 shows the state diagram of the ASCAT-AR system. First, the user launches the ASCAT-AR application, which presents a window showing the modeled robot components together with models of the necessary tools and equipment. Next, the user follows the interactive on-screen guide through the assembly process, from step one to step eight. AR highlights the exact location of each tool required for the current step, which makes assembly easier. After completing the assembly, the user can choose either to reassemble the robot or to proceed to programming its controller. At this point, the user can verify each assembly step to ensure completion. Once the assembly is confirmed to be correct, the application moves to the robot-control programming window.
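The flow in Figure 17 can be read as a small state machine. The Python sketch below is one possible model of it, assuming the eight guide steps described above, Next and Back navigation within the guide, and a verification check before programming is unlocked. The state names (`overview`, `assembling`, `choice`, `programming`) are hypothetical labels, not identifiers from the ASCAT-AR application.

```python
TOTAL_STEPS = 8  # the on-screen guide runs from step 1 to step 8


class AssemblyFlow:
    """Hypothetical model of the ASCAT-AR assembly flow (state names are illustrative)."""

    def __init__(self) -> None:
        self.state = "overview"  # window listing robot components and tools
        self.step = 0

    def _require(self, *states: str) -> None:
        if self.state not in states:
            raise RuntimeError(f"action not allowed in state {self.state!r}")

    def start(self) -> None:
        self._require("overview")
        self.state, self.step = "assembling", 1

    def next(self) -> None:
        self._require("assembling")
        if self.step < TOTAL_STEPS:
            self.step += 1
        else:
            self.state = "choice"  # reassemble, or verify and go on to programming

    def back(self) -> None:
        self._require("assembling")
        self.step = max(1, self.step - 1)  # return to the previous step after a mistake

    def reassemble(self) -> None:
        self._require("choice")
        self.state, self.step = "assembling", 1

    def confirm_assembly(self, verified: bool) -> None:
        self._require("choice")
        if verified:
            self.state = "programming"  # robot-control programming window
```

Keeping every transition behind an explicit guard means a button pressed in the wrong state (for example, Next while the programming window is open) is rejected instead of silently changing the flow.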

Figure 18 shows the sequence of the assembly process. Each step must be completed before the next one can begin; however, some robot modules can be assembled independently of the others.
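Because some modules can be built independently, the assembly order is a dependency graph rather than a single chain, which is what allows a pair of students to work on two modules at once. The sketch below uses Python's standard `graphlib.TopologicalSorter` to group steps into batches whose prerequisites are complete. The module names and dependencies are invented for illustration and do not reproduce Figure 18.

```python
from graphlib import TopologicalSorter

# Hypothetical module dependencies for illustration (NOT taken from Figure 18):
# each key lists the modules that must be finished before it can start.
DEPENDENCIES = {
    "left_leg": set(),
    "right_leg": set(),
    "head": set(),
    "body": {"left_leg", "right_leg"},
    "final_assembly": {"body", "head"},
}


def parallel_batches(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group steps into batches; every step in a batch has all prerequisites done."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies are circular
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)
    return batches


for i, batch in enumerate(parallel_batches(DEPENDENCIES), start=1):
    print(f"batch {i}: {batch}")
```

Each module in a batch can be assigned to a different student; the next batch unlocks only when all of its prerequisites are done.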

3.2. Blockly System and Robot Control

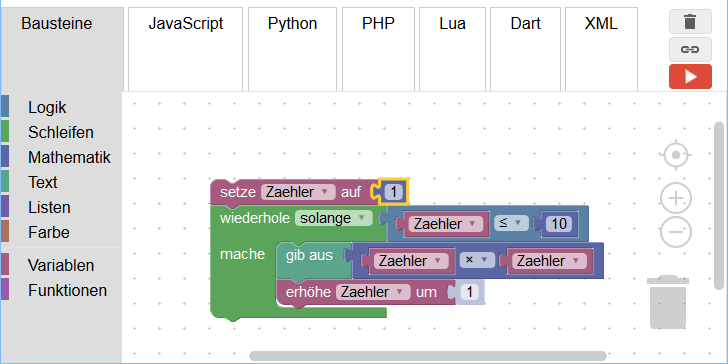

Figure 19 shows code built with Blockly, an open-source visual programming language that lets users write code through a block-based interface. The block interface follows the prototype concept of Google's Blockly [14] and is designed to be easy and intuitive to use, which makes it an excellent tool for teaching programming concepts to children and other beginners, who can build programs quickly from visual blocks. Blockly is also flexible and can be used to create many kinds of programs [17]. Overall, Blockly is a powerful, user-friendly visual programming language and a popular choice for both teaching and development.

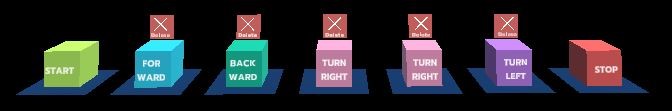

The command set designed for the ASCAT-AR system comprises an MQTT connection button, a robot transformation button, movement command buttons (front, back, left, and right), and a run button, as shown in Figure 20. The movement command buttons control the movement of the robot: pressing one creates a command block in the scene, which the user can pick up and place in any available position. The programming window holds at most five command blocks at a time, as shown in Figure 21. Once the commands are in the desired order, the user presses the run button to send them to the robot. The instruction set is deliberately simple, which keeps robot movement control accessible to beginners.
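The programming window described above can be modeled as a bounded, reorderable list of command blocks. In the Python sketch below, the five-block limit and the four movement directions come from the text, while the class names and the `walk:F,F,L` text payload are hypothetical choices for illustration; the paper does not specify how blocks are serialized before sending.

```python
from enum import Enum

MAX_BLOCKS = 5  # the programming window holds at most five command blocks


class Move(Enum):
    FRONT = "F"
    BACK = "B"
    LEFT = "L"
    RIGHT = "R"


class BlockProgram:
    """Ordered command blocks, as arranged by the user before pressing Run."""

    def __init__(self) -> None:
        self.blocks: list[Move] = []

    def add(self, move: Move) -> None:
        if len(self.blocks) >= MAX_BLOCKS:
            raise ValueError(f"at most {MAX_BLOCKS} command blocks are allowed")
        self.blocks.append(move)

    def reorder(self, src: int, dst: int) -> None:
        """Pick up the block at index src and drop it at index dst."""
        block = self.blocks.pop(src)
        self.blocks.insert(dst, block)

    def to_payload(self, mode: str) -> str:
        """Serialize to a hypothetical text payload such as 'walk:F,F,L'."""
        return f"{mode}:" + ",".join(m.value for m in self.blocks)


program = BlockProgram()
for move in (Move.FRONT, Move.LEFT, Move.FRONT):
    program.add(move)
program.reorder(1, 2)  # drag the LEFT block to the end
print(program.to_payload("walk"))
```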

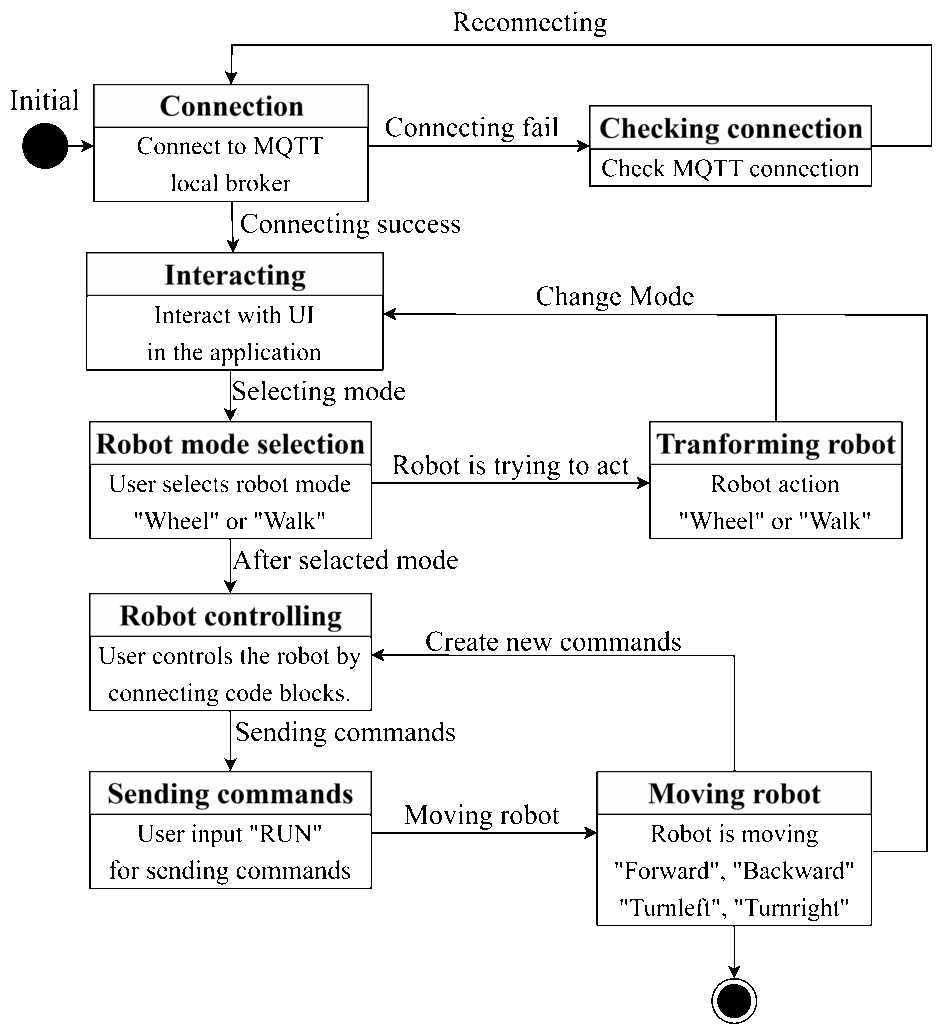

The state diagram of robot control in Figure 22 describes how robot commands are sent to the educational robot. First, the user connects to the MQTT server by clicking the MQTT button on the screen. Once the application is connected to the MQTT server, the user can select the robot's operating mode: wheel mode, in which the robot moves like a car, or walk mode, in which it walks like a human. The user can select the Front, Back, Left, Right, and Turn commands to move the robot in the desired direction. After completing the block code, the user clicks the Run button to send the commands. As shown in Figure 23, the robot then moves according to the selected commands.
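The ordering constraints in this state diagram (connect first, then choose a mode, then run) can be enforced with a few guards. In the minimal Python sketch below, `RobotSession` and the injected `publish` callable are hypothetical stand-ins for the application's MQTT client, and the payload format is an assumption.

```python
from typing import Callable, Optional, Sequence

MODES = ("wheel", "walk")  # the two operating modes described in the text


class RobotSession:
    """Hypothetical control session: connect, choose a mode, then run a program."""

    def __init__(self, publish: Callable[[str], None]) -> None:
        self._publish = publish  # stand-in for an MQTT client's publish call
        self.connected = False
        self.mode: Optional[str] = None

    def connect(self) -> None:
        # A real client would open the connection to the MQTT server here.
        self.connected = True

    def select_mode(self, mode: str) -> None:
        if not self.connected:
            raise RuntimeError("connect to the MQTT server before selecting a mode")
        if mode not in MODES:
            raise ValueError(f"unknown mode {mode!r}; expected one of {MODES}")
        self.mode = mode

    def run(self, commands: Sequence[str]) -> None:
        if not self.connected or self.mode is None:
            raise RuntimeError("connect and select a mode before pressing Run")
        self._publish(f"{self.mode}:" + ",".join(commands))  # hypothetical payload format


sent: list[str] = []
session = RobotSession(publish=sent.append)
session.connect()
session.select_mode("wheel")
session.run(["F", "F", "R"])
print(sent)
```

Injecting `publish` keeps the control logic independent of any particular MQTT library, so the guards can be exercised without a broker.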

4. Experimental Results

The experimental results of this research cover system performance, usability, and values for specific tasks. System performance is measured as the rendering frame rate of the ASCAT-AR application on the Microsoft HoloLens 2. Usability tests evaluate user satisfaction, ability to learn, and ease of learning. Values for specific tasks are evaluated from recorded assembly times, success rates, and error rates.

4.1. Population and Requirement

The participants were 24 high school students in Thailand. They were divided into two groups to compare the ASCAT-AR system with the assembly manual.

4.2. Evaluation Tools

4.2.1. Robot assembly record form

This form records the time each student spent on robot assembly, the point at which an assembly step failed, the time taken to correct a wrongly assembled part, and the number of errors.

4.2.2. Pre-questionnaire and post-questionnaire

The pre-questionnaire and post-questionnaire include general questions about the user's background and experience and suggestions for improving the system, as well as questions about values for specific tasks and satisfaction with the proposed system.

4.2.3. Satisfaction questionnaire

The satisfaction questionnaire collects feedback on users' satisfaction with the content and system performance of the ASCAT-AR system.

4.2.4. Comparative assessment form

This assessment form was applied to both experimental groups to compare motivation and the differences between the two tools.

4.3. Procedure of Experiment

The experiments were designed for Thai high school students aged 13–18 who had some or no prior experience in engineering. They give participants practical experience in robot assembly and in using the Microsoft HoloLens 2 headset.

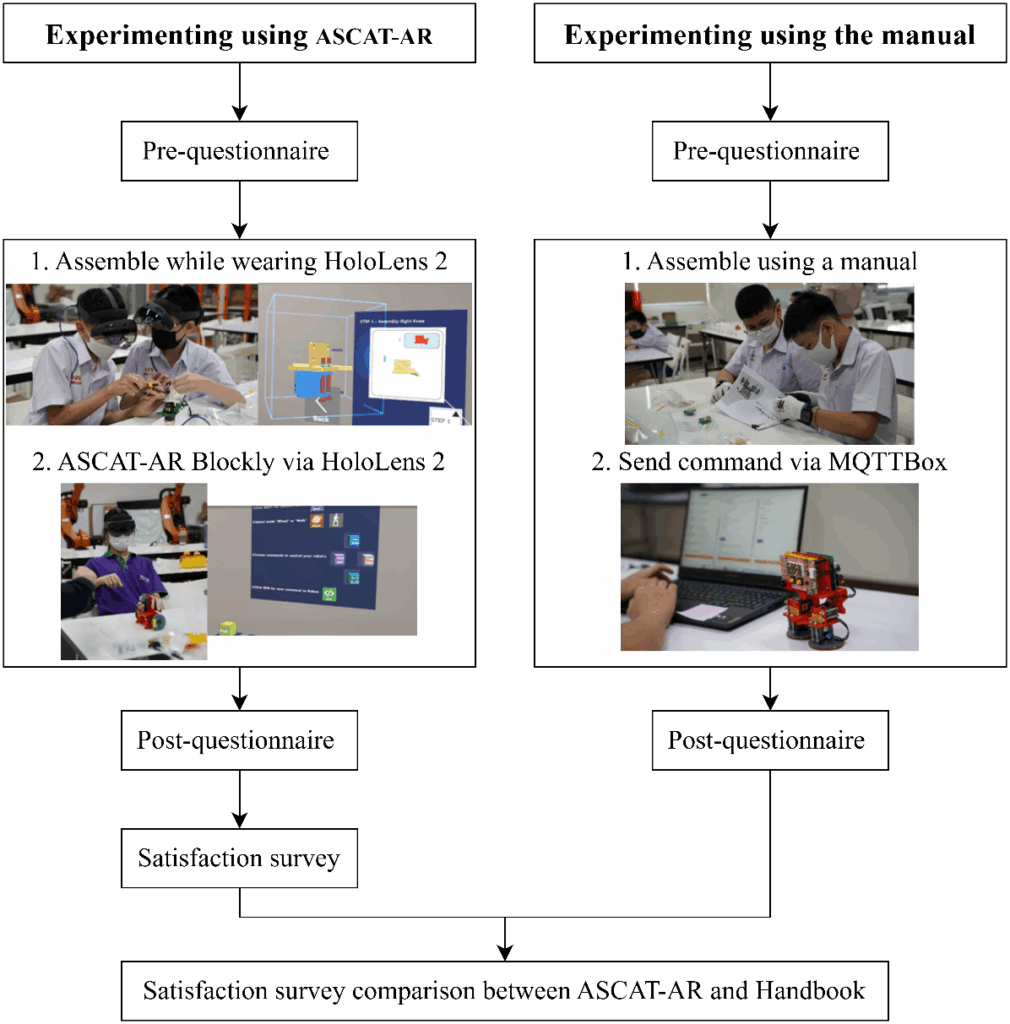

Volunteers were divided into two groups to avoid carryover from memorizing the operation flow and the AR content. The ASCAT-AR system was tested with 12 students, while the other 12 students used the assembly manual. Pre- and post-questionnaires collected the students' knowledge of and interest in the robot, as well as their opinions of the experiment.

As shown in Figure 24, students with no robot-assembly experience were divided into two groups. The experiment consisted of the following steps:

Step 1: Students completed a preliminary questionnaire.

Step 2: Each group assembled the robot with a different tool. The aim of this exercise was to give students experience with engineering tools and AR technology. The expected learning outcome was that students in both groups could complete the robot assembly and control the robot's movement.

Step 3: After completing the robot assembly and robot control, each group answered a post-questionnaire.

Step 4: Students who worked with the ASCAT-AR system completed the satisfaction questionnaire.

While the experiments were being conducted, the researchers also observed the students' behaviors and reactions and interviewed them about their experiences using the ASCAT-AR system and the instruction manual.

4.4. Experimental Results

The study was designed to evaluate how effectively the ASCAT-AR system enhances student learning in STEM education, particularly in robot assembly tasks. The participants were 24 high school students aged 13–18 from Thailand with no prior experience in robotics. The study compared two learning tools: the ASCAT-AR system, which provides augmented reality guidance via the Microsoft HoloLens 2, and a traditional printed 2D manual.

Data were collected using a combination of observation forms, questionnaires, and system logs. During the assembly phase, completion time, error rate, and task success rate were recorded for each participant. Pre- and post-experiment questionnaires assessed all participants' knowledge of educational robot assembly and movement control, as well as their satisfaction with the learning process.

The collected data were analyzed using statistical methods to compare the performance of the two groups. Assembly times were averaged, and error rates were calculated as percentages of incorrect assembly steps.
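As a sketch of the analysis described above, the Python code below summarizes per-participant entries from the assembly record form: minimum, maximum, and mean assembly time, and the error rate as a percentage of incorrect assembly steps. The field names and sample records are hypothetical, and pooling errors across all participants (rather than averaging per-participant rates) is an assumption, since the paper does not say which was used.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class AssemblyRecord:
    """One participant's entry from the assembly record form (hypothetical fields)."""
    seconds_spent: int
    steps_attempted: int
    incorrect_steps: int


def to_hms(seconds: float) -> str:
    """Format seconds as h:mm:ss, the format used in Table 4."""
    s = round(seconds)
    return f"{s // 3600}:{s % 3600 // 60:02d}:{s % 60:02d}"


def summarize(records: list[AssemblyRecord]) -> dict:
    times = [r.seconds_spent for r in records]
    total_steps = sum(r.steps_attempted for r in records)
    total_errors = sum(r.incorrect_steps for r in records)
    return {
        "min": to_hms(min(times)),
        "max": to_hms(max(times)),
        "mean": to_hms(mean(times)),
        # pooled error rate: incorrect steps as a percentage of all attempted steps
        "error_rate_pct": 100.0 * total_errors / total_steps,
    }


# Hypothetical sample data, not results from the study.
sample = [AssemblyRecord(3600, 8, 2), AssemblyRecord(4200, 8, 0), AssemblyRecord(3000, 8, 2)]
print(summarize(sample))
```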

4.4.1. System Performance

AR content is rendered on the Microsoft HoloLens 2 at a frame rate high enough to keep the graphics smooth and responsive, which enhances the user's experience. Figure 25 shows that the application renders at 44–60 FPS with a resolution of 1440×936 pixels per eye.
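For reference, the reported frame rates translate into per-frame rendering times as follows:

$$
t_{\text{frame}} = \frac{1000\ \text{ms}}{f_{\text{FPS}}}, \qquad
t_{\text{frame}}(60) = \frac{1000}{60} \approx 16.7\ \text{ms}, \qquad
t_{\text{frame}}(44) = \frac{1000}{44} \approx 22.7\ \text{ms}
$$

so each frame was produced in roughly 17 to 23 ms.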

4.4.2. Usability

Learners who used the ASCAT-AR system and learners who used the instruction manual to assemble and control the robot evaluated their tool for system benefits, ease of use, ease of learning, and user satisfaction. Each aspect was rated on a 5-point Likert scale (1 = lowest, 5 = highest) by the 24 participants.

Table 3: Results of the satisfaction survey comparing the ASCAT-AR system and the instruction manual

| Item (mean score, 1–5) | ASCAT-AR | Manual |
|---|---|---|
| Learnability | | |
| I can learn about more parts of the robot. | 3.67 | 4.08 |
| I can perform cooperative task in assembling robots. | 4.42 | 4.75 |
| I want to complete the final robot assembly. | 4.83 | 4.75 |
| I think it took a long time after using this learning tool. | 3.58 | 3.50 |
| Easiness of control | | |
| I find it difficult, and I want to quit. | 2.25 | 2.67 |
| Satisfaction | | |
| I am interested and would like to learn more. | 4.17 | 4.58 |
| How much motivation does the system provide for me to build a robot? | 4.17 | 3.92 |

Table 3 shows the results of the satisfaction survey for the two tools. On the learnability items, the ASCAT-AR group scored higher on wanting to complete the final robot assembly (4.83 vs. 4.75), whereas the manual group scored higher on learning about more robot parts (4.08 vs. 3.67) and on cooperative assembly (4.75 vs. 4.42). On the negatively worded easiness-of-control item, the ASCAT-AR group reported less difficulty (2.25 vs. 2.67), even though participants were using the Microsoft HoloLens 2 for the first time. On satisfaction, the manual group expressed more interest in learning more (4.58 vs. 4.17), while the ASCAT-AR group rated the motivation the system gave them to build a robot higher (4.17 vs. 3.92), supporting the view that the ASCAT-AR system can motivate users and help them assemble and control the robot.
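One easiness-of-control item in Table 3, "I find it difficult, and I want to quit.", is negatively worded, so a lower mean is the favorable result. A common convention for putting such items on the same footing as positively worded ones is to reverse-code them on the 1–5 scale (the new score is 6 minus the old score). The Python sketch below applies that convention to the Table 3 means; the reverse-coding step is an analysis choice added here for illustration, not one reported in the paper.

```python
SCALE_MIN, SCALE_MAX = 1, 5  # the 5-point Likert scale used in Table 3


def reverse_code(score: float) -> float:
    """Flip a negatively worded item so that a higher value is more favorable."""
    return SCALE_MIN + SCALE_MAX - score


# Group means for "I find it difficult, and I want to quit." (Table 3)
difficulty = {"ASCAT-AR": 2.25, "Manual": 2.67}
ease = {group: round(reverse_code(score), 2) for group, score in difficulty.items()}
print(ease)
```

After reverse-coding, the ASCAT-AR group's ease score is 3.75 and the manual group's is 3.33.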

4.4.3. Values for Specific Task

Participants in both the ASCAT-AR group and the manual group were given a time limit of 2 hours to complete the task. Table 4 shows the minimum, maximum, and average robot assembly times for both groups.

Table 4: Time spent on task completion

| Group | Min time (h:mm:ss) | Max time (h:mm:ss) | Average time (h:mm:ss) |
|---|---|---|---|
| ASCAT-AR | 0:49:47 | 1:53:56 | 1:12:24 |
| Manual | 0:59:39 | 1:40:35 | 1:18:12 |
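The differences in Table 4 are easier to compare in seconds. The short Python sketch below converts the table's h:mm:ss values and computes, for each statistic, the manual group's time minus the ASCAT-AR group's time.

```python
def hms_to_seconds(hms: str) -> int:
    """Convert an h:mm:ss string (as in Table 4) to seconds."""
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return 3600 * hours + 60 * minutes + seconds


# Values copied from Table 4.
TABLE_4 = {
    "ASCAT-AR": {"min": "0:49:47", "max": "1:53:56", "average": "1:12:24"},
    "Manual": {"min": "0:59:39", "max": "1:40:35", "average": "1:18:12"},
}

differences = {
    stat: hms_to_seconds(TABLE_4["Manual"][stat]) - hms_to_seconds(TABLE_4["ASCAT-AR"][stat])
    for stat in ("min", "max", "average")
}
print(differences)  # positive value: the ASCAT-AR group was faster on that statistic
```

The ASCAT-AR group was 348 s (5:48) faster on average, about 7.4% of the manual group's 1:18:12, and 592 s faster at the minimum, but its slowest participant took 801 s longer than the manual group's slowest. Its spread between fastest and slowest completion (1:04:09) is therefore wider than the manual group's (0:40:56).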

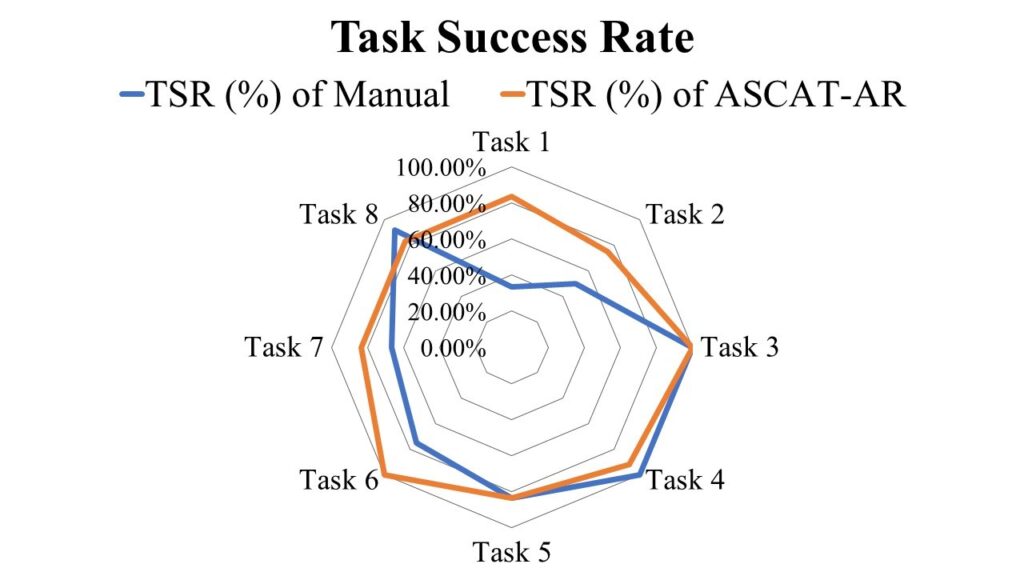

Figure 26 shows the task success rate (TSR), a usability measure giving the percentage of users who complete each step, which indicates how effectively the system helps users achieve their goals. Task success can be influenced by factors such as user interaction with the interface, overall user experience, and user motivation. Students using the ASCAT-AR system had consistently high success rates from assembly step 1 to the final step 8, ranging from 75% to 100%. In steps 1 and 2, students who used the ASCAT-AR system had higher success rates than those who used the instruction manual, whose success rate in step 1 was 33%.
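The TSR for a step is the number of participants who completed that step divided by the number who attempted it, expressed as a percentage. The Python sketch below computes it per step. With 12 students in a group, each student accounts for about 8.3 percentage points, so 75% corresponds to 9 of 12 students and the manual group's 33% in step 1 is consistent with 4 of 12; the per-step counts used in the example are hypothetical.

```python
def task_success_rate(successes: int, attempts: int) -> float:
    """TSR for one step: percentage of participants who completed it successfully."""
    if attempts <= 0:
        raise ValueError("attempts must be positive")
    if not 0 <= successes <= attempts:
        raise ValueError("successes must lie between 0 and attempts")
    return 100.0 * successes / attempts


def per_step_tsr(successes_by_step: list[int], group_size: int) -> list[float]:
    """TSR for each assembly step, rounded to one decimal place."""
    return [round(task_success_rate(s, group_size), 1) for s in successes_by_step]


# Hypothetical success counts for a 12-student group (not the study's data).
print(per_step_tsr([9, 12, 4], group_size=12))
```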

In terms of error rates, students who used the ASCAT-AR system made fewer errors than those who assembled the robot with the manual. The ASCAT-AR system provides a prototype model that can be rotated 360 degrees for a detailed and accurate view of each assembly position, unlike the 2D figures in the manual.

5. Conclusions and Discussions

This research focuses on the development of the ASCAT-AR system using AR technology. Augmented reality has been developed and applied in various fields, such as education, medicine, tourism, industry, and entertainment [18]. AR technology has the potential to improve the learning experience: it can help learners better understand and remember educational content because it engages them in learning, which may lead to creative teaching methods and better educational outcomes [19]. STEM curricula are currently being promoted in Thailand, but some teachers have limited knowledge of STEM education and lack the capability to integrate STEM into their teaching practices [20]. The proposed system provides AR content aligned with the STEM curriculum, which integrates multiple subjects. The goal is to equip students with knowledge and understanding of technology and engineering, enhance their collaborative skills, and foster their interest in applying these competencies in future careers. In particular, the system develops collaborative skills by engaging students in a collaborative robot assembly task. Its core hardware consists of the Microsoft HoloLens 2 and an educational robot. The ASCAT-AR system displays 3D models and animations that help students explore the robot's structure and components in augmented reality, through a user-friendly interface that makes it accessible to non-engineering students. The application and the robot interact in real time, enhancing learning and providing hands-on robot control experience. The study found that students who used the ASCAT-AR system were more motivated to complete the robot assembly than those who used the manual. The task completion times of the two groups differed only slightly, which may reflect differences in the learning ability of individual students.

In addition, the 3D model visualization in the ASCAT-AR system helps users see the positions of robot parts and the assembly sequence more clearly, which increases the success rate of robot assembly.

The evaluation covered all three aspects: system performance, usability, and values for specific tasks. The results demonstrated that the ASCAT-AR system can increase users' interest in learning and help them gain knowledge and skills in robot technology and programming. The robot was also found to be easy to control using the ASCAT-AR system. The proposed system not only teaches users how to program and control the robot but also enhances their understanding of technological concepts and fosters their creativity and collaboration skills.

In future work, improved 3D object detection could enhance the system's performance and make the robot easier to assemble. The system could also be applied to other subjects to give learners a visualization of the concepts involved.

Conflict of Interest

The authors declare no conflict of interest.

- B.E. Penprase, STEM Education for the 21st Century, Springer International Publishing, Cham, 2020, doi:10.1007/978-3-030-41633-1.

- P. Armstrong, "Bloom's Taxonomy," Vanderbilt University Center for Teaching, 2010.

- Bai, H. Song, “21st Century Skills Development through Inquiry-Based Learning: From Theory to Practice,” Asia Pacific Journal of Education, vol. 38, no. 4, pp. 584–586, 2018, doi:10.1080/02188791.2018.1452348.

- L.W. Anderson, D.R. Krathwohl, P.W. Airasian, K.A. Cruikshank, R. Mayer, P.R. Pintrich, J. Raths, M.C. Wittrock, A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom's Taxonomy of Educational Objectives, 2001.

- World Economic Forum, “Schools of the Future: Defining New Models of Education for the Fourth Industrial Revolution,” World Economic Forum Reports, January 2020.

- W. Srikoom, D.L. Hanuscin, C. Faikhamta, "Perceptions of In-service Teachers toward Teaching STEM in Thailand," Asia-Pacific Forum on Science Learning and Teaching, vol. 18, no. 2, p. 1, 2017.

- J. Garzón, J. Pavón, S. Baldiris, "Systematic Review and Meta-analysis of Augmented Reality in Educational Settings," Virtual Reality, vol. 23, no. 4, pp. 447–459, 2019, doi:10.1007/s10055-019-00379-9.

- C.E. Hughes, C.B. Stapleton, D.E. Hughes, E.M. Smith, "Mixed Reality in Education, Entertainment, and Training," IEEE Computer Graphics and Applications, vol. 25, no. 6, pp. 24–30, 2005, doi:10.1109/MCG.2005.139.

- B., Gleb; I., VR vs AR vs MR: Differences and Real-Life Applications, RubyGarage, 2020.

- L. Hutner, V. Sampson, L. Chu, C.L. Baze, R.H. Crawford, “A Case Study of Science Teachers’ Goal Conflicts Arising when Integrating Engineering into Science Classes,” Science Education, vol. 106, no. 1, pp. 88–118, 2022, doi:10.1002/sce.21690.

- Koolnapadol, A. Nokkaew, P. Tuksino, “The Study of STEM Education Management Connecting the Context of Science Teachers in the School of Extension for Educational Opportunities in the Central Region of Thailand,” International Journal of Science and Innovative Technology, vol. 2, June 2019, pp. 121–130.

- N. and K.K. Promboon, Sumonta, and Finley, “The Evolution and Current Status of STEM Education in Thailand: Policy Directions and Recommendations,” in STEM Education in the Nation: Policies, Practices, and Trends, Springer Singapore, Singapore, pp. 423–459, 2018, doi:10.1007/978-981-10-7857-6_17.

- Sutaphan, C. Yuenyong, “STEM Education Teaching Approach: Inquiry from the Context Based,” Journal of Physics: Conference Series, vol. 1340, no. 1, p. 012003, 2019, doi:10.1088/1742-6596/1340/1/012003.

- Suriyabutr, J. Williams, “Integrated STEM Education in Thai Secondary Schools: Challenges and Addressing of Challenges,” Journal of Physics: Conference Series, vol. 1957, no. 1, p. 012025, 2021, doi:10.1088/1742-6596/1957/1/012025.

- B. Olson, “Otto: A Low-Cost Robotics Platform for Research and Education,” 2001.

- Otto DIY, “Build Your Own Robot Like a Ninja,” Brno, Czech Republic, 2021.

- D. Group, “Try Blockly,” Google, 2021.

- F.Z. Eishita, K.G. Stanley, “The Impact on Player Experience in Augmented Reality Outdoor Games of Different Noise Models,” Entertainment Computing, vol. 27, 2018, doi:10.1016/j.entcom.2018.04.006.

- Brizar, D. Kažović, “Potential Implementation of Augmented Reality Technology in Education,” in 2023 46th MIPRO ICT and Electronics Convention (MIPRO), pp. 608–612, 2023, doi:10.23919/MIPRO57284.2023.10159865.

- S. Pitiporntapin, P. Chantara, W. Srikoom, P. Nuangchalerm, L.M. Hines, “Enhancing Thai In-service Teachers’ Perceptions of STEM Education with Tablet-based Professional Development,” Asian Social Science, vol. 14, no. 10, p. 13, 2018, doi:10.5539/ass.v14n10p13.
