Vowel Classification Based on Waveform Shapes

Volume 4, Issue 3, Page No 16–24, 2019

Adv. Sci. Technol. Eng. Syst. J. 4(3), 16–24 (2019);

DOI: 10.25046/aj040303

Keywords: Vowel recognition, Speech waveform features, Image processing, ANN, SVM, XGBoost

Vowel classification is an essential part of speech recognition. In classical studies, this problem is mostly handled using spectral domain features. In this study, a novel approach is proposed for vowel classification based on the visual features of speech waveforms. When a sound is vocalized, the positions of certain organs of the human vocal system, such as the tongue, lips and jaw, strongly affect the waveform shape of the produced sound. The motivation for employing visual features instead of classical frequency domain features is their potential use in specific applications such as language education. Even though this study is confined to Turkish vowels, the developed method can be applied to other languages as well, since the shapes of the vowels show similar patterns. Turkish vowels are grouped into five categories. For each vowel group, a time domain speech waveform spanning two pitch periods is treated as an image. A series of morphological operations is performed on this waveform image to obtain the geometric characteristics representing the shape of each class. The extracted visual features are then fed into three different classifiers, and their classification performance is compared with that of classical methods. The proposed visual features are observed to achieve promising classification rates.

1. Introduction

Vowel classification has been an attractive research field, with growing intensity in recent years. It is closely related to voice activity detection, speech recognition, and speaker identification. Vowels are the main parts of speech and the basic building units of all languages; intelligible speech would not be possible without them. They are the high-energy parts of speech and show almost periodic patterns. Therefore, they can be easily identified by the time characteristics of their speech waveforms. Each vowel is produced as a result of vocal cord vibrations. The frequency of these vibrations is known as the pitch frequency, which is characteristic of both the speech and the speaker. Pitch frequency variations occur mainly in voiced parts, which are mostly formed by vowels. Consequently, vowels are an important source of features in speech processing.

Detecting the locations of the vowels in an utterance is critical in speech recognition because their order, which represents the syllable structure of the word, can help determine the possible candidate words. In addition, voice activity detection can be accomplished by determining the voiced parts of speech, which consist mainly of vowels. Speech processing technologies using spectral methods also depend on vowels and other voiced parts of speech. These methods are mostly built on the magnitude spectrum representation, which displays peaks and troughs along the frequency axis. Voiced segments of speech cause such peaks in the magnitude spectrum. The frequencies corresponding to these peaks, known as formants, are useful both for classifying the speech signal and for identifying the speaker. Therefore, vowels are indispensable in speech processing [1].

There are quite a number of studies on vowel classification in the literature. Most of them are based on frequency domain analysis, using features such as formant frequencies [2,3], linear predictive coding coefficients (LPCC), perceptual linear prediction (PLP) coefficients [4], mel frequency cepstral coefficients (MFCC) [5,6,7,8], wavelets [9], spectro-temporal features [10], and spectral decomposition [11]. There are fewer studies using time domain analysis [12, 13]. There are also vowel classification studies on imagined speech [14].

Although most studies on speech recognition make use of acoustic features, the visual characteristics of speech waveform shapes can also carry meaningful information about the speech. Shape characteristics such as the envelope of the waveform, the area under the waveform, and other geometrical measurements can be utilized for classification. These properties can be extracted with basic image processing techniques such as edge detection and morphological processing; in other words, a speech waveform can be treated as an image. The term visual features generally refers to the shapes of the mouth and lips, which have also been used in vowel classification [15]. Some articles in the literature treat speech and sound signals as images, but many of them use visual properties from the spectral domain. Matsui et al. [16] propose a musical feature extraction technique based on the scale invariant feature transform (SIFT), a feature extractor used in image processing. Dennis et al. [17] use visual signatures from the spectrogram for sound event classification. Schutte offers a parts-based model that employs a graphical-model-based speech representation applied to the spectrogram image of speech [18]. Dennis et al. [19] propose another method for recognizing overlapping sound events using visual local features from the spectrogram and the generalized Hough transform. Apart from these time-frequency approaches, Dulas treats the speech signal in the time domain. He proposes an algorithm for digit recognition in Polish that makes use of the envelope pattern of the speech signal. A binary matrix is formed by placing a grid on one pitch period of the speech signal. Similarity coefficients are then calculated by comparing the matrix to be analyzed with the five preceding and five following matrices [20, 21].
Dulas also applies the same approach to finding inter-phoneme transitions [22].

In this paper, we propose visual features obtained from the shapes of speech waveforms to classify vowels. We are inspired by the fact that one can distinguish vowels by visually inspecting their shapes. The proposed approach, hereafter called the Speech Vision (SV) method, treats the speech waveform as an image. The images corresponding to the respective vowels are formed from speech segments two pitch periods long. After several image processing techniques are applied to these waveform images, geometrical descriptors are extracted from them. These descriptors are then used to train Artificial Neural Network (ANN), Support Vector Machine (SVM), and eXtreme Gradient Boosting (XGB) models to recognize the vowels. Experiments show that comparable recognition rates are obtained. The use of visual features clearly separates the application areas of the classical frequency domain approach from those of our method. One possible application is language education, especially learning a foreign language, where a learner's pronunciation of vowels needs to be tested, or where learners try to reproduce the shape of a vowel while seeing both their own pronunciation and the ideal shape of that vowel, for example on a screen. By the same token, the method could also be used in speech training for people with hearing disabilities. Another possible application is text-to-speech conversion, in which ideal vowel shapes could be used to enhance the quality of digital speech.

This paper is organized as follows. Section 2 discusses vowels and their properties. Section 3 presents the proposed method in detail. Tests and results are given in Section 4, which also compares the method with other vowel classification studies in the literature (Section 4.1). Finally, conclusions and discussion appear in Section 5.

2. Characteristics of Vowels

In Turkish, there are 8 vowels and 21 consonants. The vowels are {a,e,ı,i,o,ö,u,ü}. There are 44 phonemes, 15 of which are derived from vowels and the rest from consonants. The production of vowels depends mainly on the positions of the tongue, lips and jaw. For instance, for the vowel “a”, the tongue is moved back, the lips are unrounded and the jaw is wide open. Each vowel is therefore produced by a different configuration of the parts of the mouth. Based on the shape of the mouth, Turkish vowels are distributed as given in Table 1 [23]. According to this table, the vowels fall into several categories. For example, {a,ı,o,u} are vocalized with the tongue pulled back, while {e,i,ö,ü} are vocalized with the tongue pushed forward. Similarly, {a,e,ı,i} are produced with unrounded lips and {o,ö,u,ü} with rounded lips. We establish the vowel groups classified in this study according to the positions of the lips and jaw.

Table 1: Classes of Turkish vowels

| Tongue | Unrounded lips, wide jaw | Unrounded lips, narrow jaw | Rounded lips, wide jaw | Rounded lips, narrow jaw |
|---|---|---|---|---|
| Back | a | ı | o | u |
| Front | e | i | ö | ü |

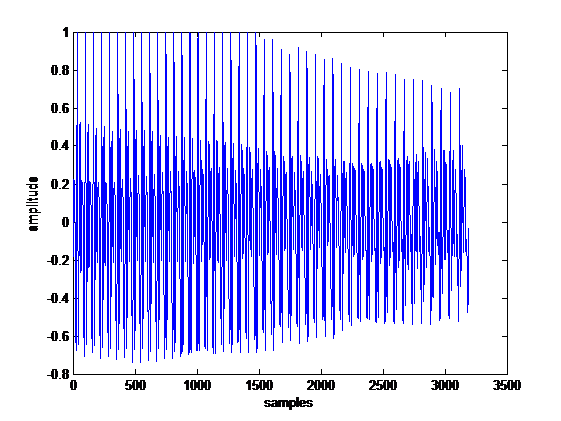

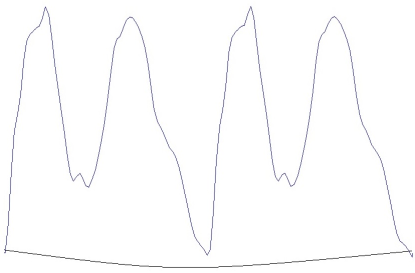

By a voice plot we mean the graph of the signal amplitude against time. A sample plot of a recorded vowel is given in Figure 1.

Figure 1: Waveform of Vowel “a”

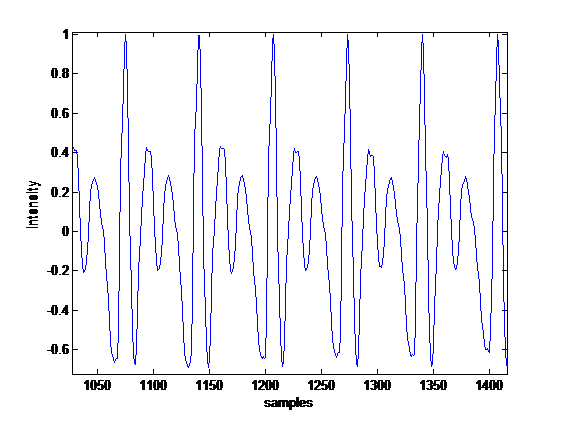

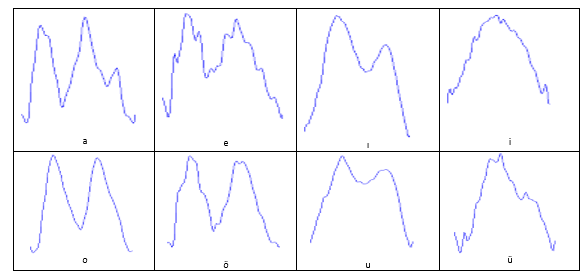

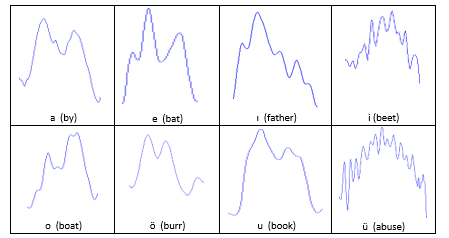

The vowel clearly has a characteristic waveform. On closer inspection, a repeating pattern can be seen in the waveform, as illustrated in Figure 2. The duration of each repetition is known as the pitch period. The pattern keeps repeating with slight perturbations until the intensity starts to die off. Focusing on an interval of one pitch period for each vowel yields the shapes illustrated in Figure 3; the vowels used in this paper come from a database [24]. As seen in Figure 3, each waveform generally differs from the others in appearance. A similar pattern can be observed for certain English vowels that sound like their Turkish counterparts. Figure 4 shows these vowels, taken from the words given in parentheses.
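The paper does not state how the pitch period is measured. As an illustration only, a common approach is to choose the lag that maximizes the autocorrelation of the voiced segment within a plausible pitch range. The helper name, the 75 to 400 Hz search range, and the 16 kHz sampling rate below are assumptions, not the authors' settings.

```python
import numpy as np

def estimate_pitch_period(x, fs, fmin=75.0, fmax=400.0):
    """Return the pitch period (in samples) of a voiced segment x sampled at fs Hz.

    Hypothetical helper: picks the autocorrelation peak between the lags that
    correspond to fmax and fmin. This is not the paper's (unstated) method.
    """
    x = np.asarray(x, dtype=float)
    x = x - x.mean()                                   # remove any DC offset
    ac = np.correlate(x, x, mode="full")[len(x) - 1:]  # autocorrelation at lags 0, 1, 2, ...
    lag_min = int(fs / fmax)
    lag_max = min(int(fs / fmin), len(x) - 1)
    return lag_min + int(np.argmax(ac[lag_min:lag_max + 1]))

# Usage: a synthetic "vowel" with a 200 Hz fundamental and two harmonics.
fs = 16000
t = np.arange(int(0.1 * fs)) / fs
x = (np.sin(2 * np.pi * 200 * t) + 0.5 * np.sin(2 * np.pi * 400 * t)
     + 0.3 * np.sin(2 * np.pi * 600 * t))
period = estimate_pitch_period(x, fs)  # 16000 / 200 = 80 samples
```

Restricting the search to a pitch range keeps the estimate from locking onto lag 0 or onto multiples of the true period.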

The argument of this study is that vowels can be identified by examining their waveform shapes as images. In other words, visual features extracted from waveform images can make vowel classification possible without spectral features such as MFCC, LPCC and/or PLP coefficients. From this point of view, the proposed technique contributes to the feature selection stage of speech processing. To this end, image processing and machine vision techniques are applied to the waveforms. The main novelty of this work lies in providing visual features for speech waveforms.

Figure 2: Repeating Patterns in Vowel “a”

Figure 3: Sample Pitch Period Plots for 8 Turkish Vowels

Figure 4: Sample Pitch Period Plots for 8 of the English Vowels

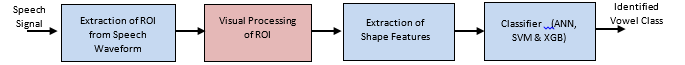

Figure 5: Proposed Method for Vowel Classification

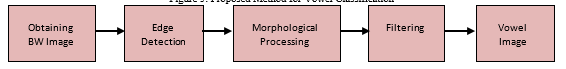

Figure 6: Operations in the “Visual Processing of ROI”

3. Speech Vision Methodology

The overall view of the proposed method is shown in Figure 5, and Figure 6 shows the operations carried out in the “Visual Processing of ROI” block. The method comprises four main parts: extraction of the Region of Interest (ROI), visual processing of the ROI, extraction of shape features, and the ANN/SVM/XGB part, in which the extracted features form the input vector that is fed into the previously trained model to obtain a classification result. The following subsections explain each block in detail.

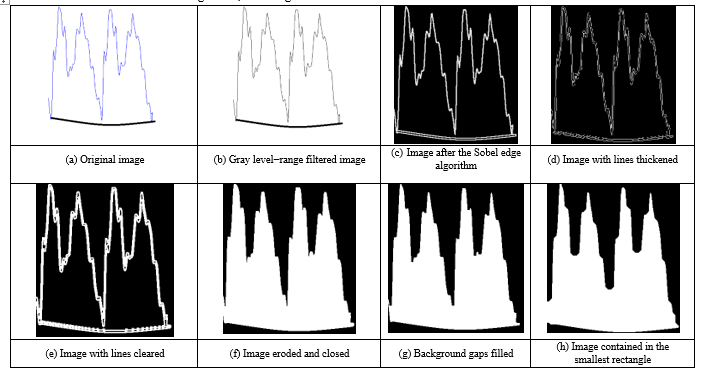

Figure 7: A Sample Closed Shape of Double Pitch Periods

3.1. Processing of region of interest

Figure 8: Steps for Image Operations

The input speech signals are segmented into waveform images two pitch periods long, as seen in Figure 7. Double pitch periods were chosen because, when single, double, and triple pitch periods were compared, two consecutive pitch periods gave the highest classification scores. As can be seen in Figure 8a, the image contains small jagged edges caused by noise and by the speaking style of the speaker. To make vowel recognition speaker independent, these rapid ups and downs should be removed. Hence, we apply a sequence of image processing operations that smooths these details and yields a more general appearance of the waveform.
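The paper does not specify how a two-pitch-period segment is turned into an image. A minimal sketch, assuming the pitch period and a start index are already known, cuts two periods and rasterizes them into a binary image with one column per sample. The function names, the image height, and the line-drawing scheme are illustrative assumptions.

```python
import numpy as np

def two_period_segment(signal, start, period):
    """Cut a segment spanning two consecutive pitch periods (period given in samples)."""
    return np.asarray(signal, dtype=float)[start:start + 2 * period]

def waveform_to_image(segment, height=64):
    """Rasterize a 1-D waveform into a boolean image (rows = amplitude, cols = time).

    Consecutive samples are joined by a vertical run of pixels so that the trace
    is connected, roughly like a plotted waveform. Hypothetical rendering scheme.
    """
    seg = np.asarray(segment, dtype=float)
    lo, hi = seg.min(), seg.max()
    scale = (height - 1) / (hi - lo) if hi > lo else 0.0
    rows = np.round((hi - seg) * scale).astype(int)   # row 0 holds the largest amplitude
    img = np.zeros((height, len(seg)), dtype=bool)
    for col, r in enumerate(rows):
        prev = rows[col - 1] if col > 0 else r
        top, bottom = min(prev, r), max(prev, r)
        img[top:bottom + 1, col] = True               # connect to the previous sample
    return img

# Usage with a synthetic periodic signal whose period is 80 samples.
n = np.arange(800)
signal = np.sin(2 * np.pi * n / 80) + 0.4 * np.sin(4 * np.pi * n / 80)
img = waveform_to_image(two_period_segment(signal, start=80, period=80))
```

Joining consecutive samples keeps the trace connected, which the later edge detection and morphological steps rely on.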

A selected waveform image to be processed is shown in Figure 8a. A range filter, which calculates the difference between the maximum and minimum gray values in the 3×3 neighborhood of each pixel, is applied to this gray-level image. The resulting image can be seen in Figure 8b. The edges of this image are then determined using the Sobel operator with a threshold value of 0.5.
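The range filter and Sobel step can be approximated with SciPy. This is a sketch, not the MATLAB code used by the authors: here the gradient magnitude is normalized to its maximum before the 0.5 threshold is applied, which is an assumption (MATLAB's `edge` function applies its own threshold scaling and edge thinning).

```python
import numpy as np
from scipy import ndimage as ndi

def range_filter(gray):
    """Maximum minus minimum gray value in the 3x3 neighborhood of every pixel."""
    gray = np.asarray(gray, dtype=float)
    return ndi.maximum_filter(gray, size=3) - ndi.minimum_filter(gray, size=3)

def sobel_edges(img, threshold=0.5):
    """Binary edge map: Sobel gradient magnitude, normalized to [0, 1], >= threshold."""
    img = np.asarray(img, dtype=float)
    magnitude = np.hypot(ndi.sobel(img, axis=1), ndi.sobel(img, axis=0))
    peak = magnitude.max()
    if peak > 0:
        magnitude = magnitude / peak   # assumed normalization before thresholding
    return magnitude >= threshold

# Usage: a vertical step from black (0) to white (1) between columns 3 and 4.
gray = np.zeros((8, 8))
gray[:, 4:] = 1.0
edges = sobel_edges(range_filter(gray))
```

The range filter turns the step into a two-pixel-wide ridge, and the Sobel operator then marks both flanks of that ridge.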

The resulting edge image is shown in Figure 8c. Next, we apply a morphological operation that thickens the lines, with the result given in Figure 8d. We then clear the edges and borders using a 4-connected neighborhood and obtain the image shown in Figure 8e. After this, the image is eroded and then closed using morphological closing, as shown in Figure 8f. Next, the gaps in the background are flood-filled, changing connected background pixels (0’s) to foreground pixels (1’s); the result is shown in Figure 8g. A final closing operation is applied to this image, and the result is enclosed in the smallest bounding rectangle, as depicted in Figure 8h. The morphological operations use line structuring elements of length 3 at angles of 0 and 90 degrees, a diamond of size 1, and a disk of size 10.
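The order of the operations in Figure 8 can be sketched with SciPy's binary morphology. The paper lists the structuring elements but not which step uses which, so the assignment below (lines for thickening, the diamond for erosion, the disk for both closings) and the border-clearing rule are assumptions. The disk is built directly and only approximates MATLAB's `strel('disk', 10)`; all function names are hypothetical.

```python
import numpy as np
from scipy import ndimage as ndi

def disk(radius):
    """Flat disk-shaped structuring element (approximates MATLAB strel('disk', r))."""
    y, x = np.ogrid[-radius:radius + 1, -radius:radius + 1]
    return x * x + y * y <= radius * radius

def padded_closing(img, se):
    """Binary closing with temporary zero padding so the frame does not clip the shape."""
    pad = max(se.shape)
    closed = ndi.binary_closing(np.pad(img, pad), structure=se)
    return closed[pad:-pad, pad:-pad]

def clear_border(img):
    """Remove 4-connected foreground components that touch the image border."""
    labels, _ = ndi.label(img)  # the default 2-D structure is 4-connectivity
    edge = np.concatenate([labels[0], labels[-1], labels[:, 0], labels[:, -1]])
    touching = np.unique(edge[edge > 0])
    return img & ~np.isin(labels, touching)

def shape_pipeline(edges):
    """Approximate steps (d) to (h) of Figure 8 on a binary edge image."""
    line_0 = np.ones((1, 3), dtype=bool)               # line, length 3, 0 degrees
    line_90 = np.ones((3, 1), dtype=bool)              # line, length 3, 90 degrees
    diamond = ndi.generate_binary_structure(2, 1)      # 3x3 cross = diamond of size 1
    img = ndi.binary_dilation(edges, structure=line_0)
    img = ndi.binary_dilation(img, structure=line_90)  # (d) thicken the lines
    img = clear_border(img)                            # (e) clear border-touching parts
    img = ndi.binary_erosion(img, structure=diamond)   # (f) erode ...
    img = padded_closing(img, disk(10))                #     ... and close
    img = ndi.binary_fill_holes(img)                   # (g) fill enclosed background
    img = padded_closing(img, disk(10))                # (h) final closing ...
    rows = np.flatnonzero(img.any(axis=1))
    cols = np.flatnonzero(img.any(axis=0))
    if rows.size == 0:
        return img
    return img[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]  # ... and bounding-box crop

# Usage: the closed outline of a 21 x 41 rectangle.
edges = np.zeros((60, 80), dtype=bool)
edges[10, 10:51] = edges[30, 10:51] = True
edges[10:31, 10] = edges[10:31, 50] = True
shape = shape_pipeline(edges)
```

Padding before each closing matters: SciPy's erosion treats pixels beyond the frame as background, which would otherwise nibble away shapes that come close to the image border.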

3.2. Extraction of shape features

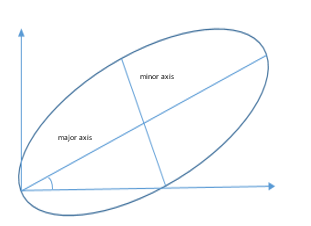

This section presents the geometric features that characterize the waveform image in Figure 8h. Since the aim is to analyze the rough shape rather than its details, the features are selected to represent the general silhouette of the waveform. The authors of [25] used ten features describing the general silhouettes of aircraft. In this study, we use these features together with the orientation angle as an additional feature. Table 2 lists these features. They are calculated using the regionprops function in MATLAB [26]. For features F1, F2, F6, and F11, the white region in Figure 8h, referred to as the image region, is approximated by an ellipse.

The features are described below.

Table 2: Features Obtained from the Processed Image

| Feature | Name of the feature |
|---|---|
| F1 | Major axis length |
| F2 | Minor axis length |
| F3 | Horizontal length |
| F4 | Vertical length |
| F5 | Perimeter |
| F6 | Eccentricity |
| F7 | Mean |
| F8 | Filled area |
| F9 | Image area |
| F10 | Background area |
| F11 | Orientation angle |

F1- Major axis length: the length of the longer axis of the image region in pixels. See Figure 9.

F2- Minor axis length: the length of the shorter axis of the image region in pixels. See Figure 9.

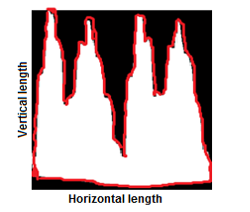

F3- Horizontal length: horizontal length of the image region in pixels. See Figure 10.

F4- Vertical length: vertical length of the image region in pixels. See Figure 10.

F5- Perimeter: perimeter of the image region in pixels, shown in red. See Figure 10.

F6- Eccentricity: a parameter of an ellipse indicating its deviation from circularity, ranging from 0 (circle) to 1 (line).

F7- Mean: the ratio of the total number of 1’s in the binary image to the total number of pixels.

F8- Filled area: the total number of white pixels in the image.

F9- Image area: estimated area of the object in the image region which is correlated with the filled area. The area is calculated by placing and moving a 2×2 mask on an image. Depending on the corresponding pixel values in the mask, the area is computed. For example, if all the pixels in the mask are black, then the area is zero. When all are white, then the area equals one. The other distributions of pixels in the mask result in area values between zero and one.

F10- Background area: estimated area of the black region in the image.

Figure 9: Major Axis, Minor Axis, and Orientation Angle

Figure 10: Horizontal and vertical lengths, and perimeter

F11- Orientation angle: the angle between the horizontal axis and the major axis of the ellipse approximating the image region. See Figure 9.
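A NumPy sketch of the eleven features follows, for readers without MATLAB. The ellipse quantities (F1, F2, F6, F11) use the normalized second central moments in the way MATLAB's regionprops documents them, as we understand it (including the 1/12 term for a unit pixel and a y axis pointing up). F9 and F10 use the 2×2 pattern weights documented for MATLAB's `bwarea`, which we assume is the area estimate described under F9. The perimeter is a simple boundary-pixel count, not regionprops' perimeter. All function and key names are hypothetical.

```python
import numpy as np

def bwarea_2x2(mask):
    """Area estimate from 2x2 pixel patterns (weights as documented for MATLAB bwarea)."""
    m = np.pad(np.asarray(mask, dtype=int), 1)
    a, b, c, d = m[:-1, :-1], m[:-1, 1:], m[1:, :-1], m[1:, 1:]
    n = a + b + c + d
    diagonal = (n == 2) & (a == d)          # the two "on" pixels lie on a diagonal
    weights = np.select(
        [n == 1, (n == 2) & ~diagonal, diagonal, n == 3, n == 4],
        [0.25, 0.5, 0.75, 0.875, 1.0], default=0.0)
    return float(weights.sum())

def boundary_pixel_count(mask):
    """Perimeter proxy: region pixels having at least one 4-neighbor outside the region."""
    m = np.pad(np.asarray(mask, dtype=bool), 1)
    interior = (m[1:-1, 1:-1] & m[:-2, 1:-1] & m[2:, 1:-1]
                & m[1:-1, :-2] & m[1:-1, 2:])
    return int(np.count_nonzero(m[1:-1, 1:-1] & ~interior))

def shape_features(mask):
    """Return F1..F11 for a binary image whose white pixels form the image region."""
    mask = np.asarray(mask, dtype=bool)
    rows, cols = np.nonzero(mask)
    x = cols - cols.mean()
    y = -(rows - rows.mean())               # y axis pointing up, as in a plot
    uxx = np.mean(x * x) + 1 / 12           # 1/12: second moment of a unit pixel
    uyy = np.mean(y * y) + 1 / 12
    uxy = np.mean(x * y)
    common = np.sqrt((uxx - uyy) ** 2 + 4 * uxy ** 2)
    major = 2 * np.sqrt(2) * np.sqrt(uxx + uyy + common)
    minor = 2 * np.sqrt(2) * np.sqrt(uxx + uyy - common)
    return {
        "F1_major_axis": float(major),
        "F2_minor_axis": float(minor),
        "F3_horizontal_length": int(cols.max() - cols.min() + 1),
        "F4_vertical_length": int(rows.max() - rows.min() + 1),
        "F5_perimeter": boundary_pixel_count(mask),
        "F6_eccentricity": float(np.sqrt(1 - (minor / major) ** 2)),
        "F7_mean": float(mask.mean()),
        "F8_filled_area": int(mask.sum()),
        "F9_image_area": bwarea_2x2(mask),
        "F10_background_area": bwarea_2x2(~mask),
        "F11_orientation_deg": float(np.degrees(0.5 * np.arctan2(2 * uxy, uxx - uyy))),
    }

# Usage: a 4 x 12 white rectangle inside a 6 x 14 black image.
mask = np.zeros((6, 14), dtype=bool)
mask[1:5, 1:13] = True
features = shape_features(mask)
```

For a solid rectangle the 2×2 estimate (F9) equals the pixel count (F8); the two differ on ragged or diagonal boundaries, which is why both can carry information.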

All the features describe spatial domain properties of the underlying image. At the same time, these images are time domain representations of the speech signals, so classifying the images corresponds to recognizing the speech sounds. As our experiments show, adopting such simple features in speech recognition leads to promising results.

3.3. Classifiers

A general description of the employed classifiers is given here. We used three widely used classifiers: ANN, SVM, and XGB. They are established tools for pattern recognition applications, and all of them can classify nonlinearly distributed input patterns into target classes. The classifiers are trained using the features in Table 2.

When a vowel is produced, the positions of the mouth, tongue, and lips are the key factors. The dotted and non-dotted (front and back) vowels in Turkish are quite similar, in that only the position of the tongue changes between them. Out of the eight Turkish vowels, five vowel classes are formed in this study by combining ‘dotted’ vowels with their non-dotted counterparts. The combined pairs are ‘ı’ and ‘i’, ‘o’ and ‘ö’, and ‘u’ and ‘ü’, while the vowels ‘a’ and ‘e’ are treated as separate classes. These five vowel classes are the outputs of the classifiers.

Following parameter optimization, the ANN is constructed as a multi-layer feed-forward network with 11 inputs, 5 outputs, and 2 hidden layers of 22 and 13 neurons, respectively. The hyperbolic tangent is chosen as the activation function. The network is trained with the back-propagation algorithm.
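The authors trained the network in NeuroSolutions. To make the 11-22-13-5 architecture concrete, a minimal NumPy sketch of a tanh network trained by back-propagation follows. The softmax output layer with cross-entropy loss, full-batch gradient descent, the learning rate, the epoch count, and the synthetic data are all assumptions, not the authors' settings.

```python
import numpy as np

class TanhMLP:
    """Feed-forward net (default 11-22-13-5) with tanh hidden layers and a softmax output."""

    def __init__(self, sizes=(11, 22, 13, 5), seed=0):
        rng = np.random.default_rng(seed)
        self.W = [rng.normal(0.0, 1.0 / np.sqrt(m), (m, n))
                  for m, n in zip(sizes[:-1], sizes[1:])]
        self.b = [np.zeros(n) for n in sizes[1:]]

    def _forward(self, X):
        acts = [X]
        for i, (W, b) in enumerate(zip(self.W, self.b)):
            z = acts[-1] @ W + b
            if i < len(self.W) - 1:
                acts.append(np.tanh(z))                        # hidden layer
            else:
                e = np.exp(z - z.max(axis=1, keepdims=True))
                acts.append(e / e.sum(axis=1, keepdims=True))  # softmax output
        return acts

    def predict(self, X):
        return self._forward(X)[-1].argmax(axis=1)

    def fit(self, X, y, epochs=500, lr=0.1):
        """Full-batch back-propagation on the mean cross-entropy; returns the loss curve."""
        Y = np.eye(self.W[-1].shape[1])[y]                     # one-hot targets
        losses = []
        for _ in range(epochs):
            acts = self._forward(X)
            P = acts[-1]
            losses.append(float(-np.mean(np.sum(Y * np.log(P + 1e-12), axis=1))))
            delta = (P - Y) / len(X)                           # gradient at output pre-activation
            for i in reversed(range(len(self.W))):
                grad_W = acts[i].T @ delta
                grad_b = delta.sum(axis=0)
                if i > 0:                                      # propagate before updating W[i]
                    delta = (delta @ self.W[i].T) * (1.0 - acts[i] ** 2)  # tanh derivative
                self.W[i] -= lr * grad_W
                self.b[i] -= lr * grad_b
        return losses

# Usage on synthetic, well-separated data: 5 classes x 20 samples, 11 features.
rng = np.random.default_rng(1)
y = np.repeat(np.arange(5), 20)
X = rng.normal(0.0, 3.0, (5, 11))[y] + rng.normal(0.0, 0.5, (100, 11))
X = (X - X.mean(axis=0)) / X.std(axis=0)                       # standardize the features
net = TanhMLP()
losses = net.fit(X, y)
train_accuracy = float(np.mean(net.predict(X) == y))
```

The error signal for a hidden layer is computed before that layer's incoming weights are updated, so every gradient in an epoch refers to the same set of weights.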

As another classifier, an SVM is implemented using the kernel Adatron algorithm, which optimally separates the data into their respective classes by isolating the inputs that fall close to the class boundaries. Hence, the kernel Adatron is especially effective in separating data sets that share complex boundaries. Gaussian kernel functions are used in this study.
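A minimal NumPy sketch of a kernel Adatron with a Gaussian kernel follows. It assumes the simplest bias-free variant, in which each multiplier is updated by a step toward unit margin and clipped at zero, and a one-vs-rest scheme for multiple classes. NeuroSolutions' implementation details, the kernel width, and the step size are not given in the paper, so these choices and all names are assumptions.

```python
import numpy as np

def gaussian_kernel(A, B, sigma=1.0):
    """K[i, j] = exp(-||A_i - B_j||^2 / (2 sigma^2))."""
    sq = (np.sum(A ** 2, axis=1)[:, None] + np.sum(B ** 2, axis=1)[None, :]
          - 2.0 * A @ B.T)
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma ** 2))

def kernel_adatron(K, y, eta=0.1, epochs=100):
    """Binary kernel Adatron without bias. y holds +1/-1 labels; returns multipliers alpha."""
    alpha = np.zeros(len(y))
    for _ in range(epochs):
        for i in range(len(y)):
            z = np.sum(alpha * y * K[i])                  # current output for sample i
            alpha[i] = max(0.0, alpha[i] + eta * (1.0 - y[i] * z))
    return alpha

class OneVsRestAdatron:
    def __init__(self, sigma=1.0, eta=0.1, epochs=100):
        self.sigma, self.eta, self.epochs = sigma, eta, epochs

    def fit(self, X, labels):
        self.X = np.asarray(X, dtype=float)
        self.classes = np.unique(labels)
        K = gaussian_kernel(self.X, self.X, self.sigma)
        coef = []
        for c in self.classes:                            # one binary problem per class
            y = np.where(labels == c, 1.0, -1.0)
            coef.append(kernel_adatron(K, y, self.eta, self.epochs) * y)
        self.coef = np.array(coef)                        # shape (n_classes, n_train)
        return self

    def decision_function(self, X):
        return gaussian_kernel(np.asarray(X, dtype=float), self.X, self.sigma) @ self.coef.T

    def predict(self, X):
        return self.classes[np.argmax(self.decision_function(X), axis=1)]

# Usage: three well-separated 2-D clusters.
rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [5.0, 0.0], [0.0, 5.0]])
labels = np.repeat(np.arange(3), 15)
X = centers[labels] + rng.normal(0.0, 0.3, (45, 2))
model = OneVsRestAdatron().fit(X, labels)
```

Samples that end up with a zero multiplier do not contribute to the decision function; the nonzero ones play the role of support vectors near the class boundaries.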

As a third classifier, a decision tree-based XGB method is used. Again, following a parameter optimization, a multi-class XGB model is employed with 89 booster trees having a maximum depth of three, whilst default values are used for the rest of the parameters.

4. Tests and Results

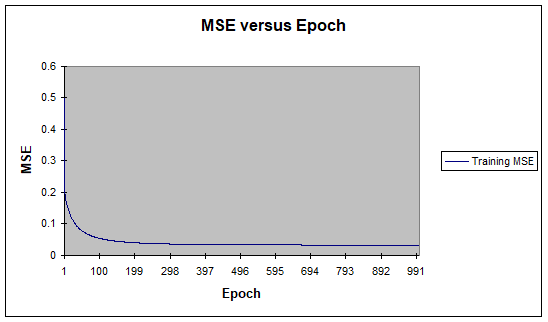

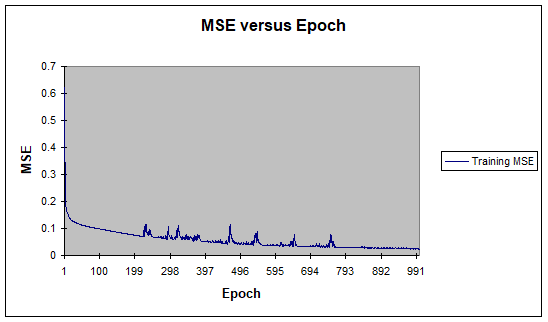

For the design of experiments, 551 vowel samples are used, consisting of 100, 88, 90, 76, and 197 samples for Class 1 through Class 5, respectively. The vowels are parsed from the diphone database developed in [24]. Noisy conditions are not considered, because we aim to classify ideally shaped waveforms for applications that differ from those of classical voice recognition techniques. The data are randomized to achieve a fair distribution; 80% are used for training, 15% for testing, and the remaining 5% for cross validation. The ANN and SVM are trained until their performance on the validation set no longer improves. The NeuroSolutions software is used for this process [27]. As an example, Figure 11 shows how the mean squared training error of the SVM and ANN changes during training. Python is used for XGB modeling and training [28].
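The 80/15/5 random split can be sketched as below. The rounding rule and the random seed are assumptions, and the split is not stratified by class, since the paper only states that the data are randomized.

```python
import numpy as np

def random_split(n, fractions=(0.80, 0.15, 0.05), seed=0):
    """Shuffle indices 0..n-1 and cut them into train/test/validation parts."""
    idx = np.random.default_rng(seed).permutation(n)
    n_train = int(round(fractions[0] * n))
    n_test = int(round(fractions[1] * n))
    return idx[:n_train], idx[n_train:n_train + n_test], idx[n_train + n_test:]

# Class sizes from the text: 100, 88, 90, 76 and 197 samples for Classes 1 to 5.
labels = np.repeat(np.arange(1, 6), [100, 88, 90, 76, 197])
train, test, val = random_split(len(labels))
print(len(labels), len(train), len(test), len(val))  # 551 441 83 27
```

With only 5% of 551 samples (27 here), the validation set is small, so early-stopping decisions based on it can be noisy.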

A statistical error and R-value analysis is performed to compare the outputs of the trained models with the desired outputs, indicating whether the estimations succeed. The results appear in Table 3 and Table 4 for the training and test sets, respectively. The tables show that ANN and XGB outperform SVM on almost all criteria, with XGB slightly ahead. ANN performs very well on all vowel classes except Class 4, i.e., the Turkish vowels ‘o’ and ‘ö’, whereas XGB reaches at least 80% sensitivity on all classes. In Classes 1 and 3, all classifiers achieve 100% correct classification.

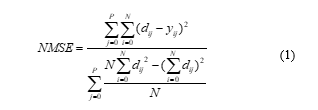

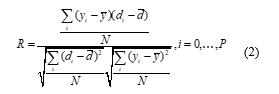

In both tables, MSE is the mean squared error, NMSE is the normalized mean squared error, and R is the linear correlation coefficient. NMSE is calculated as follows:

$$\mathrm{NMSE} = \frac{\mathrm{MSE}}{\frac{1}{P}\sum_{j=1}^{P}\left[\frac{1}{N}\sum_{i=1}^{N} d_{ij}^{2} - \left(\frac{1}{N}\sum_{i=1}^{N} d_{ij}\right)^{2}\right]}, \qquad \mathrm{MSE} = \frac{1}{NP}\sum_{j=1}^{P}\sum_{i=1}^{N}\left(d_{ij} - y_{ij}\right)^{2}$$

where $P$ is the number of output processing elements (neurons), $N$ is the number of exemplars in the data set, $y_{ij}$ is the network output for exemplar $i$ at processing element $j$, and $d_{ij}$ is the desired output for exemplar $i$ at processing element $j$. Since NMSE is an error term, values closer to zero denote better predictability. MSE is simply the numerator of NMSE; the denominator is the variance of the desired outputs, averaged over the processing elements.
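Both error measures can be computed directly from the quantities defined above: NMSE divides the MSE by the variance of the desired outputs, averaged over the P output elements. In this NumPy sketch the arrays `d` and `y` hold the desired and actual outputs with shape (N, P); the function name is hypothetical. A constant prediction equal to the mean of the desired outputs gives NMSE = 1, which is a convenient reference point.

```python
import numpy as np

def mse_nmse(d, y):
    """MSE and NMSE for desired outputs d and network outputs y, both of shape (N, P)."""
    d = np.asarray(d, dtype=float)
    y = np.asarray(y, dtype=float)
    N, P = d.shape
    mse = np.sum((d - y) ** 2) / (N * P)
    # Variance of the desired values for each output element: mean(d^2) - mean(d)^2.
    variances = np.mean(d ** 2, axis=0) - np.mean(d, axis=0) ** 2
    nmse = mse / np.mean(variances)
    return mse, nmse

# One output (P = 1) and four exemplars, one of which belongs to the class.
d = np.array([[1.0], [0.0], [0.0], [0.0]])
mse, nmse = mse_nmse(d, np.full_like(d, 0.25))  # predict the mean of d everywhere
```

An NMSE well below 1 therefore means the model explains much more than a constant guess would.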

Figure 11: Mean Squared Error during (a) SVM and (b) ANN Training

Another statistically meaningful measure of predictive performance is the correlation coefficient R. It measures how well one variable fits another in the sense of linear regression; in our case, the predicted outputs are compared against the desired outputs. The R value is defined as:

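$$R = \frac{\frac{1}{N}\sum_{i=1}^{N}\left(y_i - \bar{y}\right)\left(d_i - \bar{d}\right)}{\sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(d_i - \bar{d}\right)^{2}}\,\sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(y_i - \bar{y}\right)^{2}}} \tag{4}$$

where $y_i$ is the network output and $d_i$ is the desired output for exemplar $i$, and $\bar{y}$ and $\bar{d}$ are their means.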

Table 4: Statistical Parameter Analysis and Comparison over Test Sets

| Class | Classifier | MSE | NMSE | R | Sensitivity (%) |
|---|---|---|---|---|---|
| Class 1 (A) | SVM | 0.0205 | 0.1398 | 0.94 | 100 |
| Class 1 (A) | ANN | 0.0006 | 0.0041 | 0.99836 | 100 |
| Class 1 (A) | XGB | 0.0005 | 0.005 | 0.9993 | 100 |
| Class 2 (E) | SVM | 0.0987 | 0.78047 | 0.62122 | 54.17 |
| Class 2 (E) | ANN | 0.04529 | 0.35815 | 0.83046 | 73.68 |
| Class 2 (E) | XGB | 0.0095 | 0.089 | 0.9172 | 93.33 |
| Class 3 (I-İ) | SVM | 0.01309 | 0.08244 | 0.95904 | 100 |
| Class 3 (I-İ) | ANN | 0.0004 | 0.00255 | 0.99895 | 100 |
| Class 3 (I-İ) | XGB | 0.0006 | 0.0034 | 0.9808 | 100 |
| Class 4 (O-Ö) | SVM | 0.07955 | 0.62906 | 0.61131 | 80 |
| Class 4 (O-Ö) | ANN | 0.04946 | 0.39109 | 0.78621 | 84.62 |
| Class 4 (O-Ö) | XGB | 0.031 | 0.3824 | 0.8086 | 80 |
| Class 5 (U-Ü) | SVM | 0.1074 | 0.48823 | 0.71667 | 86.21 |
| Class 5 (U-Ü) | ANN | 0.0519 | 0.2359 | 0.8796 | 93.55 |
| Class 5 (U-Ü) | XGB | 0.063 | 0.2628 | 0.8781 | 87.88 |

The mean squared error (MSE) can be used to determine how well the network output fits the desired output, but it does not necessarily reflect whether the two sets of data move in the same direction. For instance, simply scaling the network output changes the MSE without changing the directionality of the data. The correlation coefficient R solves this problem. The correlation coefficient between a network output y and a desired output d is defined by Eq. (4) and is limited to the range [-1, 1]. When R = 1, there is a perfect positive linear correlation between y and d, i.e., they increase and decrease together. When R = -1, there is a perfect negative linear correlation between y and d, i.e., they vary in opposite directions (when y increases, d decreases linearly). When R = 0, there is no linear correlation between y and d, i.e., the variables are uncorrelated. Intermediate values describe partial correlations.
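The scaling argument can be checked numerically. This sketch implements Eq. (4) (the 1/N factors cancel, so plain sums suffice) and shows that halving the outputs changes the MSE but leaves R unchanged; the sample values are made up for illustration and the function name is hypothetical.

```python
import numpy as np

def correlation_r(y, d):
    """Linear correlation coefficient between network outputs y and desired outputs d."""
    y = np.asarray(y, dtype=float)
    d = np.asarray(d, dtype=float)
    yc, dc = y - y.mean(), d - d.mean()
    return float(np.sum(yc * dc) / np.sqrt(np.sum(yc ** 2) * np.sum(dc ** 2)))

d = np.array([1.0, 0.0, 0.0, 1.0, 0.0])
y = np.array([0.9, 0.2, 0.1, 0.7, 0.3])
mse_full = np.mean((y - d) ** 2)
mse_half = np.mean((0.5 * y - d) ** 2)                            # scaling changes the MSE ...
r_full, r_half = correlation_r(y, d), correlation_r(0.5 * y, d)   # ... but not R
```

Reporting both measures therefore separates errors in magnitude (MSE, NMSE) from errors in direction (R).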

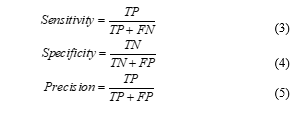

We evaluate the performance of all classifiers in terms of sensitivity, specificity, accuracy, and precision. These are statistical measures for classification, and values close or equal to 100% are desirable. They are computed from the true positive (TP), true negative (TN), false positive (FP) and false negative (FN) counts, defined as follows:

TP: Number of cases belonging to a certain class that are correctly classified as that class.

TN: Number of cases not belonging to a certain class that are correctly classified as not belonging to it.

FP: Number of cases not belonging to a certain class that are incorrectly classified as that class.

FN: Number of cases belonging to a certain class that are incorrectly classified as another class.

These parameters are calculated by the following equations:

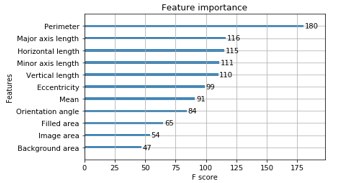
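All four measures follow from per-class counts in a confusion matrix, using the standard definitions sensitivity = TP/(TP+FN), specificity = TN/(TN+FP), accuracy = (TP+TN)/(TP+TN+FP+FN) and precision = TP/(TP+FP). This NumPy sketch assumes that rows of the matrix are true classes and columns are predicted classes, a convention chosen here rather than stated in the paper; a class that is never predicted would make its precision undefined (division by zero).

```python
import numpy as np

def per_class_metrics(cm):
    """Sensitivity, specificity, accuracy and precision (%) for each class.

    cm[i, j] = number of samples of true class i predicted as class j.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp       # class members assigned to other classes
    fp = cm.sum(axis=0) - tp       # non-members assigned to the class
    tn = cm.sum() - tp - fn - fp
    return {
        "sensitivity": 100 * tp / (tp + fn),
        "specificity": 100 * tn / (tn + fp),
        "accuracy": 100 * (tp + tn) / (tp + tn + fp + fn),
        "precision": 100 * tp / (tp + fp),
    }

# Two-class toy example: 8 of 10 class-0 samples and 9 of 10 class-1 samples are correct.
metrics = per_class_metrics([[8, 2], [1, 9]])
```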

Table 5 shows the performance of the classifiers using the proposed features in terms of sensitivity, specificity, accuracy and precision. The performance of each classifier indicates that the visual features can be successfully employed in vowel classification, with the ANN and XGB classifiers performing better than the SVM. Since the XGB method is decision-tree based rather than a black box, it is possible to see which features are most useful in the model, as shown in Figure 12. This importance score reflects how useful each feature is for the splits made while the boosted decision tree model is built: the more a feature is used in split decisions, the higher its score. The overall score for a feature is calculated as the average of its scores across all decision trees of the model.
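The bookkeeping behind such importance scores can be illustrated in plain Python. The sketch assumes each tree is summarized as a list of (feature, gain) records, one per split, which is a hypothetical input format. It reports how often each feature is used for splitting and its average gain, analogous to the "weight" and "gain" importance types documented for XGBoost; note that XGBoost averages the gain over splits, whereas the text above describes averaging across trees.

```python
from collections import defaultdict

def split_importance(trees):
    """trees: list of trees, each a list of (feature_name, gain) pairs, one per split.

    Returns (split_count, average_gain) dictionaries keyed by feature name.
    """
    count = defaultdict(int)
    total_gain = defaultdict(float)
    for tree in trees:
        for feature, gain in tree:
            count[feature] += 1           # more splits -> higher "weight" score
            total_gain[feature] += gain
    average_gain = {f: total_gain[f] / count[f] for f in count}
    return dict(count), average_gain

# Hypothetical split records from two small trees.
trees = [[("F8", 4.0), ("F1", 2.0)], [("F8", 2.0), ("F6", 1.0)]]
counts, gains = split_importance(trees)
```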

Figure 12: Feature Importances for the XGB Model

To show the effectiveness of the proposed visual features, the same vowel classes are also classified using MFCCs, which are commonly used in speech recognition. Table 6 lists the sensitivity results obtained with the proposed and MFCC features for all three classifiers. The table also includes the classification performance of the study in [7], which classifies Turkish vowels by MFCCs using an ANN.

It is fair to say that the proposed SV method yields better results, on average, on all classes with the exception of Class 2(E). It is observed that unlike the case of visual features, when MFCCs are used SVM performs slightly better than ANN and XGB.

Table 6: Comparison of Methods Using MFCCs and Proposed Visual Features (sensitivity, %)

| Method | Class 1 (A) | Class 2 (E) | Class 3 (I-İ) | Class 4 (O-Ö) | Class 5 (U-Ü) |
|---|---|---|---|---|---|
| ANN (proposed visual features) | 100 | 73.68 | 100 | 84.62 | 93.55 |
| SVM (proposed visual features) | 100 | 54.17 | 100 | 80 | 86.21 |
| XGB (proposed visual features) | 100 | 93.33 | 100 | 80 | 87.88 |
| ANN (MFCC) | 83.33 | 80 | 66.67 | 57.14 | 63.63 |
| SVM (MFCC) | 85.71 | 80 | 77.78 | 66.67 | 60 |
| XGB (MFCC) | 50 | 70 | 60 | 60 | 90 |
| ANN Method in [7] (MFCC) | 88 | 81 | 76 | 78 | 81 |

Table 7: Comparison of Various Vowel Classification Studies

| Ref. No | Language | Input Features | Classifier | Performance (%) |
|---|---|---|---|---|
| 7 | Turkish | MFCC | ANN | 80.8 |
| 29 | Australian English | Frequency Energy Levels | Gaussian & ANN | 88.6 |
| 30 | English | MFCC | SVM | 72.34 |
| 31 | English | Formant frequencies | ANN | 70.5 |
| 32 | English | Formant frequencies | ANN | 70.53 |
| 33 | English | Tongue and lip movements | SVM | 85.42 |
| 34 | Hindi | MFCC | HMM | 91.42 |
| 35 | Hindi | Gammatone Cepstral Coefficients + MFCC + Formants | HMM | 91.16 |
| 36 | Hindi | Power Normalized Cepstral Coefficients | HMM | 88.46 |
| Speech Vision (SV) | Turkish | Time Domain Visual Features | ANN | 90.37 |
| Speech Vision (SV) | Turkish | Time Domain Visual Features | SVM | 84.08 |
| Speech Vision (SV) | Turkish | Time Domain Visual Features | XGB | 92.24 |

4.1. Comparison with Relevant Studies

To evaluate the performance of the SV approach more objectively, a literature search on vowel classification performance was also carried out. Table 6 already gives a brief comparison with another study [7]; however, that study also deals with Turkish vowels, whereas here we also examine the vowel classification success rates reported for other languages. It should be pointed out that this indirect comparison is only meant to give a rough idea of where the SV approach stands among vowel classification results in general.

Harrington and Cassidy conducted a study on vowel classification in Australian English, using frequency energy levels with Gaussian and ANN classifiers [29]. Indeed, there are a number of studies classifying English vowels with SVM and ANN classifiers using MFCC and Formant Frequencies [30, 31, 32]. Another study was also conducted using tongue and lip movements to classify English vowels with SVM [33]. In addition, there are a few studies on vowel classification in the Hindi language using various frequency domain features and employing Hidden Markov Model (HMM) classifiers [34, 35, 36]. A comparison of these various studies with our SV approach, in terms of sensitivity, is given in Table 7.
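The SV entries in Table 7 appear to be the unweighted means of the five per-class sensitivities in Table 6, and the same holds for the 80.8% listed for [7]. The arithmetic can be checked as follows; the dictionary simply copies the Table 6 values.

```python
SENSITIVITY = {  # per-class sensitivities (%) for Classes 1 to 5, copied from Table 6
    "SV + ANN": [100, 73.68, 100, 84.62, 93.55],
    "SV + SVM": [100, 54.17, 100, 80, 86.21],
    "SV + XGB": [100, 93.33, 100, 80, 87.88],
    "ANN in [7] (MFCC)": [88, 81, 76, 78, 81],
}

# Unweighted (macro) average over the five classes, rounded like Table 7.
macro_average = {name: round(sum(v) / len(v), 2) for name, v in SENSITIVITY.items()}
for name, value in macro_average.items():
    print(f"{name}: {value}")
# Printed values: 90.37, 84.08, 92.24 and 80.8, matching Table 7.
```

Because the mean is unweighted, the small Class 4 (76 samples) counts as much as the large Class 5 (197 samples).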

5. Conclusion and Discussion

This paper describes a novel approach that introduces visual features for classifying vowels. The proposed approach uses geometric features obtained from speech waveform shapes. Shape-based features of speech signals have rarely been employed for speech recognition; the widely used features are usually in a transform domain, e.g., spectrograms, and techniques using spectrograms involve computational costs due to Fourier transform calculations. In our approach, a recorded speech waveform two pitch periods long is treated as an image and processed with the image processing techniques described above. The visual features are then obtained from the processed waveform image. Finally, ANN, SVM, and XGB classifiers are trained on the vowels to be classified. The test results show that the visual features achieve quite satisfactory performance.

Compared with the success rates of classical speech features, our speech vision approach shows promising performance. As Table 7 shows, the XGB classifier achieves the highest performance among all the compared studies, slightly above 92%. In addition, our ANN and SVM classifiers achieve scores that are better than or comparable to the others. The proposed visual features therefore appear to work well for Turkish vowel classification.

These features can be used in applications where a visual representation makes a difference, such as teaching hearing-impaired individuals to speak. Although we applied the proposed features to Turkish vowels, the method could readily be adapted to other languages, since vowels share similar time-domain characteristics across languages. Combining acoustic and visual features for vowel classification is a direction for future work.

References

- M. Benzeghiba et al., “Automatic speech recognition and speech variability: A review,” Speech Communication, 49(10), pp. 763-786, 2007.

- B. Prica and S. Ilić, “Recognition of vowels in continuous speech by using formants,” Facta Universitatis, Series: Electronics and Energetics, 23(3), pp. 379-393, 2010.

- S. Phitakwinai, H. Sawada, S. Auephanwiriyakul and N. Theera-Umpon, “Japanese Vowel Sound Classification Using Fuzzy Inference System,” Journal of the Korea Convergence Society, 5(1), pp. 35-41, 2011.

- H. Hermansky, “Perceptual linear predictive (PLP) analysis of speech,” Journal of the Acoustical Society of America, 87(4), pp. 1738-1752, 1990.

- N. Theera-Umpon, C. Suppakarn and A. Sansanee, “Phoneme and tonal accent recognition for Thai speech,” Expert Systems with Applications, 38(10), pp. 13254-13259, 2011.

- H. Huang et al., “Phone classification via manifold learning based dimensionality reduction algorithms,” Speech Communication, 76, pp. 28-41, 2016.

- O. Parlaktuna et al., “Vowel and consonant recognition in Turkish using neural networks toward continuous speech recognition,” in Proceedings of the 7th Mediterranean Electrotechnical Conference, IEEE, 1994.

- C. Vaz, A. Tsiartas and S. Narayanan, “Energy-constrained minimum variance response filter for robust vowel spectral estimation,” in 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 6275-6279, 2014.

- M. Cutajar et al., “Discrete wavelet transforms with multiclass SVM for phoneme recognition,” in IEEE EUROCON 2013, pp. 1695-1700, 2013.

- G. S. Sivaram and H. Hermansky, “Sparse multilayer perceptron for phoneme recognition,” IEEE Transactions on Audio, Speech, and Language Processing, 20(1), pp. 23-29, 2012.

- P. Thaine and G. Penn, “Vowel and Consonant Classification through Spectral Decomposition,” in Proceedings of the First Workshop on Subword and Character Level Models in NLP, pp. 82-91, 2017.

- M. T. Johnson et al., “Time-domain isolated phoneme classification using reconstructed phase spaces,” IEEE Transactions on Speech and Audio Processing, 13(4), pp. 458-466, 2005.

- J. Manikandan, B. Venkataramani, P. Preeti, G. Sananda and K. V. Sadhana, “Implementation of a phoneme recognition system using zero-crossing and magnitude sum function,” in TENCON 2009, IEEE Region 10 Conference, pp. 1-5, 2009.

- T. J. Lee and K. B. Sim, “Vowel classification of imagined speech in an electroencephalogram using the deep belief network,” Journal of Institute of Control, Robotics and Systems, 21(1), pp. 59-64, 2015.

- K. I. Han, H. J. Park and K. M. Lee, “Speech recognition and lip shape feature extraction for English vowel pronunciation of the hearing-impaired based on SVM technique,” in 2016 International Conference on Big Data and Smart Computing (BigComp), IEEE, pp. 293-296, 2016.

- T. Matsui et al., “Gradient-based musical feature extraction based on scale-invariant feature transform,” in 19th European Signal Processing Conference, IEEE, pp. 724-728, 2011.

- J. Dennis, H. D. Tran and H. Li, “Spectrogram image feature for sound event classification in mismatched conditions,” IEEE Signal Processing Letters, 18(2), pp. 130-133, 2011.

- K. T. Schutte, “Parts-based models and local features for automatic speech recognition,” PhD Thesis, Massachusetts Institute of Technology, 2009.

- J. Dennis, H. D. Tran and E. S. Chng, “Overlapping sound event recognition using local spectrogram features and the generalised hough transform,” Pattern Recognition Letters, 34(9), pp. 1085-1093, 2013.

- J. Dulas, “Speech Recognition Based on the Grid Method and Image Similarity,” INTECH Open Access Publisher, 2011.

- J. Dulas, “Automatic word’s identification algorithm used for digits classification,” Przegląd Elektrotechniczny, 87, pp. 230-233, 2011.

- J. Dulas, “The new method of the inter-phonemes transitions finding,” Przegląd Elektrotechniczny, 88(10a), pp. 135-138, 2012.

- İ. Ergenç, Spoken Language and Dictionary of Turkish Articulation, Multilingual, 2002.

- Ö. Salor, B. L. Pellom, T. Ciloglu, K. Hacioglu and M. Demirekler, “On developing new text and audio corpora and speech recognition tools for the Turkish language,” in INTERSPEECH, 2002.

- A. G. Karacor, E. Torun and R. Abay, “Aircraft Classification Using Image Processing Techniques and Artificial Neural Networks,” International Journal of Pattern Recognition and Artificial Intelligence, 25(8), pp. 1321-1335, 2011.

- MATLAB, The MathWorks Inc., 1984-2013, www.mathworks.com.

- NeuroSolutions, NeuroDimension Inc., 1994-2015, www.neurosolutions.com.

- G. van Rossum, Python tutorial, Technical Report CS-R9526, Centrum voor Wiskunde en Informatica (CWI), 1995.

- J. Harrington and S. Cassidy, “Dynamic and target theories of vowel classification: Evidence from monophthongs and diphthongs in Australian English,” Language and Speech, 37(4), pp. 357-373, 1994.

- P. Clarkson and P. J. Moreno, “On the use of support vector machines for phonetic classification,” in Proceedings of the 1999 IEEE International Conference on Acoustics, Speech, and Signal Processing, pp. 585-588, 1999.

- R. Carlson and J. R. Glass, “Vowel classification based on analysis-by-synthesis,” in ICSLP, 1992.

- P. Schmid and E. Barnard, “Explicit, n-best formant features for vowel classification,” in Proceedings of the 1997 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP-97), pp. 991-994, 1997.

- J. Wang, J. R. Green and A. Samal, “Individual articulator’s contribution to phoneme production,” in 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 7785-7789, 2013.

- S. Mishra, A. Bhowmick and M. C. Shrotriya, “Hindi vowel classification using QCN-MFCC features,” Perspectives in Science, 8, pp. 28-31, 2016.

- A. Biswas, P. K. Sahu, A. Bhowmick and M. Chandra, “Hindi vowel classification using GFCC and formant analysis in sensor mismatch condition,” WSEAS Transactions on Systems, 13, pp. 130-143, 2014.

- M. Chandra, “Hindi Vowel Classification using QCN-PNCC Features,” Indian Journal of Science and Technology, 9(38), 2016.


- Maxwell Oppong Afriyie, Obour Agyekum Kwame Opuni-Boachie, Affum Emmanuel Ampoma, "Channel Inversion Schemes with Compensation Network for Two-Element Compact Array in Multi-User MIMO", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 4, pp. 26–31, 2017. doi: 10.25046/aj020404

- Indranil Nath, "A Derived Metrics as a Measurement to Support Efficient Requirements Analysis and Release Management", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 3, pp. 36–40, 2017. doi: 10.25046/aj020306

- Lubna s. Ben Taher, "Evaluation of Three Evaporation Estimation Techniques In A Semi-Arid Region (Omar El Mukhtar Reservoir Sluge, Libya- As a case Study)", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 2, pp. 19–29, 2017. doi: 10.25046/aj020204

- R. Manju Parkavi, M. Shanthi, M.C. Bhuvaneshwari, "Recent Trends in ELM and MLELM: A review", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 1, pp. 69–75, 2017. doi: 10.25046/aj020108