Robot-Assisted Posture Emulation for Visually Impaired Children

Volume 4, Issue 1, Page No 193-199, 2019

Author’s Name: Fang-Lin Chao1,a), Hung-Chi Chu2, Liza Lee3


1Department of Industrial Design, Chaoyang University of Technology, 436, Taiwan R.O.C.

2Department of Information and Communication Engineering, 3Department of Early Childhood Development & Education, Chaoyang University of Technology, 436, Taiwan R.O.C.

a)Author to whom correspondence should be addressed. E-mail: flin@cyut.edu.tw

Adv. Sci. Technol. Eng. Syst. J. 4(1), 193-199 (2019); DOI: 10.25046/aj040119

Keywords: Posture movement, Small robot, Touching, Visually impaired


This study proposes robot-assisted posture emulation for visually impaired children. The low-torque motors of a small robot can be controlled by the strength of a palm, so a user does not risk injury when touching the robotic hand directly. The body proportions of a commercially available small robot differ from those of a person, so we extended the upper arm to make the movements of the upper and lower limbs easier to distinguish. Adults and children were asked to perceive the robot’s movements through touch and imitate its actions. The study demonstrated that visually impaired subjects enjoyed playing with the robot and that the frequency of body movements increased under robot-assisted guidance. The majority of the children could identify the main posture and imitate it. Scores for continuous movement were moderate. A stand-alone concept design was proposed that presents the main actions of the upper body and prevents the body from tipping over when touched. Main torso and hand movements can reveal the body language of most users.

Received: 20 October 2018, Accepted: 08 February 2019, Published Online: 14 February 2019

1. Introduction

Play facilitates integration, survival, and understanding. It supports flexibility in thinking, adaptability, learning, and exploring the environment. These abilities are essential for developing social, emotional, and physical skills [1]. The ability to engage in pretend play reveals a child’s cognitive and social capacities [2].

1.1. Background

Visual impairment has psychological, social, mobility, and occupational effects. The psychological effect refers to negative consequences such as the loss of self-identity and confidence [3]. Play and exercise can create scenarios that promote social inclusion.

For parents, mobility is the main concern. Limited mobility affects a child’s participation in daily physical activities. In the absence of guidance, children experience frustration [3]. An Indian dancer conducted a workshop for visually challenged and sighted underprivileged students at Acharya Sri Rakum School [4]. School children were observed to prefer such guidance and interactive teaching. For visually challenged children, the use of canes while moving is an additional psychological barrier [4].

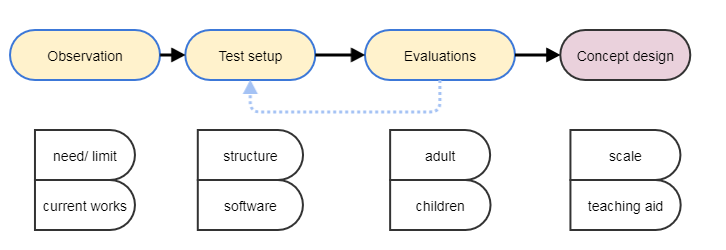

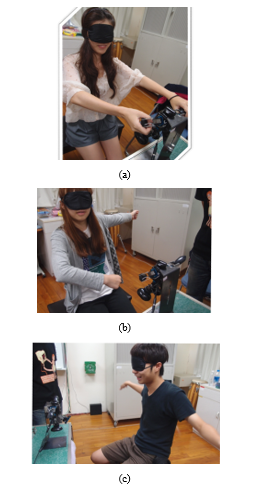

Several observations were made at a special school for the visually impaired. As shown in Figure 1(a), many students preferred staying at their desks during the class break because they were concerned about the potential risks of outdoor activities.

Students did not exhibit considerable behavioral changes in a typical classroom. Figure 1(b) shows students conversing with a downward-looking posture and limited body expression. The absence of body language results in the loss of a communication channel with others. Slow physical movements suggest low confidence. Figure 1(c) depicts children being guided as a group to reduce uncertainty. They supported each other and followed the footsteps of the student in front. In this case, students missed the opportunity to explore their surroundings independently in a safe environment. Some students are physically unfit, which causes difficulty with balance and coordination. Individual guidance is required to encourage such students to exercise. Furthermore, it is necessary to provide accessories and an environment that offer safe opportunities for physical exploration.

Gestures in this study included simple movements: moving the hand left and right indicated rejection, and pressing down indicated affirmation. English speakers producing silent gestures enact the elements of a motion event [5]; humans rely on such motion elements when they convey events without language. Research on gestures has demonstrated a strong correlation between speech and body in language use [6].

Figure 1. Behavior observations of visually impaired children: (a) student in a regular classroom, (b) lack of posture expression during talk, (c) group guiding by teacher

Gestures play an essential role in language learning and development [7] and serve as a critical communication channel between people. Some dancers touch the instructor’s body with their hands to perceive the movements being illustrated; this may cause discomfort to the instructor. Blind children use gestures to convey thoughts and ideas [7]. Gesture is integral to the speaking process. Corresponding postures and other sensations can fill the learning gap.

1.2. Related existing studies

Physical exercise can reduce feelings of isolation in blind children and strengthen their friendships with each other. Figure 2 depicts a linear servo structure built from five motors [8] to emulate the posture of the main torso or a hand. A solid bar is fixed to each servo motor through a joint. The joints extend the flexibility of the structure and allow it to form a curve. A student needs both hands to perceive a posture.

Robot dance has been developed since 2000. Many programmable humanoid robot dancers (Figure 3) are available. A dance-teaching robot was proposed as a platform (Figure 4) in Japan. An estimation method for dance steps was developed in [10] by using time series data of force. A study [11] presented a physical human–robot interaction that combined cognitive and physical feedback of performance. Direct contact enables synchronized motion according to the partner’s movement. A role adaptation method was used for the human–robot shared control [12]. The robot can adjust its role according to the human’s intention by using the measured force.

Figure 2. Linear servo structure emulates body posture with linkage bar [8].


Figure 3. Humanoid robot dancer [9]


Figure 4. “Robot Dance Teacher” of Tohoku University [10]


Teaching activities that require physical contact and complex motion were investigated in [13]. In that methodology, teaching takes place through physical human–robot interaction: learning is enhanced by providing constant feedback to users and by progressively changing the interaction of the controller. A robot was used by a special education teacher and a physical therapist [14]. An external device encouraged a dancer to perform more energetically to the rhythm of upbeat songs. Children were not instructed to mimic the movements of a human or robot dancer, which is the common practice in regular dance instruction.

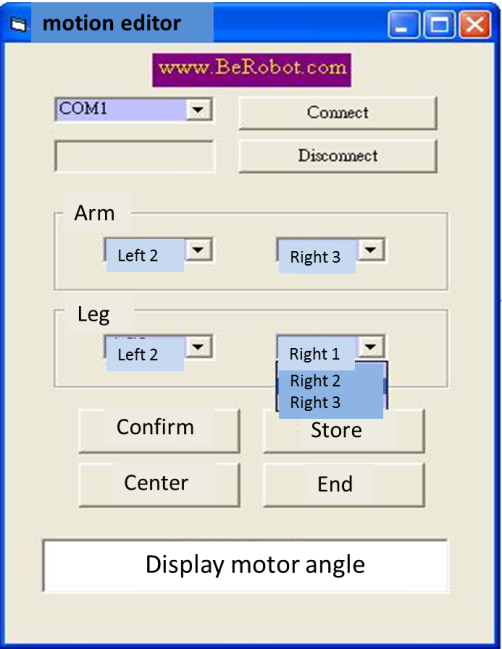

2. Methodology

2.1. Motivated posture display

A small commercially available robot [15] (Figure 5) was used as motivation for posture expression. The trial was based on three considerations: (1) provide haptic attention, (2) encourage posture response, and (3) fit the available school budget. When children perceive the posture of a body, they imitate the movements of the body. The small size of robots was suitable for activities involving touching with the hands.

The main controller was placed in the central square part. Two legs and two arms driven by servomotors extended from the body; the lower legs were wide and thick. This big-foot structure was intended to lower the center of gravity. The proportions of the legs and arms did not match those of a human. Although the robot can emulate body postures, adjustments are necessary to ensure proper interaction with children.

2.2. Structure of studies

Qualitative research involves interviews and observations. Students and a teacher took part in the practice sessions. Three observers then filled out observation forms, supported by video recordings. The test was first conducted with sighted adults wearing eye masks.

A supporting frame, which consisted of a steel frame attached with an adjustable clamp to hold a micro-robot’s body, was provided to prevent the robot from falling (Figure 7). The height of the bracket was 30 cm, and hooks were fastened at the upper and lower ends of the body. Although the body was buckled, the limbs were free to move. Therefore, the user could safely touch the limbs without worrying about falling.

Figure 6 shows the observation steps. The evaluation procedure is as follows:

- explain the procedure

- touch the upper part of the robot’s body

- emulate the corresponding posture and evaluate the response

- correct the response with vocal or physical guidance

- reach the lower body and follow the posture

- perform the whole-body posture

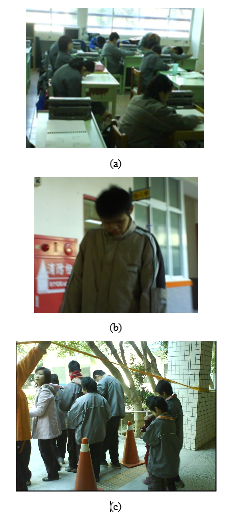

2.3. Motion edit

BeRobot is equipped with a robotic motion commander, which is a graphic interface that enables a user to control motions such as twisting, bending down, bending knees, walking, and standing. Figure 7 displays the motion commander interface.

Figure 7. Motion commander of remote control mode and interactive mode


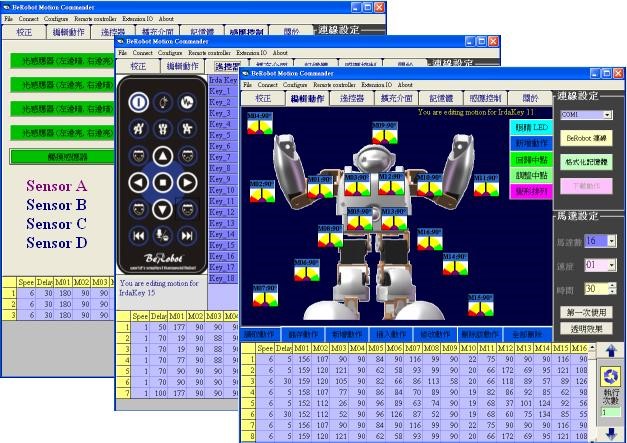

A program mode was included for designing complex movements. As depicted in Figure 8, we designed a simplified control interface with fewer parameters so that the school teacher could overcome the barrier of editing motions. We used a USB interface to control the robot from a computer. The section editor program was developed using Microsoft Visual Studio. By selecting the movement type, users produced the desired simplified postures. The midpoint was the initial state of the pose. An ordinary action consists of a few simple hand and foot movements. Once a servo motor is adjusted to the correct position, its angle is transmitted through the USB port and stored in memory. The user can manually control the timing by clicking to switch among different postures. Ordinary movements are achieved by combining simple postures.
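The idea of storing postures as servo angles and combining them into an ordinary movement can be sketched as follows. This is a minimal illustration only: the joint names, the 90° midpoint, and the example angles are assumptions made for the sketch and are not taken from the BeRobot software or the editor described here.

```python
from dataclasses import dataclass, field

# Hypothetical joint names; the actual servo channels are not listed in the text.
JOINTS = ("left_shoulder", "right_shoulder", "left_hip", "right_hip")

MIDPOINT_DEG = 90  # assumed servo midpoint, used as the initial state of a pose


@dataclass(frozen=True)
class Posture:
    """A named posture that stores only the joints moved away from the midpoint."""
    name: str
    angles: dict = field(default_factory=dict)  # joint name -> angle in degrees

    def full_angles(self):
        """Return an angle for every joint, falling back to the midpoint."""
        return {joint: self.angles.get(joint, MIDPOINT_DEG) for joint in JOINTS}


def build_movement(postures):
    """Combine simple postures into one movement: an ordered list of angle frames."""
    return [posture.full_angles() for posture in postures]


# Illustrative values only.
neutral = Posture("neutral")
hands_up = Posture("hands_up", {"left_shoulder": 170, "right_shoulder": 10})
frames = build_movement([neutral, hands_up, neutral])
```

Each frame in `frames` holds one angle per joint, so an operator could step through the list manually, which mirrors the click-to-switch timing described above.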

Figure 8. Motion editor: interface for interactive editing of posture

3. User test

3.1. Adult test: object preparation and task

The size of the robot (length × width × height) was 6.5 × 10.2 × 15.5 cm. To make movements easier to detect, we increased the length of the upper arm with a laser-cut steel strip extension. After the extension, the proportions of the upper and lower arms were close to those of a human arm.

We invited college students to detect a potential problem. The purpose of the test was to gauge the body sensitivity through touch. Observers were trained graduate students. All interview sessions were recorded on video. Observers viewed the videos and characterized them.

We first demonstrated a few postures (e.g., open hands and one hand straight down), and the subject attempted to replicate them. We asked the students to touch the robot gently, and the achievement rate was high. During the test, subjects were allowed to state their intentions verbally, which helped us understand their feelings. Subjects preferred continuous motion; they reported positive experiences and were motivated to move physically. The following adjustments were made during the tests:

- Adjusted the robot one movement at a time

- Extended the duration of touch, allowing more time to imitate each posture

- Changed the robot’s orientation from back to front to ensure that a student’s direction was the same as that of the guiding robot

- Added regular pauses to ensure that subjects perceived the movement

- Provided vocal and physical guidance

3.2. Adult test results

College students wore eye masks to ensure that they perceived motion through touch. To avoid the scenario of testers influencing each other, we isolated subjects by testing one person at a time. The picture and video indicate that most of them were happy. Because their palms were large, they could perceive the overall posture. The majority of college students could repeat the presented posture.

During continuous operation, interference between the palm and the robot affected how clearly a movement was perceived. When the action switched, the subject’s grip on the robot could detach. Some subjects improvised their own movement based on the final posture. Therefore, direct touch was restricted during the practice of a static posture. Figure 9 depicts the interactions. The findings of the experiments in which sighted adults wore eye masks are as follows:

(a) A 22-year-old woman smiled. Although her palm was sufficiently large, she held the robot’s hand. She seemed to look forward to being led by the movement.

(b) A second woman made a fist with one hand and moved smoothly backward in the opposite direction. Because she was face to face with the robot, she asked whether she should mimic the robot in the same direction.

(c) Male subjects mimicked the movement of flapping wings. Although the robot was small, they could perceive the basic posture of the flying movement.

A semi-structured acceptance interview was conducted, and its responses are presented as follows:

(1) Can you identify the corresponding body parts of the robot?

Before beginning the activity, guiding tutors specified the robot’s body parts by encouraging participants to touch the part of the robot being introduced. Thus, users could identify body parts, such as the head and hands, of the robot. In the case of blind children, a tutor was attentive to rejection situations. Blind children are reluctant to touch unfamiliar objects. A guided story allows them to gradually relax during the test.

Figure 9. Supporting structure and test situation in which a user interacts with a program-controlled robot: (a) touching, (b) the response, (c) response with both vocal and physical guidance

(2) Can you emulate the robot’s movements?

Preliminary measurement results indicated that 80% of people could identify the movement. However, 20% of users were unable to emulate these actions in the allotted time. Although some participants could identify the parts, not all were able to identify the movement. Blind children require additional time to touch the robot.

(3) Would you recommend this activity for blind children? Any other proposals or ideas?

Approximately 80% of the measured responses indicated that the robot was small and the dimensions of the body did not match with those in a real situation. For example, the robot’s head was relatively small and in contact with the main body. Participants indicated that the position of the robot’s head should be clear to identify the corresponding body part.

3.3. Children’s test: object preparation and task

Six visually impaired subjects aged between 7 and 12 years were selected. They are referred to as A, B, C, D, E, and F in this discussion. All of them were students at a special education school for the visually impaired in central Taiwan.

To avoid disrupting the students’ normal classes, we arranged to test the six children during class activity time. We conducted four teaching sessions of approximately 15 min for each student. Each student’s degree of acceptance and fluency of movement were recorded every week to measure the child’s performance after receiving physical aids. Researchers first narrated a story and invited the children to experience the posture patterns of the robot (Figure 10). To avoid frustration, actions were arranged from simple to complex.

Compared with the adults, the visually impaired children became agitated when touching the supporting frame. To reduce this influence, we removed the frame and stabilized the robot by hand. The micro-robot could then be held and handled to increase intimacy, and the teacher familiarized the children with the robot.

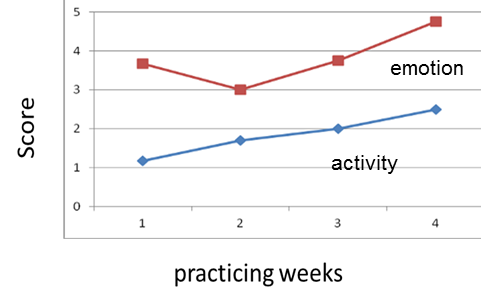

Cautious touching extended the response time. An exercise behavior coding system was developed by modifying the system created by Moore. It consists of two behavioral categories: activity and emotion. Activity refers to a child’s posture behavior, and emotion refers to participation behavior. Specific items of observation were graded on a scale of 1–5 according to the following criteria:

- Activity action: The child touches the robot with precise motions and in an appropriate manner without being reminded, using fingers and palms rather than arbitrary movements, and can perform similar actions without the robot’s assistance.

- Emotion: Emotion covers psychological aspects such as excitement, feeling, cognitive processes, and behavioral responses to a situation. It refers to a child’s happiness, anger, sadness, and joy.

Children can actively touch the robot and express positive emotions by using spoken language and body and facial expressions. From the beginning to the end of the activity, the children did not cry or use negative language. The children were fond of the event and appeared highly interested.

3.4. Children’s test results

During the four weeks, both vocal and touch guidance were provided to enhance interactions. After the supporting frame was removed in the second week, the robot had to be held during the test to prevent it from tipping over. The records indicated the following:

A-1 (student A, first week): When the student entered the classroom, he said he wanted to play with the robot. He touched the robot and smiled. When the music stopped, he stretched out his hand to pull the robot’s limbs, which changed the movement settings. After a reminder, he could imitate the actions of “lifting both hands,” “flying,” and “swimming.”

D-1: The student did not imitate the robot’s action and vigorously grabbed the robot’s hand. After a reminder, he gently touched the robot with his hands and palms.

A-2: The facilitator held his hand, and the student touched the robot gently. However, the student gripped the robot once the facilitator left. The child could distinguish robot movements such as flat or low. However, he did not repeat every action and replied, “I won’t.”

Figure 10. Participant interacted with the robot through guidance.


D-2: The child gently touched the robot after being prompted. He followed the robot and moved around. When the music stopped, he emulated the robot’s action. His facial expression was pleasant.

A-3: The student expressed that he wanted to touch the robot. After being prodded several times, he attempted to emulate the robot’s movements. However, some of the actions were incorrect. He gently touched the robot and was able to identify the body part of the robot.

D-3: After verbal reminders, the student displayed initiative to imitate the correct robotic action. The subject had residual vision and could see some movements.

A-4: The student could grasp the arm of the robot with his hand and pulled the arm. He touched the robot lightly and attempted complex moves. He pulled the robot’s arm, swayed around, smiled, and said, “Sister, look.”

D-4: The student swung his limbs without assistance, and he could do the same action immediately after the robot stopped. He had many verbal interactions with the person recording the video and continued to smile.

The study indicated that visually impaired subjects enjoyed playing with the robot. Figure 11 presents the average results of independent observers. By manually analyzing recorded videos, we concluded that the frequency of bodily movements increased. Emotion scores were considerably higher than activity scores. Emotions were influenced by mentality. As the children became familiar with the teaching during the test, their resistance decreased considerably. Activity action requires carefully distinguishing the details of a move, which in turn requires clear tactile identification.
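Averaging the observers' 1–5 ratings per week and per category can be sketched as below. The function name, the data layout, and the numbers are assumptions made for illustration; the example values are placeholders and are not data from this study.

```python
from statistics import mean


def weekly_averages(scores):
    """Average observer ratings.

    scores: {week: {category: [one 1-5 rating per observer]}}
    returns: {week: {category: mean rating}}
    """
    averages = {}
    for week, by_category in scores.items():
        averages[week] = {}
        for category, ratings in by_category.items():
            # Reject ratings outside the 1-5 scale used on the observation form.
            if not all(1 <= rating <= 5 for rating in ratings):
                raise ValueError(f"rating outside 1-5 in week {week}, {category}")
            averages[week][category] = mean(ratings)
    return averages


# Placeholder ratings for illustration only (not study data).
example = {
    1: {"activity": [2, 3, 4], "emotion": [4, 4, 4]},
    4: {"activity": [3, 4, 5], "emotion": [5, 5, 5]},
}
result = weekly_averages(example)
```

Keeping the two categories separate makes it possible to compare emotion and activity trends week by week, as Figure 11 does.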

The demand for verbal guidance decreased week by week, and the average score for correct body movements gradually increased. However, the guide frequently had to remind the subjects to touch the robot properly. Several students correctly imitated movements; student C could accurately and completely repeat the movement by the fourth week. In the emotional aspect, the students often smiled, expressed fondness for the robot, and wanted to touch it. Student D displayed rich positive emotions in the fourth week.

Figure 11. The evaluation results by four independent observers.


Two of the participants were absent for two weeks. The absence caused an analysis problem. Although analysis was performed in a small group, results revealed that the effectiveness of the robot depended on the teacher’s guidance. The finding suggested that the interaction with a robot positively encouraged posture emulation behavior.

4. Discussion and Concept

In contrast with adults, visually impaired children exhibited emotional reactions or inadequate concentration because they were not able to view the setting. Mood affects the willingness to perform actions, so some children initially resisted and responded little in the first week. Teachers’ encouragement was therefore required for the children to participate gradually. A designed auxiliary object plays an assisting role: it can assist teachers in initial activities, but it cannot replace the vital role of a teacher. The need for guidance on touching the micro-robot properly reflects the fragility of the micro-robot, and we propose the following design concept for teaching.

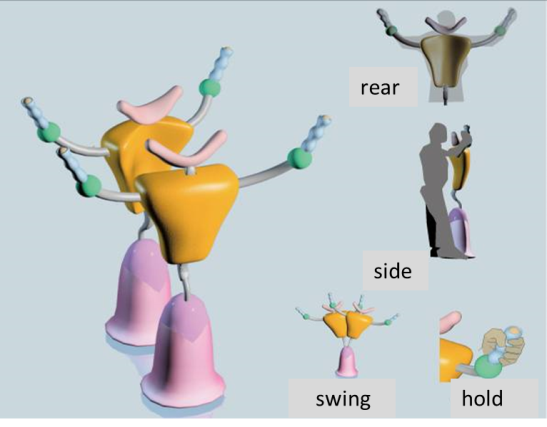

4.1. Concept design

We selected a stationary base structure. Figure 12 illustrates changes in the body, with the motors located on the torso and hands. The user rests the head against the upper cushion and holds the robot’s hands. Rotatable gears were present on the arm and elbow, and the simplest waist shaft requires five motors. The arm and the waist can be rotated sideways by adding more degrees of freedom, which reduces the cost of the robot. Because the magnitude of the movement is not large, the child will not be hurt when cautiously holding onto the robot.
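A small range of motion can be enforced in software by clamping every target angle before it is sent to a motor. The sketch below is only one reading of the concept: the mapping of the five motors to two shoulders, two elbows, and one waist, the 90° neutral angle, and the 30° limit are all assumptions and do not come from the design itself.

```python
# Hypothetical joint list: one possible five-motor layout (assumption).
UPPER_BODY_JOINTS = (
    "left_shoulder",
    "left_elbow",
    "right_shoulder",
    "right_elbow",
    "waist",
)

NEUTRAL_DEG = 90     # assumed neutral servo angle
MAX_OFFSET_DEG = 30  # assumed limit that keeps movements small


def clamp_pose(pose):
    """Limit each joint to NEUTRAL_DEG +/- MAX_OFFSET_DEG.

    Joints missing from `pose` stay at the neutral angle.
    """
    unknown = set(pose) - set(UPPER_BODY_JOINTS)
    if unknown:
        raise KeyError(f"unknown joints: {sorted(unknown)}")
    low = NEUTRAL_DEG - MAX_OFFSET_DEG
    high = NEUTRAL_DEG + MAX_OFFSET_DEG
    return {
        joint: max(low, min(high, pose.get(joint, NEUTRAL_DEG)))
        for joint in UPPER_BODY_JOINTS
    }


safe_pose = clamp_pose({"waist": 150, "left_shoulder": 10})
```

Clamping in one place means that every posture, however it was edited, stays within the same small envelope around the neutral position.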

Figure 12. Perspective view of robot and user interaction, which emulates upper body posture, with a base for stabilization

The user used this upper-body humanoid robot as a teacher’s body. When a posture changes, the whole body can perceive the change. The motion control driver adjusts parameters to ease the learning of blind children and synchronize learning with music. This design incorporated motors with pressure sensors, which determined the speed of the response. Although the external force was large, it demonstrated that a user could keep up with the current action and slow down to avoid danger.
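The text leaves the exact control rule open. One possible reading, sketched here under stated assumptions, is that the playback speed is reduced as the measured contact force grows and drops to zero above a hard limit. The function name, the linear ramp, and the thresholds (in newtons) are hypothetical and are not part of the proposed design.

```python
def playback_speed(force, soft_limit=1.0, hard_limit=3.0, max_speed=1.0):
    """Return a speed factor between 0 and max_speed for a measured contact force.

    All thresholds are hypothetical values in newtons:
    - at or below soft_limit the motion runs at full speed,
    - between the limits the speed falls linearly,
    - at or above hard_limit the motion stops.
    """
    if force < 0:
        raise ValueError("force must be non-negative")
    if force <= soft_limit:
        return max_speed
    if force >= hard_limit:
        return 0.0
    return max_speed * (hard_limit - force) / (hard_limit - soft_limit)


light_touch = playback_speed(0.5)  # full speed
firm_grip = playback_speed(2.0)    # reduced speed
strong_pull = playback_speed(3.5)  # stopped
```

A linear ramp is used only because it is the simplest choice; a real controller would need thresholds tuned to the sensors and to the children using the device.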

4.2. Comparison with existing work

Emulating the posture of the entity assists students in understanding the meaning of posture expression. Learning correct postures aids communication with others. Most previous studies have focused on cutting-edge dance teaching, which requires complex control systems and mechanical constructions. For the vulnerable visually impaired group, it is difficult to obtain sufficient funds to procure such teaching aids. The visually impaired group requires a simple structure, which appropriately simplifies the design goal and transforms the complex dance into simple postures. Once students are familiar with gestures, movement learning can be expanded. This can be a useful tool for visually impaired people.

5. Conclusions

Dance is a continuous movement that involves changes in posture over time. We evaluated posture displays through direct contact. The small robot’s motors have a low torque, which can be controlled by the strength of our palms, so the user does not risk injury when the robotic hand is touched directly. The body dimensions of a small robot differ from those of a human, so we extended the upper arm to make the movements of the upper and lower limbs easier to distinguish. This setup produced positive results with visually impaired children, who attempted to emulate the posture of the robot. A standing concept with torso and hand movements was presented as a teaching aid.

Acknowledgment

This research was supported by the National Science Council, Taiwan, R.O.C., under grant NSC 99-2221-E-324-026-MY2. We gratefully thank the research students and the Special Education School in Taichung for arranging the field test.

References

[1] Case-Smith, Jane, Anne S. Allen, and Pat Nuse Pratt, eds. Occupational Therapy for Children. St. Louis: Mosby, 2001.

[2] Stagnitti, Karen, and Carolyn Unsworth. “The importance of pretend play in child development: An occupational therapy perspective.” British Journal of Occupational Therapy, 63(3), 121-127.

[3] Roe, Joao. “Social inclusion: meeting the socio-emotional needs of children with vision needs.” British Journal of Visual Impairment, 26(2), 147-158.

[4] Visually impaired children and dance. [Online]. 2011. Available: https://youtu.be/hYalrsMfU90

[5] Özçalışkan, Şeyda, Ché Lucero, and Susan Goldin-Meadow. “Does language shape silent posture?” Cognition, 148, 10-18.

[6] Lowie, Wander, and Marjolijn Verspoor. “Variability and variation in second language acquisition orders: A dynamic reevaluation.” Language Learning, 65(1), 63-88.

[7] Iverson, J. M., and S. Goldin-Meadow. “Why people posture when they speak.” Nature, 396(6708), 228.

[8] [Online]. 2019. Available: https://www.trossenrobotics.com/robotgeek-snapper-robotic-arm

[9] [Online]. 2019. Available: https://www.videoblocks.com/video/humanoid-robot-dance

[10] Takeda, Takahiro, Yasuhisa Hirata, and Kazuhiro Kosuge. “Dance step estimation method based on HMM for dance partner robot.” IEEE Transactions on Industrial Electronics, 54(2), 699-706, 2007.

[11] Granados, Diego Felipe Paez, et al. “Dance Teaching by a Robot: Combining Cognitive and Physical Human-Robot Interaction for Supporting the Skill Learning Process.” IEEE Robotics and Automation Letters, 2(3), 1452-1459, 2017.

[12] Li, K. P. Tee, W. L. Chan, R. Yan, Y. Chua, and D. K. Limbu. “Continuous Role Adaptation for Human-Robot Shared Control.” IEEE Transactions on Robotics, 31(3), 672-681, 2015.

[13] Granados, Diego Felipe Paez, Jun Kinugawa, and Kazuhiro Kosuge. “A Robot Teacher: Progressive Learning Approach towards Teaching Physical Activities in Human-Robot Interaction.” Proceedings of the IEEE International Symposium on Robot and Human Interactive Communication (ROMAN 2016). IEEE, 2016.

[14] Barnes, Jaclyn A. Musical Robot Dance Freeze for Children. Diss. Michigan Technological University, 2017.

[15] [Online]. 2019. Available: berobot.com