Evaluation of Information Competencies in the School Setting in Santiago de Chile

Volume 6, Issue 4, Page No 298-305, 2021

Author’s Name: Jorge Joo-Nagata1,a), Fernando Martínez-Abad2


1Universidad Metropolitana de Ciencias de la Educación, Departamento de Historia y Geografía, Ñuñoa, 7760197, Chile

2Universidad de Salamanca, Instituto Universitario de Ciencias de la Educación, Salamanca, 37008, Spain

a)Author to whom correspondence should be addressed. E-mail: jorge.joo@umce.cl

Adv. Sci. Technol. Eng. Syst. J. 6(4), 298-305 (2021); DOI: 10.25046/aj060433


Keywords: Competencies for life, Blended learning, Primary education


This study evaluated competencies related to the use of digital information through technological tools, with the aim of acquiring applicable knowledge by searching for and retrieving information. Methodologically, a quasi-experimental design without a control group was applied to a sample of primary education students from Chile (n=266). First, a diagnosis of digital-informational skills was performed; then, the results of a course in a blended learning (b-learning) context (treatment) are reported. The results show significant differences between the participant groups, confirming learning in information competencies and distinguishing an initial level from a subsequent intermediate level.

Received: 07 June 2021, Accepted: 19 July 2021, Published Online: 16 August 2021

1. Introduction

Information and Communications Technology (ICT), the development of basic skills, and contexts of information overload have gradually become the three major fields for the teaching-learning process at the different schooling levels. Additionally, expectations and objectives for information use in a widely interconnected world have been set. In this way, the key skills in the ICT context are the search, use, evaluation, and processing of information [1,2].

Thus, the integration of new data and communication systems, mainly Internet-based technologies, is a social reality that challenges the global educational system [3,4] and, particularly, the educational system in Chile. The concern of the educational administration is reflected in successive efforts to investigate and propose improvements in material coverage (facilities, hardware and software, nationwide connectivity), in teacher training and innovation, and in the organization of teachers at the management or curriculum development level. However, scientific knowledge on the acquisition and development of digital and information competencies requires systematic methodologies for the collection, analysis, and validation of information, so that applicable and generalizable conclusions can be drawn to advance this new curricular content.

The quality of education in Chile, at its different levels, is the reason for the emphasis on including key competencies in the foundations of the mandatory curriculum. A variable that considerably affects this improvement is the level of training provided to teachers and at the school education stage [5,6]. Evaluation and training are part of implementing innovative ICT actions at educational centers.

The objective of this article is to evaluate digital and information competencies, together with the implementation and evaluation of an innovative ICT project for training school students in searching and processing information. Specifically, a diagnosis of the level of digital-informational competencies in school students was performed, followed by an experimental design able to verify the effectiveness of a blended learning training program for developing digital-informational competencies in the ongoing training of school students. Finally, the level of variation in the evaluated dimensions (search, evaluation, processing, and communication of information) is established for the group of analyzed students.

The rest of the article is organized as follows. First, it provides background on digital-informational skills in a formal educational setting and the current situation of ICT. Then, the applied methodology and the sample characteristics are detailed. Next, the pre-test and post-test results are summarized. Lastly, the discussion and conclusions are presented.

2. Theoretical framework

2.1. Digital-informational skills and the school setting

Using ICT as learning objects, vehicles of information literacy, and tools for the ongoing training of students in the school system adds value to the research field, since they serve not only as methodological elements but also as findings and achievements. The use of ICT in school education is often presented as having a positive impact on the development of 21st-century digital skills and of skills in general [7,8]. Thus, digital literacy can be understood as the development of a wide range of skills derived from the use of applied technology, allowing students to research, create content, and communicate digitally and, therefore, to participate continuously in the development of a society with a strong digital component [7–9].

Despite the classic label of “digital natives” [10], which holds that current students have these digital skills by default, several studies have determined that their knowledge is at medium and low levels [11–15]. Given this evidence, it is necessary to develop these skills in order to prevent differences in the use of digital technologies. In the particular case of Chile, several studies have shown that students are capable of solving tasks related to the use of information as consumers, as well as organizing and managing digital information. On the other hand, very few students succeed in skills related to the use of information as producers and creators of content [9,16,17].

Skills related to information management through digital tools –particularly free access resources found on the Internet– with the objective of acquiring valid and verified knowledge through the search and collection of information, along with the interpretation of text information from the reading of new data, reflection, and its evaluation, will be understood as information literacy, also known as ALFIN, by its Spanish initials [18–20].

Beyond the existing controversy over the importance of the terminology used in defining concepts such as “Processing of Information” and “Digital Skill” [21–23], “Informational Skill” can be placed within the scope of this key competency. Information literacy lies at the center of the generic skills and has become a new paradigm in the ICT scene. It is understood as the cognitive–affective fabric that allows people not only to recognize their informational needs but also to act on, understand, find, evaluate, and use information of the most diverse nature and sources.

2.2. Digital learning in a blended learning setting

For the development of digital and information literacy, online modalities are increasingly widely adopted, in particular when the courses are integrated into academic study plans or in contexts where this type of education is required [24]. Moreover, the experience of adopting online or b-learning modalities has been strengthened in extreme situations such as the confinement caused by COVID-19, in which teachers came to understand the context of online learning. However, a variety of problems have arisen during implementation, such as the availability of facilities, Internet access, the need for new forms of planning, implementing, and evaluating learning, and collaboration with parents [25–27]. Notwithstanding the above, digital technologies are an educational resource that allows better adaptation to different types of students and their different personal situations. They take into account elements such as attention to diversity and the development of key skills, while offering multiple possibilities for collaboration between teachers and partners in new online communicative settings.

Thus, the challenge of providing an interactive learning experience for students in large class groups and the concern about the quality of teaching in this type of setting have been key catalysts for reconsidering educational approaches at the different educational levels [28].

Blended learning (b-learning), also known as hybrid learning, is an evolving field of research within the wider dimension of electronic learning (e-learning). It refers to instructional practices that combine traditional face-to-face approaches with online or technology-mediated learning, with the Internet as the main tool [24,29]. Since the beginning of the 21st century, a significant number of studies in this field, with diverse implications for education, have been developed. However, there is still limited evidence that b-learning improves students' learning results [24,30].

3. General context

The interest of organizations such as the European Union, the OECD, and UNESCO for this type of research is relevant [31–33], since the circle of evaluation-formation and educational innovation constitutes one of the strategic axes in the education field for the development, not only of key areas in all the productive fabric but also in critical thought in a globalized world. This way, the formation of citizens regarding skills focused on demands of work environment in the 21st century should constitute a fundamental concern for current governments.

Currently, research on information competencies has reached a great impact [34–38]. However, efforts to advance tools for evaluating the proficiency level in digital and information skills are not broad enough. Most of the research carried out has established and implemented scales measuring self-perception of one's own digital and information skills, their development, and the general evaluation of digital skills [39–47]. It is important to point out that, while most of the research analyzed and cited above has a precise and concrete application, only a few studies integrate the evaluation and use of digital and information competencies within the educational curriculum [47–49]. Although there has been a specific increase in these digital skills among teachers from diverse areas, particularly teachers who teach one course, development focused on digital and information competencies has not occurred in Chile; rather, these competencies have been derived from the general implementation of digital skills [9,50–54].

Additionally, a significant part of the research carried out in the formal education field, from national as well as international contexts, and especially in primary school and university educational levels, establish training programs that develop themselves from specific curricular or disciplinary aspects [39,42,55–60]. Nevertheless, due to the multidimensional structure that digital and information skills raise, the experiences within these training programs tend to aim at the specific dimension of technology, collection, and processing of data, rather than a complex, global and holistic view of them.

4. Objectives and hypothesis

The objectives are, first, to analyze the level of ICT competencies of the school student body and, secondly, to present an experimental design able to check the efficacy of a teaching program for increasing information competencies in the ongoing training of school students. Finally, the level of variation in the measured dimensions (search, evaluation, processing, and communication of information) is established for the group of students analyzed.

5. Materials and methods

5.1. Research design

This is an information analysis study based on research data from students in the last stage of primary school [61], in which a quasi-experimental design without a control group was used [62,63]. In this study, an educational intervention with ICT and information competencies was performed to support innovation and integration models in the teaching and learning process.

5.2. Participants

The study sample consists of students of both sexes in the last stage of primary education in Chile, in the Province of Maipo area. The sample was stratified according to the school levels the students belonged to, along with their schools of origin.

The sample was composed of 266 students. According to their socio-demographic features, the distribution can be summarized as follows:

- By sex, 50.4% of the sample were female students and 49.6% were male students.

- By age, students were between 12 and 17 years old, with an average of 13.89 years.

- By education level and sex, 63.53% of students were in the 8º grade of primary school (50.88% women and 49.11% men), and 36.46% were in the 7º grade of primary school (50.51% men and 49.48% women).

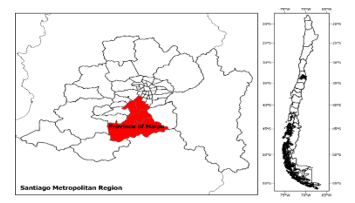

Figure 1: Study area: Province of Maipo


The sample is drawn from the population of primary school students aged between 12 and 17 years in the Province of Maipo, in the Santiago Metropolitan Region (Figure 1), equivalent to N=106,745 [64]. With a significance level of α = 0.05 and maximum variability (p = q = 0.5), the sampling error obtained for the sample of 266 participants is equal to 8.2%.
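As an illustration of how a sampling error of this kind can be computed, the following minimal sketch uses the usual margin-of-error formula for a proportion with a finite population correction. The function name, the assumption of simple random sampling, and the critical value z = 1.96 (for α = 0.05) are assumptions made here, not details given in the text; because the study used a stratified sample, the value this sketch returns need not coincide with the reported figure.

```python
import math


def sampling_error(n, N, p=0.5, z=1.96):
    """Margin of error for a proportion, assuming simple random sampling.

    n: sample size, N: population size, p: assumed proportion,
    z: critical value (1.96 is assumed here for alpha = 0.05).
    """
    q = 1.0 - p
    standard_error = math.sqrt(p * q / n)
    # Finite population correction; close to 1 when N is much larger than n.
    fpc = math.sqrt((N - n) / (N - 1))
    return z * standard_error * fpc


# Sample and population sizes taken from the text.
error = sampling_error(n=266, N=106_745)
print(f"Sampling error under these assumptions: {error:.1%}")
```

The finite population correction has very little effect here because the population is several hundred times larger than the sample.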

5.3. Data collection instrument (test)

The dependent variable has been defined as the level of acquired competency of the students on digital and information skills, measured before (pre-test) and after (post-test) the implementation of the educational intervention (treatment).

The instrument was applied at the pre-test stage as a diagnosis of the competencies, and at the post-test stage to evaluate the information competencies reached by the participating students, measuring the level of performance obtained after the treatment. The test is composed of 29 elements of dichotomous nature, from 37 questions of single and multiple selection. It presents 7 questions in the information search dimension, 10 questions in the evaluation dimension, 5 questions in the information processing dimension, and 9 questions on communication and dissemination of the information (Table 1).

Table 1: Dimensions and variables used in the instrument (test)

| Dimension | Source | Metric | Description |
|---|---|---|---|
| Predictor variables | | | |
| Socio-demographic | Test; data provided by the educational center or the Ministry of Education | Sex; Age; Grade; Municipality | Socio-demographic data of the participants of the study (sample). |
| Criterion variables | | | |
| Digital and information skills focused on the informational part | Test | Search of the information | Grade obtained in the survey (instrument) about the item. Questions 1 to 7. |
| | | Evaluation of the information | Grade obtained in the survey (instrument) about the item. 9 questions going from items 8 to 9. |
| | | Analysis and selection of information (processing) | Data obtained through the survey (instrument) about the item. 9 questions going from items 10 to 14. |
| | | Communication of the information | Grade obtained in the survey (instrument) about the item. 9 questions going from items 15 to 18. |

5.4. Treatment

The independent or treatment variable was the teaching intervention applied to the participants during 30 training hours in a blended context [65–67], adapted from the proposal described in [68]. The teaching intervention applied in training the sample is thus an adaptation of the instrument for the development of information competencies in secondary education [65,68], which has been tested and validated in that area.

5.5. Data analysis

Concerning the data analysis, after an initial exploratory analysis of the distributions of the variables and of the homogeneity of the variance and covariance structures, parametric techniques (ANOVA with repeated measures) were applied. Intra-subject factors (pre-test/post-test) and inter-subject factors (type of school) were incorporated. After the repeated-measures study, other techniques that complement the results were applied, such as the t-test. All scores were calculated so that each item has a maximum score of 1 and a minimum score of 0. The score of each dimension was calculated as the average score of the items in that dimension multiplied by 10. Thus, all dimensions range from 0 to 10 points, which facilitates interpretation and contrast. Finally, the total score is calculated as the sum of the scores in the 4 dimensions. To assess the internal consistency of the test results, Cronbach’s alpha was calculated; its value was 0.731, considered adequate or acceptable [69,70]. All these statistical processes were carried out in SPSS 25 and R 4.0.
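The dimension scoring and the internal-consistency check described above can be sketched as follows. This is a minimal illustration rather than the authors' SPSS or R procedure: the function names and the small response matrix are hypothetical, and Cronbach's alpha is computed with its standard formula from item variances and the variance of the total scores.

```python
from statistics import mean, variance


def dimension_score(item_scores):
    """Average of dichotomous (0/1) item scores, rescaled to the 0-10 range."""
    return 10 * mean(item_scores)


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of per-student lists of item scores."""
    k = len(responses[0])  # number of items
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical answers of five students to four dichotomous items.
responses = [
    [1, 1, 1, 0],
    [1, 0, 1, 0],
    [0, 0, 1, 0],
    [1, 1, 1, 1],
    [0, 0, 0, 0],
]

score = dimension_score([1, 0, 1, 1, 0])  # 3 of 5 items correct
alpha = cronbach_alpha(responses)
print(f"Dimension score: {score:.1f}")
print(f"Cronbach's alpha: {alpha:.3f}")
```

Rescaling every dimension to the same 0-10 range means that dimensions with different numbers of items can be compared directly.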

5.6. Procedure

In all cases, the test data were collected mainly through online questionnaires. The responsible teachers were contacted, and the students took both the pre-test and the post-test online.

6. Results

6.1. General and descriptive results

First, the values approximate an average of 5 points (Table 2) across the inter-subject characteristics (sex, grade, and school). In parallel, there is an important dispersion of values, which increases in the post-test results.

At the sample level, the average reaches higher scores in the Search, Processing, and Communication dimensions, while lower scores are registered in the Evaluation dimension (Table 3). At a general level, the average score of the students is greater in the post-test. The dispersion of values also increases in the post-test, which suggests that the b-learning teaching process has created greater inequality between the measured students.

Table 2: total descriptive scores (n = 266)

| Variable | Group | Pre-test Mean | Pre-test S.D. | Post-test Mean | Post-test S.D. |
|---|---|---|---|---|---|
| Sex | Male | 4.809 | 1.263 | 5.207 | 1.413 |
| Sex | Female | 4.694 | 1.213 | 5.331 | 1.449 |
| Grade | 7º | 4.778 | 1.089 | 4.998 | 1.446 |
| Grade | 8º | 4.736 | 1.317 | 5.425 | 1.401 |
| School | School 1 | 4.682 | 1.236 | 5.171 | 1.399 |
| School | School 2 | 5.051 | 1.207 | 5.697 | 1.494 |

Table 3: Descriptive statistics per dimension (n = 266)

| Test | Dimension | Mean | Sx | P25 | P50 | P75 |
|---|---|---|---|---|---|---|
| PRE-TEST | SEARCH | 4.540 | 1.532 | 3.654 | 4.615 | 5.385 |
| PRE-TEST | EVALUATION | 5.079 | 1.939 | 3.333 | 5.556 | 6.667 |
| PRE-TEST | PROCESSING | 4.511 | 1.692 | 3.636 | 4.545 | 5.455 |
| PRE-TEST | COMMUNICATION | 5.000 | 2.044 | 4.000 | 5.000 | 6.000 |
| PRE-TEST | TOTAL | 4.752 | 1.237 | 3.953 | 4.651 | 5.581 |
| POST-TEST | SEARCH | 5.552 | 1.824 | 3.846 | 5.385 | 6.923 |
| POST-TEST | EVALUATION | 5.046 | 1.985 | 3.333 | 5.000 | 6.667 |
| POST-TEST | PROCESSING | 4.863 | 1.876 | 3.636 | 4.545 | 6.364 |
| POST-TEST | COMMUNICATION | 5.550 | 2.128 | 4.000 | 6.000 | 7.000 |
| POST-TEST | TOTAL | 5.270 | 1.430 | 4.419 | 5.233 | 6.337 |

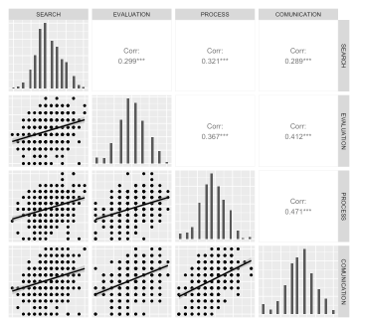

Figure 2: relation and distribution between dimensions


Moreover, the distribution of pre-test and post-test scores is similar in both groups, and their progress is similar, while in the Evaluation dimension there is a decline or no progress at all in both cases. There does not seem to be an interaction according to the school; therefore, it is not considered a covariate. The correlations between dimensions are all significant and positive (Figure 2).

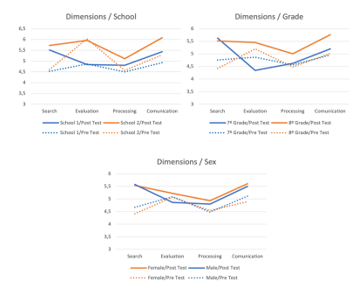

By comparing between groups and the results obtained in the post-test, it is possible to perceive differences between the course and the school groups. However, based on the sex variable, values in the scores in different dimensions do not show great differences (figure 3).

Figure 3: Post-test results based on dimensions and groups (n=266)

Figure 3: Post-test results based on dimensions and groups (n=266)

It is also possible to establish that, in the total scores and among all the variables analyzed in the contrasts, the sex variable is significant neither at the inter-subject level nor in the intra-subject interaction (Table 4).

Table 4: Comparison per variables (n = 266)

| Variable | Pre-test Mean Dif. | Pre-test t (p) | Post-test Mean Dif. | Post-test t (p) |
|---|---|---|---|---|
| Sex | -0.115 | -0.758 (0.449) | 0.123 | -0.704 (0.482) |
| Grade | 0.041 | -0.264 (0.792) | -0.427 | -2.365 (0.019) |
| School | -0.368 | -1.909 (0.057) | -0.526 | -2.366 (0.019) |

Post-test – Pre-test

| Variable | Group | Mean Dif. | t (p) |
|---|---|---|---|
| Sex | Male | -0.398 | -3.997 (<0.001) |
| Sex | Female | -0.636 | -5.614 (<0.001) |
| Grade | 7º | -0.220 | -1.667 (0.099) |
| Grade | 8º | -0.689 | -7.688 (<0.001) |
| School | School 1 | -0.488 | -5.631 (<0.001) |
| School | School 2 | -0.646 | -4.359 (<0.001) |
| Complete sample | | -0.518 | -6.841 (<0.001) |
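The pre-test/post-test contrasts reported in Table 4 are of the kind produced by a paired-samples t-test. The sketch below shows how such a statistic is obtained; it is only an illustration with hypothetical scores, not the authors' SPSS or R output. It assumes the differences are taken as pre-test minus post-test, which appears consistent with the negative signs in the table, and it returns only the t statistic and degrees of freedom, since a p-value additionally requires the t distribution (available, for example, through `scipy.stats.ttest_rel`).

```python
import math
from statistics import mean, stdev


def paired_t(pre, post):
    """Paired-samples t-test on pre - post differences.

    Returns (mean difference, t statistic, degrees of freedom).
    """
    diffs = [a - b for a, b in zip(pre, post)]
    n = len(diffs)
    mean_diff = mean(diffs)
    standard_error = stdev(diffs) / math.sqrt(n)
    return mean_diff, mean_diff / standard_error, n - 1


# Hypothetical total scores (0-10 scale) for five students.
pre_scores = [4.0, 5.0, 3.5, 6.0, 4.5]
post_scores = [5.0, 5.5, 4.0, 6.0, 5.5]

mean_diff, t_stat, df = paired_t(pre_scores, post_scores)
print(f"Mean difference: {mean_diff:.3f}, t({df}) = {t_stat:.3f}")
```

A negative mean difference under this sign convention indicates that post-test scores are higher than pre-test scores.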

6.2. ANOVA results of repeated measures

One assumption of the repeated-measures ANOVA is the homogeneity of the covariance matrices of the dependent variables [71,72]. This assumption is checked through Box's test, with the following results (Table 5):

Table 5: Box test of the equality of covariances matrix

| Statistic | Value |
|---|---|
| Box M. | 11.194 |
| F | 1.842 |
| df1 | 6 |
| df2 | 268376.644 |
| Sig. | 0.087 |

The test yielded a significance value of 0.087, which exceeds 0.05; therefore, at the 95% confidence level, the hypothesis that the observed covariance matrices of the dependent variables are equal between groups cannot be rejected.

Descriptive statistics and the results of the repeated-measures ANOVA applied to the data (Table 6) showed a significant interaction with Grade, so it was decided to keep this variable even though it is not significant on its own.

Table 6: Intra-subjects effects

| INTRA-SUBJECT EFFECTS | F | p. |
|---|---|---|
| PRE-POST | 30.92 | <0.001 |
| PRE-POST * Grade | 8.518 | 0.004 |
| PRE-POST * School | 0.088 | 0.767 |

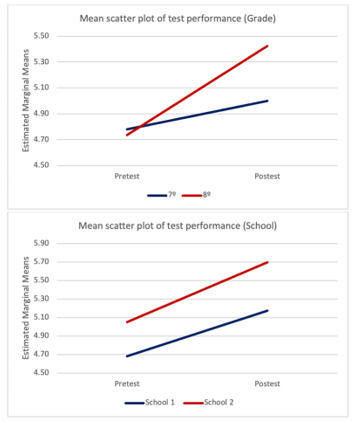

The training action was significantly more effective in the eighth-grade group course than in the seventh-grade course. While the levels are similar in the pre-test scores – slightly higher in the eighth-grade group -, the training action had better results in the eighth-grade students. Nevertheless, the School variable does not have interaction in pre-post test scores (Figure 4).

Figure 4: interactions between pre-test and post-test scores in grade and school variables

Figure 4: interactions between pre-test and post-test scores in grade and school variables
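To illustrate what a PRE-POST * Grade interaction measures, the sketch below uses a simplified case: a design with two measurement times and a single two-level between-subject factor. In that case the interaction F equals the squared pooled-variance t statistic comparing the gain scores (post-test minus pre-test) of the two groups. This is an assumption-laden simplification, since the model reported here also includes School as a second between-subject factor; the function name and the gain scores are hypothetical.

```python
import math
from statistics import mean, variance


def interaction_f(gains_a, gains_b):
    """Time x group interaction F for a 2 x 2 mixed design.

    Computed as the squared pooled-variance t statistic on gain scores.
    Returns (F, denominator degrees of freedom); the numerator df is 1.
    """
    n_a, n_b = len(gains_a), len(gains_b)
    df = n_a + n_b - 2
    pooled_variance = (
        (n_a - 1) * variance(gains_a) + (n_b - 1) * variance(gains_b)
    ) / df
    standard_error = math.sqrt(pooled_variance * (1 / n_a + 1 / n_b))
    t = (mean(gains_a) - mean(gains_b)) / standard_error
    return t * t, df


# Hypothetical gain scores (post-test minus pre-test) for two grades.
gains_grade7 = [0.0, 0.5, 0.0, 0.5]
gains_grade8 = [1.0, 0.5, 1.0, 0.5]

f_value, df_error = interaction_f(gains_grade7, gains_grade8)
print(f"Interaction F(1, {df_error}) = {f_value:.3f}")
```

A large F in this sketch means that the two groups improved by different amounts, which is the pattern described for the seventh and eighth grades.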

Table 7 shows the effects of the subjects' characteristics as a cross-sectional measure. The School variable is significant, whereas the Grade variable is not. This implies that, at a general level and taking the average of each subject's pre-test and post-test scores, there are significant differences by School but not by Grade.

Table 7: Inter-subjects effect

| INTER-SUBJECT EFFECTS | F | p. |
|---|---|---|
| Grade | 0.186 | 0.666 |
| School | 4.408 | 0.037 |
| Grade * School | – | – |

Although all students in different schools and courses reached substantial progress in their digital competencies, there are also constant differences between the schools participating in the research.

7. Discussion

The students participating in the study, despite belonging to two different schools and two different teaching levels, with different methodologies and contents within elementary school, behave as a heterogeneous group in the area of information competencies, with statistically significant differences between the compared groups in the totals as well as in the analyzed dimensions (Tables 1-7 and Figures 1-2). This is complemented by the average age of the responding students and the sex variable, factors that do not have a great incidence on the results obtained from the b-learning educational intervention.

Regarding the diverse analyzed variables, students behave in a heterogeneous manner, even though all the participants increased their scores in the post-test measures. Within the established dimensions, the differences in the evaluation and processing of digital information stand out, a situation that has been reported in other investigations [16]. In this way, particular uses in the application of technology skills are observed. Regarding the values of the digital competency indicators and the forms in which they appear, the participating students maintain medium to low digital competency levels, which coincides with the findings of other similar research [4,49,73].

8. Conclusions

The complexity of the Information Society, constantly mediated by the impact of ICT, demands that educational processes incorporate key skills, among which digital and information skills are prominent, related to the treatment of information in a virtual setting and the competency of digital processing. From this perspective, training students from the first cycles of schooling gains importance. Thus, this study evaluated the efficacy of an educational intervention in a b-learning setting for training in information competencies at the elementary level (seventh and eighth grades), obtaining progress that is significant but not sufficient for the ICT context in which we live.

The empirical values obtained in the different dimensions confirm learning in digital competencies: it is possible to differentiate an initial level from a subsequent level after applying the educational intervention, and also to differentiate by other variables such as grade and school, establishing different realities in each educational context.

These conclusions highlight the importance of evaluation and training in digital and information skills, addressing the fundamental dimensions that compose them and establishing the efficacy of the implemented educational intervention. In addition, the research contributes to the study area through its scale of reference (Santiago de Chile) and its training context (elementary students). Even though there are efforts in Chile to understand the use of technologies among secondary students [9], for elementary students there are no studies that establish the characteristics and levels of information competencies in a digital setting.

Finally, the analysis of the research contributions also identifies weaknesses, which concern the design and development of the evaluation instrument and the experimental level of the applied design.

Conflict of Interest

The authors declare no conflict of interest.

Acknowledgment

This research was supported by the Research Direction of the Universidad Metropolitana de Ciencias de la Educación, Chile [Proyecto FIPEA 02-18].

- M. Bielva Calvo, F. Martinez Abad, M.E. Herrera García, M.J. Rodríguez Conde, “Diseño de un instrumento de evaluación de competencias informacionales en Educación Secundaria Obligatoria a través de la selección de indicadores clave,” Education in the Knowledge Society (EKS), 16(3), 124–143, 2015, doi:10.14201/eks2015163124143.

- UNESCO, A Global Framework of Reference on Digital Literacy Skills for Indicator 4.4.2, 2018.

- A. Aslan, C. Zhu, “Pre-service teachers’ perceptions of ICT integration in teacher education in Turkey,” Turkish Online Journal of Educational Technology, 14, 97–110, 2015.

- V. Dagiene, Development of ICT competency in pre-service teacher education, IGI Global, Lazio: 65–75, 2013.

- E. Palma Gajardo, “Percepción y Valoración de la Calidad Educativa de Alumnos y Padres en 14 Centros Escolares de la Región Metropolitana de Santiago de Chile,” REICE. Revista Iberoamericana sobre Calidad, Eficacia y Cambio en Educación, 6(1), 85–13, 2008.

- M. Perticará, M. Román, Los desafíos de mejorar la calidad y la equidad de la educación básica y media en Chile, Konrad-Adenauer-Stiftung, Santiago, Chile: 95–122, 2014.

- J. Fraillon, J. Ainley, W. Schulz, T. Friedman, E. Gebhardt, Preparing for life in a digital age: The IEA international computer and information literacy study. International report, Springer International Publishing, London: 15–25, 2014, doi:10.1007/978-3-319-14222-7_1.

- R. Schmid, D. Petko, “Does the use of educational technology in personalized learning environments correlate with self-reported digital skills and beliefs of secondary-school students?,” Computers & Education, 136, 75–86, 2019, doi:10.1016/j.compedu.2019.03.006.

- M. Claro, D.D. Preiss, E. San Martín, I. Jara, J.E. Hinostroza, S. Valenzuela, F. Cortes, M. Nussbaum, “Assessment of 21st century ICT skills in Chile: Test design and results from high school level students,” Computers & Education, 59(3), 1042–1053, 2012, doi:10.1016/j.compedu.2012.04.004.

- M. Prensky, “Digital natives, digital immigrants,” On the Horizon, 9(5), 1–6, 2001.

- C.J. Asarta, J.R. Schmidt, “Comparing student performance in blended and traditional courses: Does prior academic achievement matter?,” The Internet and Higher Education, 32, 29–38, 2017, doi:10.1016/j.iheduc.2016.08.002.

- F.D. Guillen-Gamez, M.J. Mayorga-Fernández, M.T.D. Moral, “Comparative research in the digital competence of the pre-service education teacher: face-to-face vs blended education and gender,” Journal of E-Learning and Knowledge Society, 16(3), 1–9, 2020, doi:10.20368/1971-8829/1135214.

- M.A. Harjoto, “Blended versus face-to-face: Evidence from a graduate corporate finance class,” Journal of Education for Business, 92(3), 129–137, 2017, doi:10.1080/08832323.2017.1299082.

- L. Mohebi, Leaders’ Perception of ICT Integration in Private Schools: An Exploratory Study from Dubai (UAE), SSRN Scholarly Paper ID 3401811, Social Science Research Network, Rochester, NY, 2019, doi:10.2139/ssrn.3401811.

- P. Slechtova, “Attitudes of Undergraduate Students to the Use of ICT in Education,” Procedia – Social and Behavioral Sciences, 171, 1128–1134, 2015, doi:10.1016/j.sbspro.2015.01.218.

- T. Ayale-Pérez, J. Joo-Nagata, “The digital culture of students of pedagogy specialising in the humanities in Santiago de Chile,” Computers & Education, 133, 1–12, 2019, doi:10.1016/j.compedu.2019.01.002.

- J. Joo Nagata, P. Humanante Ramos, M.Á. Conde González, J.R. García-Bermejo, F. García Peñalvo, “Comparison of the Use of Personal Learning Environments (PLE) Between Students from Chile and Ecuador: An Approach,” in Proceedings of the Second International Conference on Technological Ecosystems for Enhancing Multiculturality, ACM, New York, NY, USA: 75–80, 2014, doi:10.1145/2669711.2669882.

- D. Bawden, “Revisión de los conceptos de alfabetización informacional y alfabetización digital,” Anales de Documentación, 5, 361–408, 2002.

- I. Szőköl, K. Horváth, “Introducing of New Teaching Methods in Teaching Informatics,” in: Auer, M. E. and Tsiatsos, T., eds., in The Challenges of the Digital Transformation in Education, Springer International Publishing, Cham: 542–551, 2020, doi:10.1007/978-3-030-11932-4_52.

- S. Virkus, “Information literacy in Europe: a literature review,” Information Research, 8(4), 2003.

- J. Castaño, J.M. Duart, T. Sancho, “A second digital divide among university students,” Cultura y Educación, 24(3), 363–377, 2012, doi:10.1174/113564012802845695.

- J. Castaño-Muñoz, “Digital inequality among university students in developed countries and its relation to academic performance,” International Journal of Educational Technology in Higher Education, 7(1), 43–52, 2010.

- L. Starkey, “A review of research exploring teacher preparation for the digital age,” Cambridge Journal of Education, 50(1), 37–56, 2020, doi:10.1080/0305764X.2019.1625867.

- C. McGuinness, C. Fulton, “Digital Literacy in Higher Education: A Case Study of Student Engagement with E-Tutorials Using Blended Learning,” Journal of Information Technology Education: Innovations in Practice, 18, 001–028, 2019.

- S. Dhawan, “Online Learning: A Panacea in the Time of COVID-19 Crisis,” Journal of Educational Technology Systems, 49(1), 5–22, 2020, doi:10.1177/0047239520934018.

- I. Fauzi, I.H.S. Khusuma, “Teachers’ Elementary School in Online Learning of COVID-19 Pandemic Conditions,” Jurnal Iqra’: Kajian Ilmu Pendidikan, 5(1), 58–70, 2020, doi:10.25217/ji.v5i1.914.

- D. Moszkowicz, H. Duboc, C. Dubertret, D. Roux, F. Bretagnol, “Daily medical education for confined students during coronavirus disease 2019 pandemic: A simple videoconference solution,” Clinical Anatomy, 33(6), 927–928, 2020, doi:10.1002/ca.23601.

- D.J. Hornsby, R. Osman, “Massification in higher education: large classes and student learning,” Higher Education, 67(6), 711–719, 2014, doi:10.1007/s10734-014-9733-1.

- D.R. Garrison, H. Kanuka, “Blended learning: Uncovering its transformative potential in higher education,” Internet and Higher Education, 7(2), 95–105, 2004, doi:10.1016/j.iheduc.2004.02.001.

- G. Siemens, D. Gašević, S. Dawson, Preparing for the digital university: A review of the history and current state of distance, blended, and online learning, Athabasca University, Arlington: Link Research Lab.: 234, 2015.

- Eurydice, La profesión docente en Europa. Prácticas, percepciones y políticas, Oficina de Publicaciones de la Unión Europea, Luxemburgo, 2015.

- OCDE, La definición y selección de las competencias clave. Resumen ejecutivo, DeSeCo, OCDE: 20, 2005.

- T. Valencia-Molina, A. Serna-Collazos, S. Ochoa-Angrino, A.M. Caicedo-Tamayo, J.A. Montes-González, J.D. Chávez-Vescance, Competencias y estándares TIC desde la dimensión pedagógica: una perspectiva desde los niveles de apropiación de las TIC en la práctica educativa docente, Cali, 2016.

- M. Bouckaert, “Designing a materials development course for EFL student teachers: principles and pitfalls,” Innovation in Language Learning and Teaching, 10(2), 90–105, 2016, doi:10.1080/17501229.2015.1090994.

- C. Flores-Lueg, R. Roig-Vila, “Percepción de estudiantes de Pedagogía sobre el desarrollo de su competencia digital a lo largo de su proceso formativo,” Estudios pedagógicos, 42(3), 7, 2016.

- J. Garrido M, D. Contreras G, C. Miranda J, “Analysis of the pedagogical available of preservices teacher to use ICT,” Estudios Pedagógicos (Valdivia), 39(ESPECIAL), 59–74, 2013, doi:10.4067/S0718-07052013000300005.

- A. Martínez, J.G. Cegarra Navarro, J.A. Rubio Sánchez, “Aprendizaje basado en competencias: Una propuesta para la autoevaluación del docente,” Profesorado. Revista de Currículum y Formación de Profesorado, 16(2), 325–338, 2012.

- P. Martínez Clares, B. Echeverría Samanes, “Formación basada en competencias,” Revista de Investigación Educativa, 27(1), 125–147, 2009.

- A. Blasco Olivares, G. Durban Roca, “La competencia informacional en la enseñanza obligatoria a partir de la articulación de un modelo específico,” Revista española de Documentación Científica, 35(Monográfico), 100–135, 2012, doi:10.3989/redc.2012.mono.979.

- L. González Niño, G.P. Marciales Vivas, H.A. Castañeda Peña, J.W. Barbosa Chacón, J.C. Barbosa Herrera, “Competencia informacional: desarrollo de un instrumento para su observación,” Lenguaje, 41(1), 105–131, 2013.

- S.U. Kim, D. Shumaker, “Student, Librarian, and Instructor Perceptions of Information Literacy Instruction and Skills in a First Year Experience Program: A Case Study,” The Journal of Academic Librarianship, 41(4), 449–456, 2015, doi:10.1016/j.acalib.2015.04.005.

- E. Kuiper, M. Volman, J. Terwel, “Developing Web literacy in collaborative inquiry activities,” Computers & Education, 52, 668–680, 2009, doi:10.1016/j.compedu.2008.11.010.

- F. Martinez-Abad, P. Torrijos-Fincias, M.J. Rodríguez-Conde, “The eAssessment of Key Competences and their Relationship with Academic Performance,” Journal of Information Technology Research, 9(4), 16–27, 2016, doi:10.4018/JITR.2016100102.

- E. Resnis, K. Gibson, A. Hartsell-Gundy, M. Misco, “Information literacy assessment: a case study at Miami University,” New Library World, 111(7/8), 287–301, 2010, doi:10.1108/03074801011059920.

- H. Saito, K. Miwa, “Construction of a learning environment supporting learners’ reflection: A case of information seeking on the Web,” Computers & Education, 49(2), 214–229, 2007, doi:10.1016/j.compedu.2005.07.001.

- S. Santharooban, “Analyzing the level of information literacy skills of medical undergraduate of Eastern University, Sri Lanka,” Journal of the University Librarians Association of Sri Lanka, 19(2), 2016.

- S. Santharooban, P.G. Premadasa, “Development of an information literacy model for problem based learning,” Annals of Library and Information Studies (ALIS), 62(3), 138–144, 2015.

- S.C. Kong, “A curriculum framework for implementing information technology in school education to foster information literacy,” Computers & Education, 51, 129–141, 2008, doi:10.1016/j.compedu.2007.04.005.

- A. Pérez Escoda, M.J. Rodríguez Conde, “Evaluación de las competencias digitales autopercibidas del profesorado de Educación Primaria en Castilla y León (España),” Revista de Investigación Educativa, 34(2), 399–415, 2016, doi:10.6018/rie.34.2.215121.

- M. Claro, A. Salinas, T. Cabello-Hutt, E. San Martín, D.D. Preiss, S. Valenzuela, I. Jara, “Teaching in a Digital Environment (TIDE): Defining and measuring teachers’ capacity to develop students’ digital information and communication skills,” Computers & Education, 121, 162–174, 2018, doi:10.1016/j.compedu.2018.03.001.

- Enlaces, Competencias TIC en la Profesión Docente, Ministerio de Educación, Chile, Santiago de Chile, 2007.

- Enlaces, Estándares en Tecnología de la Información y la Comunicación para la Formación Inicial Docente, Ministerio de Educación, Chile, Santiago de Chile, 2007.

- Enlaces, Matriz de habilidades TIC para el Aprendizaje, 2013.

- I. Jara, M. Claro, J.E. Hinostroza, E. San Martín, P. Rodríguez, T. Cabello, A. Ibieta, C. Labbé, “Understanding factors related to Chilean students’ digital skills: A mixed methods analysis,” Computers & Education, 88, 387–398, 2015, doi:10.1016/j.compedu.2015.07.016.

- I. Aguaded, I. Marín-Gutiérrez, E. Díaz-Pareja, “La alfabetización mediática entre estudiantes de primaria y secundaria en Andalucía (España),” RIED. Revista Iberoamericana de Educación a Distancia, 18(2), 275–298, 2015, doi:10.5944/ried.18.2.13407.

- M. Fuentes Agustí, C. Monereo Font, “Cómo buscan información en Internet los adolescentes,” Investigación en la escuela, (64), 45–58, 2008.

- M.J. Grant, A.J. Brettle, “Developing and evaluating an interactive information skills tutorial,” Health Information & Libraries Journal, 23(2), 79–88, 2006, doi:10.1111/j.1471-1842.2006.00655.x.

- N. Landry, J. Basque, “L’éducation aux médias : contributions, pratiques et perspectives de recherche en sciences de la communication,” Communiquer. Revue de communication sociale et publique, (15), 47–63, 2015, doi:10.4000/communiquer.1664.

- G.P. Marciales Vivas, H.A. Castañeda-Peña, J.W. Barbosa-Chacón, I. Barreto, L. Melo, “Fenomenografía de las competencias informacionales: perfiles y transiciones,” Revista Latinoamericana de Psicología, 58–68, 2016, doi:10.1016/j.rlp.2015.09.007.

- M.L. Tiscareño Arroyo, J. de J. Cortés-Vera, “Competencias informacionales de estudiantes universitarios: una responsabilidad compartida. Una revisión de la literatura en países latinoamericanos de habla hispana,” Revista Interamericana de Bibliotecología, 37(2), 117–126, 2014.

- J.H. McMillan, S. Schumacher, Research in Education: Evidence-Based Inquiry, 7th Edition, Pearson, New Jersey, 2010.

- C. Anwar, A. Saregar, Y. Yuberti, N. Zellia, W. Widayanti, R. Diani, I.S. Wekke, “Effect size test of learning model arias and PBL: Concept mastery of temperature and heat on senior high school students,” Eurasia Journal of Mathematics, Science and Technology Education, 15(3), 2019, doi:10.29333/ejmste/103032.

- R. Sagala, R. Umam, A. Thahir, A. Saregar, I. Wardani, “The effectiveness of stem-based on gender differences: The impact of physics concept understanding,” European Journal of Educational Research, 8(3), 753–761, 2019, doi:10.12973/eu-jer.8.3.753.

- Asociación Chilena de Municipalidades, Universidad San Sebastián, COMUNAS Y EDUCACIÓN: Una aproximación a la oferta educativa comunal, Dirección de Estudios AMUCH Asociación de Municipalidades de Chile, Santiago (Chile): 34, 2017.

- M. Bielva Calvo, F.M. Martínez Abad, M.J. Rodríguez Conde, “Validación psicométrica de un instrumento de evaluación de competencias informacionales en la educación secundaria,” Bordón. Revista de Pedagogía, 69(1), 27–43, 2016, doi:10.13042/Bordon.2016.48593.

- J. Cabero Almenara, “Formación del profesorado universitario en TIC. Aplicación del método Delphi para la selección de los contenidos formativos,” Educación XX1, 17(1), 111–132, 2013, doi:10.5944/educxx1.17.1.10707.

- J. Cabero Almenara, M. Llorente Cejudo, V. Marín Díaz, “Hacia el diseño de un instrumento de diagnóstico de ‘competencias tecnológicas del profesorado’ universitario,” 2010.

- F. Martínez Abad, S. Olmos Migueláñez, M.J. Rodríguez Conde, “Evaluación de un programa de formación en competencias informacionales para el futuro profesorado de E.S.O.,” Revista de Educacion, 370, 45–70, 2015.

- D. George, P. Mallery, SPSS for Windows Step by Step: A Simple Guide and Reference, 11.0 Update, Allyn and Bacon, 2003.

- J.C. Nunnally, Psychometric theory, 2nd ed., McGraw-Hill, New York, 1978.

- M.E. Legget, M. Toh, A. Meintjes, S. Fitzsimons, G. Gamble, R.N. Doughty, “Digital devices for teaching cardiac auscultation – a randomized pilot study,” Medical Education Online, 23(1), 1524688, 2018, doi:10.1080/10872981.2018.1524688.

- A. Tahriri, M.D. Tous, S. MovahedFar, “The Impact of Digital Storytelling on EFL Learners’ Oracy Skills and Motivation,” International Journal of Applied Linguistics and English Literature, 4(3), 144–153, 2015, doi:10.7575/aiac.ijalel.v.4n.3p.144.

- J. García-Martín, J.-N. García-Sánchez, “Pre-service teachers’ perceptions of the competence dimensions of digital literacy and of psychological and educational measures,” Computers & Education, 107(Supplement C), 54–67, 2017, doi:10.1016/j.compedu.2016.12.010.